RAG indexing: Structure and evaluate for grounded LLM answers

Guide to what RAG indexing is, how it works, key strategies, when to refresh, and how to measure performance for grounded LLM answers.


RAG (retrieval-augmented generation) indexing determines how well an AI system grounds its answers in relevant information. It enables the system to quickly locate relevant documents for a search query.

Developing RAG indexing involves building a pipeline with the following steps:

- Data collection

- Meaningful chunking

- Metadata addition

- Embedding

- Storage in a vector index

Good indexing reduces AI hallucinations and improves relevance across both structured and unstructured data.

RAG indexing strategies, such as hybrid, hierarchical, domain-specific, and graph-based, adapt to the structure of the knowledge base and the retrieval system's goals.

Issues caused by scale, chunk size, and metadata quality can be handled with clean data, consistent embeddings, and regular updates.

Factors such as speed, cost, integration needs, and dataset size are important considerations when selecting a vector database.

RAG indexing differs from keyword search by using embeddings rather than exact term matches.

Future RAG developments are moving toward hybrid retrieval methods, graph-enhanced approaches, and RAPTOR-style hierarchical indexing.

What is RAG indexing?

RAG indexing is the process by which a RAG system organizes and prepares data so that, when prompted by a user query, the correct information can be easily retrieved.

It takes the source documents, splits them into smaller chunks, creates vector representations (embeddings) of those chunks, and stores them in a searchable index so the system can quickly find them when needed.

Without indexing, a RAG system would not know how to connect a user’s question to the relevant context. The index serves as a map of the knowledge base, enabling the retriever to quickly locate the most relevant text without having to search the entire knowledge base.

That retrieved information is then passed to the LLM (large language model) to generate an appropriate answer to the query.

How does RAG indexing work?

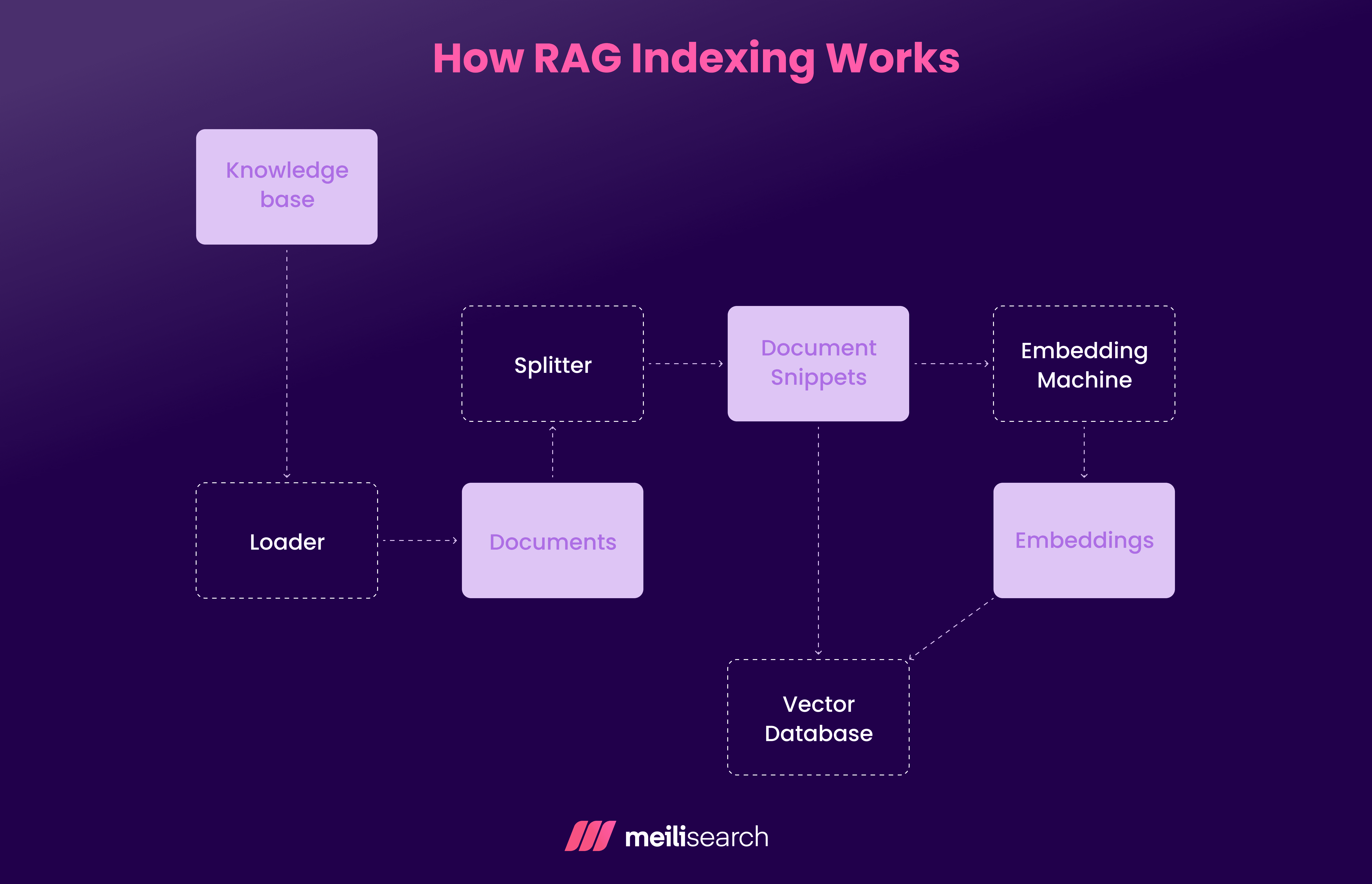

RAG indexing organizes the source data so that the RAG system can quickly find and return the most relevant chunks of information. It converts raw documents into searchable vector embeddings and stores them in a vector database, enabling fast similarity searches.

Here are the main stages of RAG indexing:

- Data collection: RAG indexing begins by collecting data from various sources, such as PDFs, blog articles, FAQ spreadsheets, and internal documents.

- Cleaning the text: Next, the collected data is cleaned. This may involve removing repeated headers, fixing garbled or unparseable characters, and collapsing extra whitespace.

- Document chunking: Long documents are split into smaller chunks so each piece fits within the embedding model's input limit and the LLM's context window, and so retrieval can target specific passages. The chunks should be large enough to retain context but small enough to be processed efficiently. For instance, instead of treating a 40-page policy manual as one giant block of text, you can break it into hundreds of smaller sections.

- Tagging with metadata: Next, each chunk is tagged with metadata for quick filtering. Metadata fields include title, data source, topic, category, or date. For example, tagging a chunk from a refund policy document with ‘topic: refunds’ lets the system filter straight to it when a user asks a related question.

- Embedding: The chunks are then converted into vector embeddings. The embedding model maps text with similar meaning to nearby points in vector space. For example, chunks about ‘refund rules’ and ‘return policy’ should end up close together because they discuss related concepts, even though they use different words.

- Vector storage: Finally, these vectors and their metadata tags are stored in a vector database or vector index. Common options include vector databases such as Pinecone, Milvus, and Weaviate, and libraries such as FAISS.
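
The stages above can be sketched end to end in a few lines. This is a minimal, self-contained sketch, not a production pipeline: `embed` is a hypothetical stand-in for a real embedding model (a hashed bag-of-words vector), `VectorIndex` is an in-memory stand-in for a vector database, and the document names are made up for illustration.

```python
import hashlib
import math
import re
from dataclasses import dataclass, field

DIM = 64  # toy size; real embedding models output hundreds or thousands of dimensions


def embed(text: str) -> list[float]:
    """Hypothetical stand-in for an embedding model: hashed bag-of-words, L2-normalized."""
    vec = [0.0] * DIM
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        vec[int(hashlib.md5(token.encode()).hexdigest(), 16) % DIM] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def clean(text: str) -> str:
    """Drop non-printable characters and collapse runs of whitespace."""
    text = "".join(ch for ch in text if ch.isprintable() or ch.isspace())
    return re.sub(r"\s+", " ", text).strip()


def chunk(text: str, max_words: int = 50) -> list[str]:
    """Naive fixed-size chunking by word count."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


@dataclass
class VectorIndex:
    """In-memory index: each entry holds chunk text, its vector, and metadata tags."""
    entries: list[dict] = field(default_factory=list)

    def add(self, text: str, metadata: dict) -> None:
        self.entries.append({"text": text, "vector": embed(text), "metadata": metadata})

    def search(self, query: str, k: int = 3, **filters) -> list[dict]:
        qv = embed(query)
        # Apply metadata filters first, then rank the survivors by similarity
        candidates = [e for e in self.entries
                      if all(e["metadata"].get(key) == val for key, val in filters.items())]
        # Vectors are unit length, so the dot product is the cosine similarity
        candidates.sort(key=lambda e: sum(a * b for a, b in zip(qv, e["vector"])), reverse=True)
        return candidates[:k]


def build_index(sources: dict[str, dict]) -> VectorIndex:
    """Collect -> clean -> chunk -> tag -> embed -> store."""
    index = VectorIndex()
    for source, doc in sources.items():
        for piece in chunk(clean(doc["text"])):
            index.add(piece, {"source": source, "topic": doc["topic"]})  # embedding happens in add()
    return index


docs = {
    "refund-policy.pdf": {"text": "Refunds are issued\n\nwithin 14 days of a return.", "topic": "refunds"},
    "shipping-faq.md": {"text": "Orders ship within two business days.", "topic": "shipping"},
}
index = build_index(docs)
hits = index.search("how long do refunds take", k=1, topic="refunds")
print(hits[0]["metadata"]["source"], "->", hits[0]["text"])
# refund-policy.pdf -> Refunds are issued within 14 days of a return.
```

Swapping `embed` for a real model and `VectorIndex` for a real vector store keeps the same shape: the expensive work (cleaning, chunking, embedding) happens once at indexing time, and queries only embed the query text.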

Why is indexing important in RAG?

RAG indexing makes RAG systems more accurate by organizing information so the LLM can quickly find and use the right content when answering a question.

Here are some of its benefits:

- Speed: Without indexing, the system would have to scan every document in its entirety whenever someone asks a question. Indexing stores precomputed embeddings, so at query time the system only needs to embed the query and look up the nearest chunks.

- Relevance: Because chunks are embedded and tagged with metadata, the algorithm can match a user’s query to the most meaningful pieces of text. This precise retrieval enhances the relevance of answers.

- Accuracy: Well-chunked, well-tagged indexes preserve context and meaning. Since the retrieval step is cleaner and more targeted, the final answer is better grounded in correct information.

- Scalability: Vector databases and indexing techniques are built to handle large data volumes. You can update or expand the knowledge base over time without significantly degrading the RAG system's performance.

What are the main RAG indexing strategies?

Different indexing strategies fit different RAG use cases. Picking the right one mainly depends on the content you want to search through.

Your indexing strategy can also impact the relevance and performance of the RAG.

Here are several common RAG indexing strategies and when you would use them:

1. Hierarchical indexing

Hierarchical indexing organizes content at multiple levels. Each document is broken down into sections, then paragraphs, and finally chunks. This strategy respects the document's structure instead of splitting it at arbitrary points.

For example, the retriever might first locate the relevant section of a legal contract (e.g., ‘Termination Clause’) and then the exact paragraph that answers the user query.

You can use this technique when your documents, such as manuals or textbooks, are long and logically organized. It reduces noise, improves relevance, and allows the retriever to first narrow down the section before searching in-depth.
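
Here is a minimal sketch of that two-stage lookup. It assumes a document already parsed into a section-to-paragraphs mapping, and it uses simple word overlap (Jaccard) as a hypothetical stand-in for vector similarity so the example stays self-contained. The contract text is invented for illustration.

```python
import re


def tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z]+", text.lower()))


def overlap(query: str, text: str) -> float:
    """Jaccard word overlap; a stand-in for embedding similarity."""
    q, t = tokens(query), tokens(text)
    return len(q & t) / len(q | t) if q | t else 0.0


# Hypothetical document -> sections -> paragraphs hierarchy
contract = {
    "Payment Terms": [
        "Invoices are due within 30 days of receipt.",
        "Late payments accrue interest at 1% per month.",
    ],
    "Termination Clause": [
        "Either party may terminate this agreement with 60 days written notice.",
        "Termination for cause takes effect immediately upon written notice.",
    ],
}


def hierarchical_search(query: str, doc: dict[str, list[str]]) -> tuple[str, str]:
    # Stage 1: score each section by its title plus all of its paragraphs
    section = max(doc, key=lambda title: overlap(query, title + " " + " ".join(doc[title])))
    # Stage 2: search only the paragraphs inside the winning section
    paragraph = max(doc[section], key=lambda p: overlap(query, p))
    return section, paragraph


result = hierarchical_search("how much notice is needed to terminate the agreement", contract)
# ('Termination Clause', 'Either party may terminate this agreement with 60 days written notice.')
```

In a real system, each level would typically have its own embeddings (for example, section summaries and paragraph chunks), but the narrowing logic stays the same.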

2. Hybrid indexing

Hybrid indexing maintains both a keyword index (scored with an algorithm such as BM25) and a vector index over the same content. At query time, the AI system searches both and merges the results to improve accuracy.

For example, when searching for ‘how to fix my damaged electric kettle,’ a keyword search identifies documents containing those exact terms, while a vector search retrieves semantically related content, such as guides on repairing other small electrical appliances.

This strategy is ideal when you want both precision and coverage. It is also useful when migrating from classic search tools to RAG tools without losing keyword search performance.
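
One common way to merge the two result lists is reciprocal rank fusion (RRF), which scores each document by summing `1 / (k + rank)` across the lists it appears in. The sketch below assumes you already have ranked document IDs from a keyword retriever and a vector retriever; the IDs are hypothetical, and `k = 60` is a commonly used default rather than a tuned value.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[tuple[str, float]]:
    """Merge several ranked ID lists; items ranked high in multiple lists rise to the top."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Hypothetical outputs for 'how to fix my damaged electric kettle'
keyword_hits = ["kettle-repair", "kettle-manual", "warranty"]     # exact-term matches (e.g., BM25)
vector_hits = ["appliance-repair", "kettle-repair", "toaster-fix"]  # semantic matches

fused = reciprocal_rank_fusion([keyword_hits, vector_hits])
print([doc_id for doc_id, _ in fused[:3]])
# ['kettle-repair', 'appliance-repair', 'kettle-manual']
```

RRF only looks at ranks, so it sidesteps the problem that BM25 scores and cosine similarities live on different scales. Weighted score blending is an alternative when you want explicit control over the keyword-versus-semantic balance.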

3. Time-based indexing

Time-based indexing keeps track of information that changes over time. Each document or section is stored with a date label, so the retriever can filter for, or prioritize, the most recent versions.

This strategy is useful for materials that are frequently updated, such as financial reports, company guidelines, or news articles.
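
A minimal sketch of date-aware retrieval, assuming each chunk carries a hypothetical `doc_id` and an `effective` date and that versioning happens at the document level. It keeps only the newest version of each document, optionally as of a given date (useful for questions like "what was the policy last year?").

```python
from datetime import date
from typing import Optional

# Hypothetical chunks: two versions of the travel policy, one expense policy
chunks = [
    {"doc_id": "travel-policy", "text": "Economy class for flights under 6 hours.", "effective": date(2022, 1, 1)},
    {"doc_id": "travel-policy", "text": "Economy class for flights under 8 hours.", "effective": date(2024, 3, 1)},
    {"doc_id": "expense-policy", "text": "Submit receipts within 30 days.", "effective": date(2023, 6, 1)},
]


def latest_versions(chunks: list[dict], as_of: Optional[date] = None) -> list[dict]:
    """Return the newest version of each document, ignoring versions after `as_of`."""
    latest: dict[str, dict] = {}
    for c in chunks:
        if as_of is not None and c["effective"] > as_of:
            continue  # not yet in effect at the requested date
        current = latest.get(c["doc_id"])
        if current is None or c["effective"] > current["effective"]:
            latest[c["doc_id"]] = c
    return list(latest.values())


now = latest_versions(chunks)
then = latest_versions(chunks, as_of=date(2023, 1, 1))
```

In practice this filter usually runs as a metadata filter inside the vector store before similarity ranking, rather than as a separate pass.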

4. Multi-representation indexing

Multi-representation indexing stores multiple vector embeddings for the same piece of text within a single retrieval system. Each embedding focuses on a different aspect of the content. One might capture the overall idea, another might emphasize technical language.

When a user types a search query, it is compared against every representation, and each match points back to the same underlying chunk.

This method works well in areas where information can be interpreted in several ways. It is also useful when precision is essential and the language used in your documents is specialized.
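
The sketch below shows the core idea: several representations (raw text, a summary, a likely user question) all map back to one chunk ID, and a chunk's score is its best-matching representation. Word-overlap similarity is a hypothetical stand-in for embedding similarity, and the summaries and questions are hand-written here; in practice they are often generated by an LLM.

```python
import re


def tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z]+", text.lower()))


def similarity(a: str, b: str) -> float:
    """Jaccard overlap; a stand-in for cosine similarity between embeddings."""
    ta, tb = tokens(a), tokens(b)
    return len(ta & tb) / len(ta | tb) if ta | tb else 0.0


# Hypothetical representations: (chunk id, representation kind, text)
representations = [
    ("chunk-1", "raw", "The API returns HTTP 429 when the request quota is exceeded."),
    ("chunk-1", "summary", "Rate limiting behavior of the API."),
    ("chunk-1", "question", "Why am I getting too many requests errors?"),
    ("chunk-2", "raw", "Tokens expire after 3600 seconds and must be refreshed."),
    ("chunk-2", "summary", "Access token lifetime and refresh."),
    ("chunk-2", "question", "How long does my login token last?"),
]


def search(query: str, k: int = 1) -> list[tuple[str, tuple[float, str]]]:
    """Score every representation, keep the best score per chunk, return top-k chunks."""
    best: dict[str, tuple[float, str]] = {}
    for chunk_id, kind, text in representations:
        score = similarity(query, text)
        if score > best.get(chunk_id, (-1.0, ""))[0]:
            best[chunk_id] = (score, kind)
    return sorted(best.items(), key=lambda item: item[1][0], reverse=True)[:k]


result = search("why do I get too many requests")
# [('chunk-1', (0.5, 'question'))]
```

Here the raw text ("HTTP 429", "quota") shares no words with the user's phrasing, but the question-style representation matches well, which is exactly the gap this strategy is meant to close.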

5. Domain indexing

Domain indexing is designed to match the terminology of a particular field. For example, in medical data, the system might use drug names or symptom categories to label chunks. In software documentation, the index could be organized by API endpoints or code use cases.

This approach works best when your material follows clear patterns and uses consistent terms.

It improves search relevance because the index is built around how professionals in that field naturally search for information, rather than treating all text the same.
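
A minimal sketch of domain tagging at indexing time, assuming a hypothetical hand-built vocabulary that maps surface terms (including brand names) to a field and a canonical label. Storing these tags as metadata lets a query about "acetaminophen" filter to chunks that only mention "Tylenol".

```python
import re

# Hypothetical medical vocabulary: surface term -> (metadata field, canonical label)
VOCABULARY = {
    "ibuprofen": ("drug", "ibuprofen"),
    "advil": ("drug", "ibuprofen"),
    "paracetamol": ("drug", "acetaminophen"),
    "tylenol": ("drug", "acetaminophen"),
    "headache": ("symptom", "headache"),
    "fever": ("symptom", "fever"),
}


def domain_tags(text: str) -> dict[str, list[str]]:
    """Collect canonical domain labels mentioned in a chunk, grouped by field."""
    tags: dict[str, set[str]] = {}
    for token in re.findall(r"[a-z]+", text.lower()):
        if token in VOCABULARY:
            field, label = VOCABULARY[token]
            tags.setdefault(field, set()).add(label)
    return {field: sorted(labels) for field, labels in tags.items()}


print(domain_tags("Take Advil or Tylenol for a headache."))
# {'drug': ['acetaminophen', 'ibuprofen'], 'symptom': ['headache']}
```

Real domain vocabularies usually come from curated sources (drug databases, API specs) rather than a hand-written dictionary, and multi-word terms need phrase matching rather than single-token lookup.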

6. Graph-enhanced indexing

Graph-enhanced indexing represents text chunks and related entities (such as topics, categories, or citations) as nodes and links them with relationships, called edges. The graph makes it easy to see how multiple chunks are connected.

Use this strategy where relationships matter more than similarity. It is also ideal for complex domains with interconnected concepts, such as legal matters or academic research.
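
A common retrieval pattern with such a graph is to find seed chunks with vector search, then pull in neighbors by following edges for a limited number of hops. This sketch assumes a hypothetical adjacency list of legal chunks and uses breadth-first search to record how many hops away each related chunk is.

```python
from collections import deque

# Hypothetical graph: chunk id -> list of (relation, neighbor chunk id)
EDGES = {
    "contract-7.2": [("cites", "statute-12"), ("amends", "contract-7.1")],
    "statute-12": [("interpreted_by", "case-2019-44")],
    "contract-7.1": [],
    "case-2019-44": [],
}


def expand(seeds: list[str], max_hops: int = 1) -> dict[str, int]:
    """Breadth-first expansion from seed chunks; returns chunk id -> hop distance."""
    seen = {s: 0 for s in seeds}
    queue = deque(seeds)
    while queue:
        node = queue.popleft()
        if seen[node] == max_hops:
            continue  # do not follow edges beyond the hop limit
        for _relation, neighbor in EDGES.get(node, []):
            if neighbor not in seen:
                seen[neighbor] = seen[node] + 1
                queue.append(neighbor)
    return seen


# Seed would normally come from a vector search hit
one_hop = expand(["contract-7.2"], max_hops=1)
two_hops = expand(["contract-7.2"], max_hops=2)
```

Keeping the hop limit small matters: each extra hop can multiply the number of chunks passed to the LLM.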

What are common challenges in RAG indexing?

RAG indexing involves choices that affect speed, accuracy, and long-term maintenance.

Here are some common challenges and how you can overcome them:

- Handling large datasets: When you are working with thousands of documents (or millions of chunks), indexing and searching can become slow, and storage costs go up. Common solutions are to shard the index, compress embeddings (for example, with quantization), or remove low-value content rather than indexing everything.

- Picking the right chunk size: Chunks that are too long can dilute relevance with unrelated context, and chunks that are too short can lose their meaning. To find the right balance, test different token lengths (e.g., 200, 400, 600) and evaluate their retrieval quality before deciding on a chunk size.

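- Keeping embeddings consistent: Vectors produced by different embedding models live in different vector spaces, so if you change the model, query vectors from the new model cannot be meaningfully compared with document vectors from the old one. That leads to bad retrieval. To avoid this, version your embeddings and re-embed the whole corpus with the new model before switching queries over to it.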

- Managing metadata: Messy or inconsistent metadata makes filtering useless. You can fix this by defining an explicit schema (e.g., source, date, format, or access level) from the start and enforcing it during ingestion.
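
Enforcing a metadata schema at ingestion can be as simple as validating each chunk's tags before it is written to the index. This is a minimal sketch; the field names, types, and allowed access levels are hypothetical examples based on the schema fields mentioned above.

```python
from datetime import date

# Hypothetical schema: field name -> required Python type
SCHEMA = {"source": str, "date": date, "format": str, "access_level": str}
ALLOWED_ACCESS = {"public", "internal", "restricted"}


def validate_metadata(meta: dict) -> list[str]:
    """Return a list of schema violations; an empty list means the chunk can be indexed."""
    errors = []
    for field, expected in SCHEMA.items():
        if field not in meta:
            errors.append(f"missing field: {field}")
        elif not isinstance(meta[field], expected):
            errors.append(f"{field}: expected {expected.__name__}, got {type(meta[field]).__name__}")
    extra = set(meta) - set(SCHEMA)
    if extra:
        errors.append(f"unknown fields: {sorted(extra)}")
    level = meta.get("access_level")
    if isinstance(level, str) and level not in ALLOWED_ACCESS:
        errors.append(f"access_level: {level!r} not in {sorted(ALLOWED_ACCESS)}")
    return errors


good = {"source": "hr-handbook.pdf", "date": date(2024, 5, 1), "format": "pdf", "access_level": "internal"}
bad = {"source": "wiki", "date": "2024-05-01", "access_level": "secret", "Topic": "x"}
print(validate_metadata(good))  # []
for problem in validate_metadata(bad):
    print(problem)
```

Rejecting (or quarantining) chunks that fail validation keeps typos like `Topic` versus `topic` from silently breaking filters later.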

What are the best practices for RAG indexing pipelines?

The quality of your chunks, embeddings, and metadata directly shapes what the AI model can find and use. Here are some best practices for building and maintaining RAG indexing workflows:

- Always start with clean and meaningful data: Never dump everything into the index. Remove duplicates and filter out low-value text, such as headers, navigation menus, or disclaimers. Cleaner input means cleaner retrieval and fewer irrelevant matches later.

- Chunk by meaning, not by fixed length: Instead of automatically slicing text every 300 tokens, break it around natural boundaries. This includes headings, paragraphs, bullet lists, or API endpoints. It keeps context intact and reduces confusion during retrieval.

- Keep embeddings and models aligned: If you update your embedding model or retriever, do not leave the index behind. Re-embed the corpus with the new model, and version your vectors so that queries are only ever compared with vectors produced by the same model.

- Always add metadata: Good tags make filtering more powerful. Try adding tags such as source, document type, date, section, or access level. This helps the system narrow results quickly and stay grounded in the proper context.

- Use smart retrieval techniques: Hybrid search (combining keywords and vectors) and query rewriting (for example, to correct typos) boost recall, while light reranking keeps junk out of the top results.

- Monitor and refresh the index: Always track retrieval quality, stale documents, and storage bloat. In addition, replace outdated content and reduce noise instead of letting the index grow unchecked.
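
To illustrate chunking by meaning, here is a minimal sketch for Markdown-style text: it splits on headings and blank lines, packs whole paragraphs into chunks up to a word budget, never lets a chunk cross a heading, and records the heading as metadata. The word budget and input format are assumptions; a single paragraph longer than the budget stays as one chunk in this sketch.

```python
import re


def semantic_chunks(text: str, max_words: int = 120) -> list[dict]:
    """Split on headings and blank lines; pack whole paragraphs up to max_words per chunk."""
    chunks: list[dict] = []
    buffer: list[str] = []
    heading = None

    def flush() -> None:
        if buffer:
            chunks.append({"heading": heading, "text": "\n\n".join(buffer)})
            buffer.clear()

    for block in re.split(r"\n\s*\n", text.strip()):
        block = block.strip()
        if block.startswith("#"):
            flush()  # a new heading always starts a new chunk
            heading_line, _, rest = block.partition("\n")
            heading = heading_line.lstrip("#").strip()
            block = rest.strip()
            if not block:
                continue
        if buffer and len(" ".join(buffer + [block]).split()) > max_words:
            flush()  # adding this paragraph would exceed the budget
        buffer.append(block)
    flush()
    return chunks


doc = "# Refunds\nRefunds take 14 days.\n\nStore credit is instant.\n\n# Shipping\nOrders ship in 2 days."
for c in semantic_chunks(doc):
    print(c["heading"], "|", c["text"].replace("\n\n", " / "))
# Refunds | Refunds take 14 days. / Store credit is instant.
# Shipping | Orders ship in 2 days.
```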

How Meilisearch helps

Meilisearch supports vector search and hybrid querying, which makes it a good fit for RAG indexing pipelines. You can store your text chunks and their embeddings directly in the index, instead of using a separate vector database. That means your indexing step can include both chunking and attaching embeddings.

Meilisearch also lets you filter by metadata such as source, tags, or date, which keeps retrieval grounded and avoids random matches.

Since Meilisearch handles both semantic search and keyword search, you can combine exact terms with vector similarity. This is perfect when your users mix natural phrasing with domain-specific words.

Another bonus is speed! Indexing is fast, and updating entries doesn’t require a complete rebuild. That helps when documents change or you need to refresh embeddings. In a RAG setup, Meilisearch acts as a searchable store for meaning and structure without overcomplicating the pipeline.
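
As a rough sketch of what this looks like in practice, the code below builds request bodies for Meilisearch's REST API: an index settings update that declares a `userProvided` embedder and filterable attributes, documents that carry their own vectors under `_vectors`, and a hybrid search that mixes keyword and semantic ranking via `semanticRatio`. It is based on the documented v1.x API, so check the parameter names against your Meilisearch version. The URL, key, index name, embedder name `default`, and three-dimensional vectors are all placeholder assumptions.

```python
import json
import urllib.request
from typing import Optional

MEILI_URL = "http://localhost:7700"  # assumption: local Meilisearch instance
API_KEY = "MASTER_KEY"               # assumption: replace with a real key
INDEX = "chunks"                     # hypothetical index name


def settings_payload(dimensions: int) -> dict:
    # "userProvided": you compute embeddings yourself and send them with each document
    return {
        "embedders": {"default": {"source": "userProvided", "dimensions": dimensions}},
        "filterableAttributes": ["source", "topic"],
    }


def chunk_document(chunk_id: str, text: str, vector: list[float], **metadata) -> dict:
    # The chunk text, its vector, and metadata live together in one document
    return {"id": chunk_id, "text": text, "_vectors": {"default": vector}, **metadata}


def hybrid_query(q: str, vector: list[float], semantic_ratio: float = 0.5,
                 filter_expr: Optional[str] = None) -> dict:
    # semanticRatio: 0.0 = keyword only, 1.0 = vector only
    body = {"q": q, "vector": vector,
            "hybrid": {"embedder": "default", "semanticRatio": semantic_ratio}}
    if filter_expr:
        body["filter"] = filter_expr
    return body


def call(method: str, path: str, payload) -> dict:
    req = urllib.request.Request(
        f"{MEILI_URL}{path}", data=json.dumps(payload).encode(), method=method,
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {API_KEY}"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())


# Against a running instance (settings and document calls enqueue asynchronous tasks):
# call("PATCH", f"/indexes/{INDEX}/settings", settings_payload(3))
# call("POST", f"/indexes/{INDEX}/documents",
#      [chunk_document("refunds-1", "Refunds take 14 days.", [0.1, 0.2, 0.3], topic="refunds")])
# call("POST", f"/indexes/{INDEX}/search",
#      hybrid_query("refund timeline", [0.1, 0.2, 0.25], filter_expr="topic = refunds"))
```

Meilisearch can also be configured to generate embeddings itself through an embedder provider, in which case you would not need to send vectors with documents or queries.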

When should you update a RAG index?

You should update the RAG index whenever the data, documents, or the embedding model change in a way that could affect retrieval quality.

Suppose you add new documents, update policies, change pricing, publish fresh blog posts, or remove outdated material. The index needs to be refreshed so the AI system does not return inaccurate answers.

The same applies if the embedding model gets upgraded. Old vectors will not match new ones, so re-embedding is needed.

In fast-changing domains, schedule updates daily or weekly, or apply incremental updates as content changes. Monthly refreshes may be enough for slower-moving content.

A good rule of thumb: if you want the new information reflected in the answers, the index should be updated.
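
One way to decide what needs refreshing is to store a content hash and the embedding model version for each indexed document, then compare them on every run. This minimal sketch assumes that bookkeeping exists; the document IDs and model names are hypothetical. Changed or new documents get re-embedded, removed ones get deleted, and a model change marks everything for re-embedding.

```python
import hashlib


def content_hash(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def plan_refresh(current_docs: dict[str, str], indexed: dict[str, dict],
                 model_version: str) -> dict[str, list[str]]:
    """`indexed` maps doc_id -> {"hash": ..., "model": ...} recorded at the last indexing run."""
    embed = []
    for doc_id, text in current_docs.items():
        record = indexed.get(doc_id)
        if (record is None                              # new document
                or record["hash"] != content_hash(text)  # content changed
                or record["model"] != model_version):    # embedding model changed
            embed.append(doc_id)
    delete = [doc_id for doc_id in indexed if doc_id not in current_docs]
    return {"embed": embed, "delete": delete}


indexed = {
    "pricing": {"hash": content_hash("Pro plan: $20"), "model": "embed-v1"},
    "faq": {"hash": content_hash("Old FAQ"), "model": "embed-v1"},
    "legacy": {"hash": content_hash("Retired page"), "model": "embed-v1"},
}
current = {"pricing": "Pro plan: $25", "faq": "Old FAQ", "blog-42": "New post"}

print(plan_refresh(current, indexed, "embed-v1"))
# {'embed': ['pricing', 'blog-42'], 'delete': ['legacy']}
```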

How can you evaluate RAG indexing performance?

You can assess RAG indexing by measuring how fast the system retrieves results, how relevant they are, and whether users find the answers helpful.

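- Speed: Measure query latency, including tail latency (e.g., the 95th percentile), not just averages. Consistently slow searches often point to a poorly structured or oversized index.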

- Semantic accuracy: A good question to ask here is, “Are the top chunks related to the query, or just loosely similar?” You can answer this with small human-reviewed samples or automated relevance checks. If you are working with both structured data (such as product tables or policy fields) and unstructured data (such as PDFs or help articles), make sure your index handles both consistently.

- User satisfaction: Track follow-up questions, unanswered queries, and user feedback to identify areas for improvement. For more structured testing, you can use evaluation tools or custom scripts that measure how often your system retrieves the correct results. If retrieval feels slow or unreliable, your indexing pipeline probably needs tuning, better chunking strategies, or fresher embeddings.
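
A custom evaluation script can be quite small. This sketch assumes you have a labeled set of queries, each with the chunk IDs a human judged relevant, plus the ranked chunk IDs your retriever returned; all IDs here are hypothetical. It computes two standard retrieval metrics: recall@k (what fraction of relevant chunks appear in the top k) and mean reciprocal rank (how high the first relevant chunk appears).

```python
def recall_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    if not relevant:
        return 0.0
    return len(set(retrieved[:k]) & relevant) / len(relevant)


def reciprocal_rank(retrieved: list[str], relevant: set[str]) -> float:
    for rank, doc_id in enumerate(retrieved, start=1):
        if doc_id in relevant:
            return 1.0 / rank
    return 0.0


def evaluate(results: dict[str, list[str]], labels: dict[str, set[str]], k: int = 3) -> dict[str, float]:
    """Average recall@k and MRR over all labeled queries."""
    queries = list(labels)
    recall = sum(recall_at_k(results.get(q, []), labels[q], k) for q in queries) / len(queries)
    mrr = sum(reciprocal_rank(results.get(q, []), labels[q]) for q in queries) / len(queries)
    return {f"recall@{k}": recall, "mrr": mrr}


# Hypothetical labeled queries and retriever output
labels = {"refund window": {"refund-1"}, "shipping time": {"ship-2", "ship-3"}}
results = {
    "refund window": ["faq-9", "refund-1", "ship-2"],
    "shipping time": ["ship-3", "refund-1", "faq-9"],
}
print(evaluate(results, labels, k=3))
# {'recall@3': 0.75, 'mrr': 0.75}
```

Re-running the same labeled set after each change to chunking, embeddings, or metadata turns "retrieval feels worse" into a number you can compare.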

What are the most popular vector stores for RAG indexing?

Some of the most popular vector stores (or vector-backed retrievers) used in RAG pipelines are Meilisearch (with its vector support), Pinecone, Weaviate, Chroma, Milvus, Qdrant, the FAISS library, and the pgvector extension for PostgreSQL.

- Meilisearch: Though not a traditional vector database, you can use it as a vector-backed retriever by storing embeddings alongside documents. It is excellent when you want a unified search layer (both keyword and vector) without managing a separate vector store. Choose Meilisearch if your dataset is moderate and you value simplicity and lightweight operations.

- Pinecone: Excellent if you do not want to deal with servers or scaling issues. It is fully managed, fast, and built for production so that you can focus on retrieval, not infrastructure.

- Weaviate: Open source and flexible. It handles hybrid search, filtering, schemas, and even relationships between data. It’s a good fit when you care about meaning and structure, not just raw embeddings.

- Chroma: Simple and developer-friendly (built with Python users in mind). It is often used in quick RAG prototypes or smaller RAG applications. If you are experimenting or do not have many vectors, Chroma is a good vector store to use.

- Milvus: Milvus is designed for scale. It is open source and works well for storing and querying millions of embeddings across machines. You should use it when performance and control matter more than convenience.

How to choose a vector DB for RAG indexing

When building a RAG system, your vector database is the backbone of the retrieval system. It needs to store embeddings efficiently and return relevant results quickly. The goal is not just picking a popular tool, but choosing one that fits how you plan to use it:

- Speed: If your app needs quick responses, latency matters a lot. A good vector database should return similar results fast, even when working with many embeddings.

- Scalability: Your data might start small, but it will not stay that way. The database you choose should handle growth without turning into a maintenance nightmare. If you expect to move from thousands to millions of vectors, choose a tool that can scale horizontally without losing performance.

- Integration: You do not want to spend hours wiring things together. Choose a database that works efficiently with your infrastructure. Clean APIs and SDK support make the development process smoother.

- Cost: Some options are fully managed and save you time, but they can get pricey as usage grows. Open-source tools are cheaper to run but require more hands-on setup and maintenance. It is all about balancing convenience with budget.

Let’s see how RAG indexing differs from traditional search indexing.

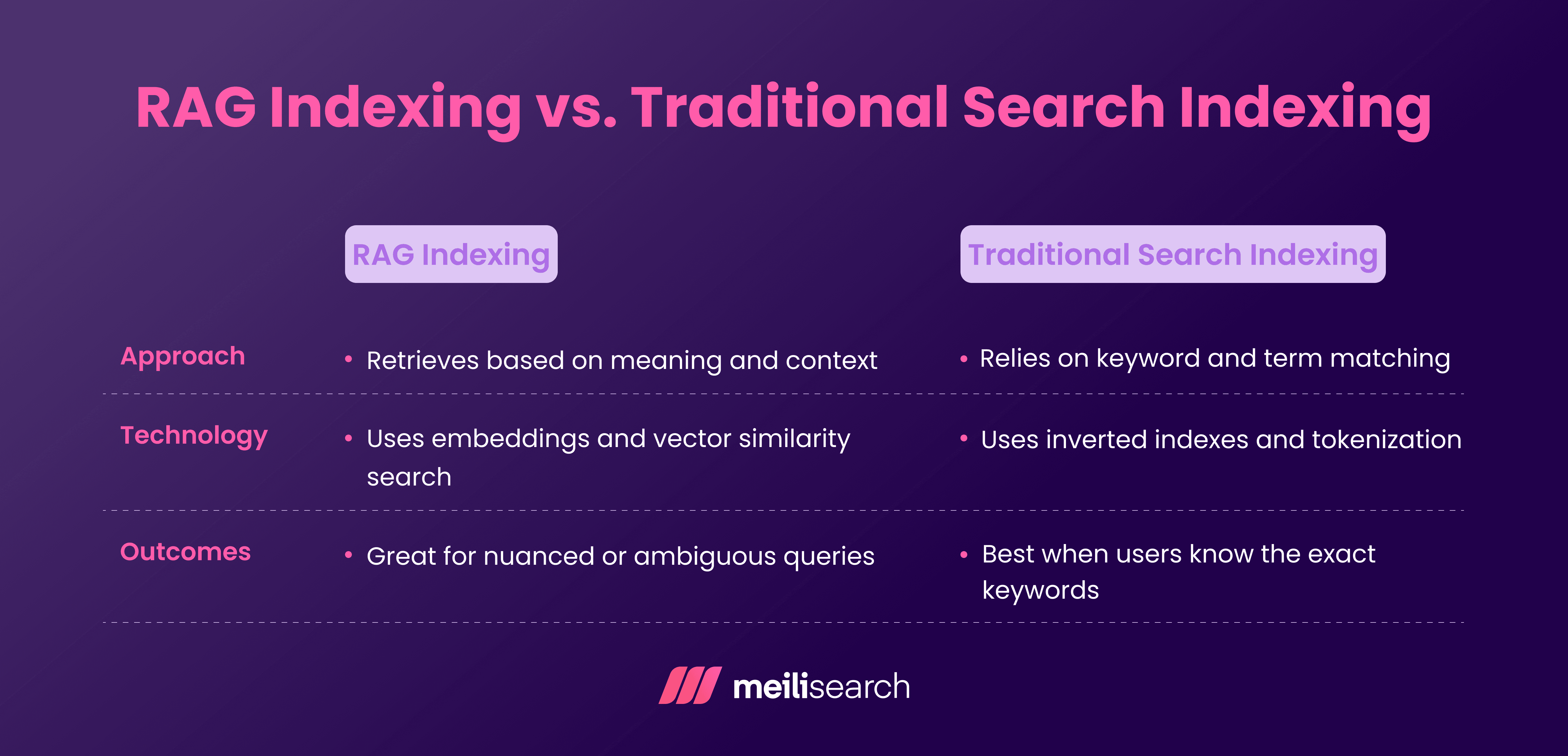

How does RAG indexing differ from traditional search indexing?

RAG indexing and traditional search indexing seem similar on the surface, but they work in very different ways.

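Traditional search indexing is built around keywords: an inverted index maps each term to the documents that contain it, and queries are matched on those terms.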

RAG indexing, on the other hand, focuses on semantic meaning. Instead of relying solely on words, it uses embeddings to capture context and retrieve relevant chunks based on semantics.

RAG indexing shines when users do not use the exact wording found in the source documents. Examples are conversational queries, domain jargon, or multilingual content.

Traditional indexing is still a good option when speed, exact matches, and lightweight infrastructure are priorities. Examples are documentation sites or e-commerce search results.
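
To make the contrast concrete, here is a minimal inverted index with boolean AND matching. The two documents are hypothetical and mean roughly the same thing, yet a keyword lookup for "refund" only finds the one that uses that exact word. An embedding-based index is designed to place both close to the query.

```python
import re
from collections import defaultdict

docs = {
    "d1": "How to request a refund for a damaged item",
    "d2": "Getting your money back after a return",
}


def build_inverted_index(docs: dict[str, str]) -> dict[str, set[str]]:
    """Map each lowercase term to the set of document IDs containing it."""
    index: dict[str, set[str]] = defaultdict(set)
    for doc_id, text in docs.items():
        for term in re.findall(r"[a-z]+", text.lower()):
            index[term].add(doc_id)
    return index


def keyword_search(index: dict[str, set[str]], query: str) -> set[str]:
    """Return documents containing every query term (boolean AND)."""
    terms = re.findall(r"[a-z]+", query.lower())
    if not terms:
        return set()
    result = set(index.get(terms[0], set()))
    for term in terms[1:]:
        result &= index.get(term, set())
    return result


inverted = build_inverted_index(docs)
print(keyword_search(inverted, "refund"))      # {'d1'}: d2 is missed despite the same meaning
print(keyword_search(inverted, "money back"))  # {'d2'}
```

Production keyword engines add ranking (such as BM25), stemming, and typo tolerance on top of this structure, but the core limitation with synonyms and paraphrases remains.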

What are future trends in RAG indexing?

RAG indexing is evolving rapidly, and the next wave involves combining smarter context handling with more effective retrieval strategies:

- Graph-powered retrieval: Instead of treating documents as isolated chunks, newer approaches link concepts using knowledge graphs. This captures relationships across topics and returns more connected answers.

- RAPTOR-style hierarchical chunking: Approaches inspired by RAPTOR organize content into layers: small leaf chunks for precision, and progressively higher-level summaries (built by clustering and summarizing those chunks) for broader context. This lets retrieval adapt to the query's depth.

- Hybrid retrieval pipelines: Mixing keyword search, semantic vectors, and metadata filters is becoming the norm. This combination improves relevance without relying on a single retrieval method.

Index smarter, answer sharper

Indexing is a critical component of a good RAG system. When the index is well-chunked and backed by good embeddings, your system can reliably retrieve the correct information for the model to use.

However, a smart index is not something you set and forget. As new content arrives, the index should evolve too. Even minor improvements (such as better metadata, refreshed embeddings, or reorganized chunks) can noticeably improve retrieval accuracy.

At the end of the day, sharper answers do not come from luck or bigger models. They come from indexing that is done with intent.

With Meilisearch, simplify your retrieval stack and keep responses grounded

Meilisearch supports hybrid queries, so your answers stay relevant and grounded instead of drifting off-topic. It is also built for quick updates, so you don’t need to rebuild your index whenever content changes. It is a practical way to keep RAG pipelines fast, tidy, and reliable.