Understanding hybrid search RAG for better AI answers

Learn what hybrid search RAG is, how it blends semantic and keyword search for more accurate retrieval, how it works, its challenges, how to implement it, and more.


Getting good answers from an AI model depends on how well its retrieval-augmented generation (RAG) system finds the relevant information. Hybrid search boosts the relevance of AI responses by combining keyword search with semantic understanding:

- Hybrid search retrieves results from both keyword search and semantic search. It ranks the output and sends the most relevant documents to a large language model (LLM).

- Hybrid search can improve accuracy, helps reduce AI hallucinations, and performs well across different domains.

- It keeps large language models grounded in real data, helping prevent overconfident or irrelevant answers.

- Using hybrid search tools like Meilisearch and Qdrant makes it easier to tune weights, cache queries, and manage speed effectively.

In this article, we will dive more deeply into hybrid search, its definition and use cases, and also learn how to avoid common issues, such as poor weighting or outdated embeddings, which can negatively impact performance if left unchecked.

What is hybrid search in RAG?

Hybrid search is a technique that combines two search methods: keyword search (which looks for exact words) and semantic search (which looks for meaning).

RAG is a method where an AI model retrieves relevant information from an external database or document before generating a response.

In the context of RAG, hybrid search means retrieving information with both keyword and semantic matching. Hybrid search RAG systems capture context and meaning more effectively, so they are more reliable at finding the right information than systems that rely on keyword matching or semantic matching alone.

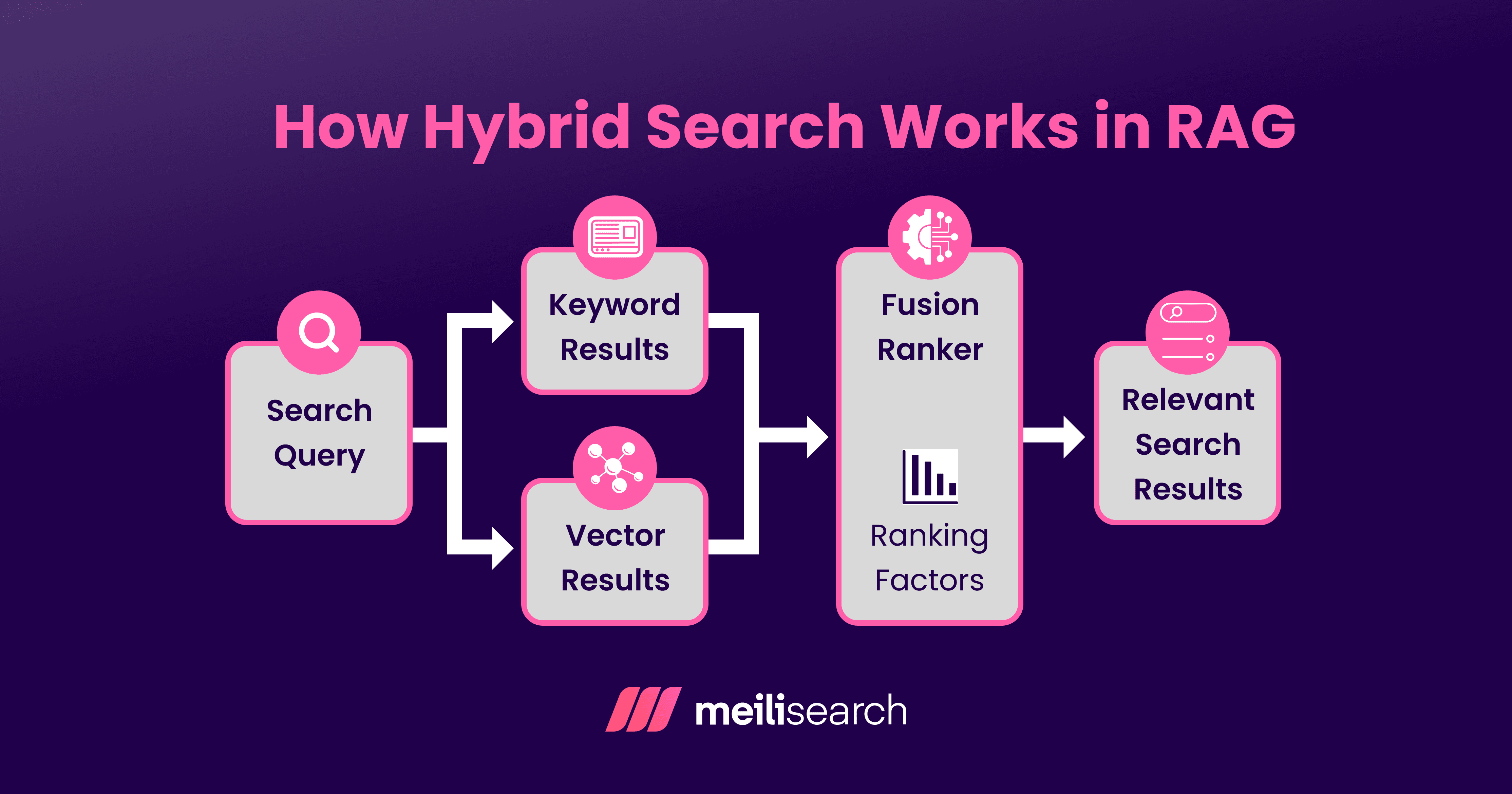

How does hybrid search work in RAG?

Hybrid search in RAG combines two methods of information retrieval: keyword search (also known as sparse search) and vector (or semantic) search.

When you type in a question, the system processes it in two different ways:

- Keyword search: The system retrieves documents that contain the words used in your query and ranks them by how well those terms match.

- Vector search: The system tries to understand your intent. It finds relevant information even when the wording does not match perfectly.

The results from both searches are then scored and combined, providing a mix of accuracy and understanding.

The RAG pipeline takes the top-ranked results and feeds them into an LLM to generate a final answer.

In short, hybrid search enables RAG systems to match queries both literally and contextually, reducing the chance that useful information is overlooked during retrieval.
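The flow above can be sketched as a small Python function. This is a minimal illustration, not any library's API: `hybrid_retrieve` and `sum_of_inverse_ranks` are hypothetical names, and the two retrievers are stand-in functions returning fixed results where a real system would call a keyword engine and a vector index.

```python
from typing import Dict, List, Tuple, Callable

Results = List[Tuple[str, float]]          # ranked (doc_id, score) pairs
Retriever = Callable[[str, int], Results]  # (query, k) -> results


def sum_of_inverse_ranks(sparse: Results, dense: Results) -> Dict[str, float]:
    """Toy fusion: every list adds 1/rank for each document it contains."""
    scores: Dict[str, float] = {}
    for results in (sparse, dense):
        for rank, (doc_id, _) in enumerate(results, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / rank
    return scores


def hybrid_retrieve(query: str, keyword_search: Retriever, vector_search: Retriever,
                    top_k: int = 10, context_k: int = 3) -> List[str]:
    """Run both searches on the same query, fuse them, keep the best IDs for the LLM."""
    sparse = keyword_search(query, top_k)  # literal term matching
    dense = vector_search(query, top_k)    # meaning-based matching
    fused = sum_of_inverse_ranks(sparse, dense)
    ranked = sorted(fused.items(), key=lambda kv: kv[1], reverse=True)
    return [doc_id for doc_id, _ in ranked[:context_k]]


# Hypothetical stand-in retrievers with fixed results
def fake_keyword_search(query: str, k: int) -> Results:
    return [("faq-12", 7.1), ("manual-3", 5.4)][:k]


def fake_vector_search(query: str, k: int) -> Results:
    return [("guide-8", 0.82), ("faq-12", 0.77)][:k]


print(hybrid_retrieve("Why is my blender making a grinding noise?",
                      fake_keyword_search, fake_vector_search, context_k=2))
# ['faq-12', 'guide-8']
```

Because `faq-12` is found by both searches, it outranks documents that only one search returned.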

What are the benefits of hybrid search for RAG?

Hybrid search in RAG brings together the best of both worlds – precision from keyword search and understanding from semantic search. This combination makes RAG systems smarter and more dependable when retrieving information.

Here are the main advantages of using hybrid search in RAG systems:

- Improved accuracy: Since hybrid search checks both the exact words and their meanings, it finds more relevant results. For example, if you ask about ‘crop yield improvement,’ it can also return documents about ‘boosting farm productivity.’

- More robust: Hybrid search works well even when queries are phrased differently. For instance, whether you type ‘how to prevent decay in plants’ or ‘crop disease control,’ it can still retrieve relevant results.

- Able to process complex queries: Hybrid search captures both context and detail, making it well suited to nuanced topics like legal, medical, or agricultural research.

- Enhanced user experience: It reduces the need for users to rephrase or refine questions, allowing them to receive faster, more accurate, and more complete answers.

What is an example of hybrid search in RAG?

Here is an example of a customer-support RAG pipeline that uses both a keyword search (such as BM25) and an embeddings-based vector search to retrieve the most relevant chunks of information about a product.

It merges and re-ranks the chunks, then passes them to an LLM to generate the answer.

Here’s how it works:

- User query: The user asks an AI model, ‘Why is my blender making a grinding noise?’

- Query dualization: The RAG system creates a sparse query for keyword match (‘grinding noise,’ ‘blender’) and an embedding vector that captures meaning (noise, mechanical failure, bearings).

- Keyword/sparse search: The system fetches the top 10 exact-text matches, such as manual sections or FAQ entries containing the words ‘grinding’ or ‘noise.’

- Vector search: Using approximate nearest neighbor (ANN) search over the embeddings, the system retrieves the top 10 semantically similar passages. These can include guides mentioning ‘grinding,’ ‘rattling,’ or ‘motor issue.’

- Merging and scoring: The system combines both result sets, removes duplicates, and scores each passage by a weighted sum of its keyword and semantic scores.

- Selecting contexts: The system then chooses the top three to five passages as the retrieval context.

- Generation: The pipeline then feeds the selected context and the user question into the LLM to produce a grounded answer. In our blender example, you would expect answers about worn bearings, including instructions on how to check them and next steps.
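The merging, scoring, and context-selection steps could look like the following sketch. The document IDs and scores are made up for the blender example, and min-max normalization is an assumed (common, but not the only) way to put keyword and semantic scores on the same 0-to-1 scale before taking the weighted sum.

```python
from typing import Dict, List, Tuple

Results = List[Tuple[str, float]]


def min_max(results: Results) -> Dict[str, float]:
    """Rescale one retriever's scores to [0, 1] so the two lists are comparable."""
    if not results:
        return {}
    scores = [s for _, s in results]
    lo, hi = min(scores), max(scores)
    if hi == lo:  # all scores equal: treat every hit as a full match
        return {doc_id: 1.0 for doc_id, _ in results}
    return {doc_id: (s - lo) / (hi - lo) for doc_id, s in results}


def merge_and_select(sparse: Results, dense: Results,
                     alpha: float = 0.5, context_k: int = 3) -> List[Tuple[str, float]]:
    """Deduplicate both result sets, score by a weighted sum, keep the top context_k.

    alpha weights the semantic score; (1 - alpha) weights the keyword score.
    A passage missing from one list gets 0 from that list.
    """
    s_norm, d_norm = min_max(sparse), min_max(dense)
    merged = {
        doc_id: (1 - alpha) * s_norm.get(doc_id, 0.0) + alpha * d_norm.get(doc_id, 0.0)
        for doc_id in set(s_norm) | set(d_norm)  # set union removes overlaps
    }
    return sorted(merged.items(), key=lambda kv: kv[1], reverse=True)[:context_k]


# Made-up scores for the blender query
sparse = [("faq-grinding", 12.0), ("manual-noise", 9.0), ("faq-warranty", 3.0)]
dense = [("guide-bearings", 0.91), ("faq-grinding", 0.84), ("guide-rattling", 0.70)]
for doc_id, score in merge_and_select(sparse, dense):
    print(f"{doc_id}: {score:.3f}")
```

With equal weights, the FAQ entry that both searches found ranks first, and the bearings guide that only the vector search found still makes the context.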

What is the difference between hybrid and vector search?

The main difference between hybrid and vector search is that hybrid search combines keyword and vector (semantic) search, while vector search relies only on semantic similarity.

Let’s compare hybrid search with the traditional search approaches used in RAG systems.

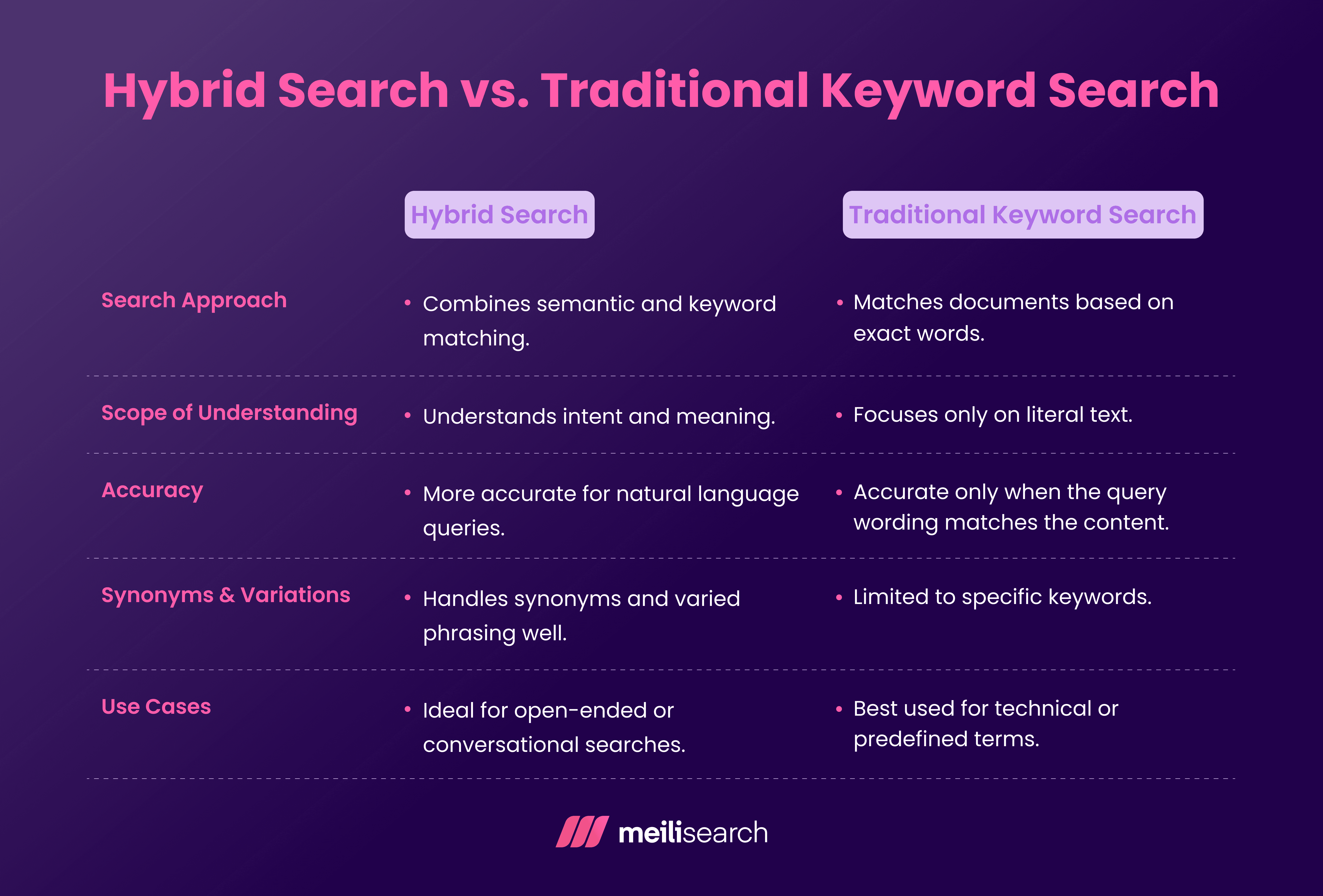

How does hybrid search compare to traditional keyword search?

Hybrid search combines meaning-based and keyword-based methods, whereas traditional keyword search relies solely on exact word matching.

Hybrid search goes beyond the literal words: it also connects related ideas and meanings. Traditional keyword search sticks to the text exactly as written.

Why is hybrid search important for large language models (LLMs)?

Hybrid search helps large language models (LLMs) receive the most relevant and meaningful information. Users do not want the LLM to lean on irrelevant context to answer a question, and hybrid search helps ensure that the context passed to the LLM from an external source is as relevant as possible.

Imagine a developer asking an AI system, ‘How to fix memory leaks in Python?’

A pure keyword search might only find pages that literally mention ‘memory leaks,’ while a vector (semantic) search might return loosely related content about ‘performance optimization.’

Hybrid search combines both. It captures the exact keywords and the broader meaning behind the query, so the LLM receives relevant guides, code snippets, and explanations about debugging and garbage collection in Python.

By doing so, hybrid search helps LLMs provide more precise and accurate answers.
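To make this concrete, here is a minimal sketch of how retrieved passages might be packed into a grounded prompt. The `build_grounded_prompt` helper, its instruction wording, and the two sample passages are hypothetical; the resulting string would be sent to whichever LLM your pipeline uses.

```python
from typing import List, Tuple


def build_grounded_prompt(question: str, passages: List[Tuple[str, str]]) -> str:
    """Pack ranked (source_title, text) passages and the question into one prompt."""
    sources = "\n".join(
        f"[{i}] {title}: {text}" for i, (title, text) in enumerate(passages, start=1)
    )
    return (
        "Answer the question using only the numbered sources below. "
        "Cite sources like [1]. If they do not contain the answer, say so.\n\n"
        f"Sources:\n{sources}\n\n"
        f"Question: {question}\nAnswer:"
    )


# Hypothetical passages that hybrid search might return for the Python query
passages = [
    ("tracemalloc guide",
     "Compare tracemalloc snapshots to find the lines whose allocations keep growing."),
    ("gc module notes",
     "The gc module collects reference cycles; gc.get_objects() lists the objects it tracks."),
]
print(build_grounded_prompt("How do I fix memory leaks in Python?", passages))
```

Numbering the sources lets the model cite them, which makes it easier to check that an answer is grounded in the retrieved context.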

How to implement hybrid search in RAG pipelines

In this walkthrough, you will learn how to implement hybrid search in a RAG pipeline using Meilisearch.

We will implement keyword search with Meilisearch, in the role of a BM25-style sparse retriever, and semantic search using FAISS and Sentence Transformers.

We will build the retrieval core of a simple recipe assistant chatbot over a small set of sample recipe documents.

Let’s get started!

1. Setting up Meilisearch

If you do not have Meilisearch installed yet, install it with:

```bash
# Install Meilisearch
curl -L https://install.meilisearch.com | sh
```

Spin up the server with:

```bash
# Launch Meilisearch
./meilisearch --master-key="aSampleMasterKey"
```

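We recommend creating a .env file to store the credentials. Create one and add MEILI_URL and MEILI_KEY (the script falls back to http://127.0.0.1:7700 and aSampleMasterKey if they are missing), plus OPENAI_API_KEY.

Note: The demo below composes its final answer directly from the retrieved passages, so the OpenAI API key is only needed if you extend it to send the context to an OpenAI model for generation.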

2. Install and load the necessary libraries

Install the following Python libraries with the command:

```bash
pip install python-dotenv meilisearch faiss-cpu sentence-transformers numpy
```

In a Python file, load the libraries and environment variables.

```python
from __future__ import annotations

import os
import time
import textwrap
from dataclasses import dataclass
from typing import Dict, List, Sequence, Tuple

import numpy as np
from dotenv import load_dotenv
import meilisearch
from sentence_transformers import SentenceTransformer

# ---------------------------------------------------------------------
# Config
# ---------------------------------------------------------------------
load_dotenv()
MEILI_URL = os.getenv("MEILI_URL", "http://127.0.0.1:7700")
MEILI_KEY = os.getenv("MEILI_KEY", "aSampleMasterKey")
MEILI_INDEX = os.getenv("MEILI_INDEX", "hybrid_rag_food_demo")
EMBED_MODEL = os.getenv("EMBED_MODEL", "sentence-transformers/all-MiniLM-L6-v2")
```

3. Creating our sample documents

To simulate external resources for the LLM, let’s create a simple Document dataclass and a demo_docs() function that returns seven recipes. Each recipe has an ID, a title, and content.

```python
@dataclass
class Document:
    id: str
    title: str
    content: str


def demo_docs() -> List[Document]:
    # Query we will use: "Quick high-protein breakfast without cooking"
    return [
        Document("D1", "Greek Yogurt Parfait",
                 "Quick high protein breakfast without cooking. Layer Greek yogurt with berries and nuts; ready in minutes."),
        Document("D2", "Overnight Oats",
                 "Quick breakfast without cooking: soak rolled oats in milk overnight with chia seeds; fiber-rich and customizable."),
        Document("D3", "Egg Scramble",
                 "Warm breakfast: whisk eggs with veggies and cook in a pan. Protein from eggs; takes 5–7 minutes."),
        Document("D4", "Protein Smoothie",
                 "Quick high protein breakfast without cooking: blend milk, banana, peanut butter, and protein powder."),
        Document("D5", "Avocado Toast",
                 "Toast bread and top with mashed avocado, lemon, and salt. Healthy fats; toasting required."),
        Document("D6", "Cottage Cheese Bowl",
                 "Quick high protein breakfast without cooking: cottage cheese with pineapple or cucumber; add seeds for crunch."),
        Document("D7", "Tuna Breakfast Wrap",
                 "Protein-rich, ready-to-eat wrap with canned tuna and Greek yogurt. Cold prep, portable breakfast option; no heating needed."),
    ]
```

Next, we’ll set up Meilisearch for keyword retrieval.

4. Implementing BM25 retrieval with Meilisearch

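A MeiliBM25 class uses Meilisearch as the sparse, keyword-based retriever. Strictly speaking, Meilisearch does not rank with BM25: it applies its own ranking rules (such as words, typo, proximity, and exactness) and does not return BM25 scores, so in this demo it plays the role of the BM25 retriever.

The search() method runs the keyword query and returns ranked results as (doc_id, score) tuples, using 1/rank as a score proxy. This captures exact keyword matches that semantic embeddings might miss.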

```python
class MeiliBM25:
    """Keyword (sparse) retriever backed by a Meilisearch index."""

    def __init__(self, url: str, key: str, index_name: str):
        self.url = url
        self.client = meilisearch.Client(url, key)
        self.index_name = index_name
        self.index = None
        self._wait_ready()

    def _wait_ready(self, tries: int = 30):
        # Poll the health endpoint until the server answers
        for _ in range(tries):
            try:
                self.client.health()
                return
            except Exception:
                time.sleep(0.2)
        raise ConnectionError(f"Meilisearch not reachable at {self.url}")

    @staticmethod
    def _wait_task(client: meilisearch.Client, task_obj) -> None:
        # Meilisearch writes are asynchronous; block until the task finishes
        if hasattr(task_obj, "task_uid"):
            client.wait_for_task(task_obj.task_uid)
        elif isinstance(task_obj, dict) and "taskUid" in task_obj:
            client.wait_for_task(task_obj["taskUid"])

    def recreate_clean_index(self) -> None:
        try:
            t = self.client.delete_index(self.index_name)
            self._wait_task(self.client, t)
        except meilisearch.errors.MeilisearchApiError:
            pass

    def ensure_index(self) -> None:
        try:
            self.index = self.client.get_index(self.index_name)
        except meilisearch.errors.MeilisearchApiError as err:
            if getattr(err, "code", "") != "index_not_found":
                raise
            t = self.client.create_index(self.index_name, {"primaryKey": "id"})
            self._wait_task(self.client, t)
            self.index = self.client.get_index(self.index_name)
        t1 = self.index.update_searchable_attributes(["title", "content"])
        t2 = self.index.update_displayed_attributes(["id", "title", "content"])
        self._wait_task(self.client, t1)
        self._wait_task(self.client, t2)

    def add(self, docs: Sequence[Document]) -> None:
        payload = [doc.__dict__ for doc in docs]
        t = self.index.add_documents(payload)
        self._wait_task(self.client, t)

    def search(self, query: str, limit: int) -> List[Tuple[str, float]]:
        hits = self.index.search(query, {"limit": limit}).get("hits", [])
        # Use inverse rank as a proxy BM25 score (for demo purposes)
        return [(h["id"], 1.0 / rank) for rank, h in enumerate(hits, start=1)]
```

5. Adding semantic retrieval with Sentence Transformers and FAISS

Next, we convert each document into a vector and store it in a FAISS index. The DenseFAISS class uses a SentenceTransformer model to create vector embeddings and keeps the document IDs alongside a FAISS index. FAISS (Facebook AI Similarity Search) is Meta's library for fast similarity search over dense vectors; the IndexFlatIP used here performs exact inner-product search, which is fine for seven documents. The search() method embeds the query, finds the most similar document vectors, and returns (doc_id, score) tuples. This approach identifies conceptually related documents even when the exact keywords are not present.

```python
class DenseFAISS:
    """Dense (semantic) retriever: Sentence Transformers embeddings in a FAISS index."""

    def __init__(self, model_name: str):
        self.model = SentenceTransformer(model_name)
        self.id_map: List[str] = []  # FAISS row position -> document ID
        self.index = None  # faiss.IndexFlatIP

    def _embed(self, texts: Sequence[str]) -> np.ndarray:
        # Unit-length embeddings, so inner product equals cosine similarity
        vecs = self.model.encode(list(texts), batch_size=64,
                                 show_progress_bar=False, normalize_embeddings=True)
        return np.asarray(vecs, dtype=np.float32)

    def build(self, docs: Sequence[Document]) -> None:
        import faiss
        self.id_map = [d.id for d in docs]
        mat = self._embed([f"{d.title}. {d.content}" for d in docs])
        self.index = faiss.IndexFlatIP(mat.shape[1])  # exact inner-product search
        self.index.add(mat)

    def search(self, query: str, top_k: int) -> List[Tuple[str, float]]:
        q = self._embed([query])
        sims, idxs = self.index.search(q, top_k)
        # FAISS pads results with -1 when fewer than top_k vectors exist
        return [(self.id_map[i], float(s))
                for s, i in zip(sims[0].tolist(), idxs[0].tolist()) if i != -1]
```
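Why an inner-product index? `DenseFAISS` asks Sentence Transformers for unit-length vectors (`normalize_embeddings=True`), and for unit vectors the inner product equals cosine similarity. The short check below illustrates this with random NumPy vectors standing in for real embeddings (384 dimensions, the output size of all-MiniLM-L6-v2).

```python
import numpy as np

rng = np.random.default_rng(0)
a = rng.normal(size=384)  # stand-ins for two 384-dim sentence embeddings
b = rng.normal(size=384)

# Cosine similarity of the raw vectors
cosine = float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

# What normalize_embeddings=True does: rescale each vector to unit length
a_unit = a / np.linalg.norm(a)
b_unit = b / np.linalg.norm(b)
inner_product = float(a_unit @ b_unit)  # the score IndexFlatIP returns

print(np.isclose(cosine, inner_product))  # True
```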

6. Combining results with Reciprocal Rank Fusion (RRF)

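Hybrid search combines the results of sparse and dense search. We will use Reciprocal Rank Fusion (RRF) to merge the two result lists. RRF is a simple formula that rewards documents ranked highly in either list. Because it uses only each document's rank position, it sidesteps the fact that the BM25 proxy scores and the cosine similarities are on different scales.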

```python
def rrf_scores(sparse: List[Tuple[str, float]],
               dense: List[Tuple[str, float]],
               k: int = 60) -> Dict[str, float]:
    # Only rank positions matter; the raw scores are ignored
    s_ranks = {doc_id: i + 1 for i, (doc_id, _) in enumerate(sparse)}
    d_ranks = {doc_id: i + 1 for i, (doc_id, _) in enumerate(dense)}
    all_ids = set(s_ranks) | set(d_ranks)
    fused = {}
    for doc_id in all_ids:
        # A document missing from a list gets a huge rank, so that term is ~0
        s_term = 1.0 / (k + s_ranks.get(doc_id, 10**9))
        d_term = 1.0 / (k + d_ranks.get(doc_id, 10**9))
        fused[doc_id] = s_term + d_term
    return fused
```
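Written out, `rrf_scores` computes the formula below for each document d, with k = 60. The worked example uses hypothetical ranks: a document ranked 1st by BM25 and 3rd by the dense search, versus one ranked 1st by the dense search only (a missing rank counts as 10^9 in the code, so its term is effectively zero).

```latex
\mathrm{RRF}(d) = \frac{1}{k + r_{\text{sparse}}(d)} + \frac{1}{k + r_{\text{dense}}(d)}, \qquad k = 60

\text{In both lists: } \frac{1}{60 + 1} + \frac{1}{60 + 3} \approx 0.016393 + 0.015873 = 0.032266

\text{Dense only: } \frac{1}{60 + 1} + \frac{1}{60 + 10^{9}} \approx 0.016393
```

Appearing in both lists roughly doubles a document's score, which is why RRF favors results that both retrievers agree on.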

7. Bringing it all together

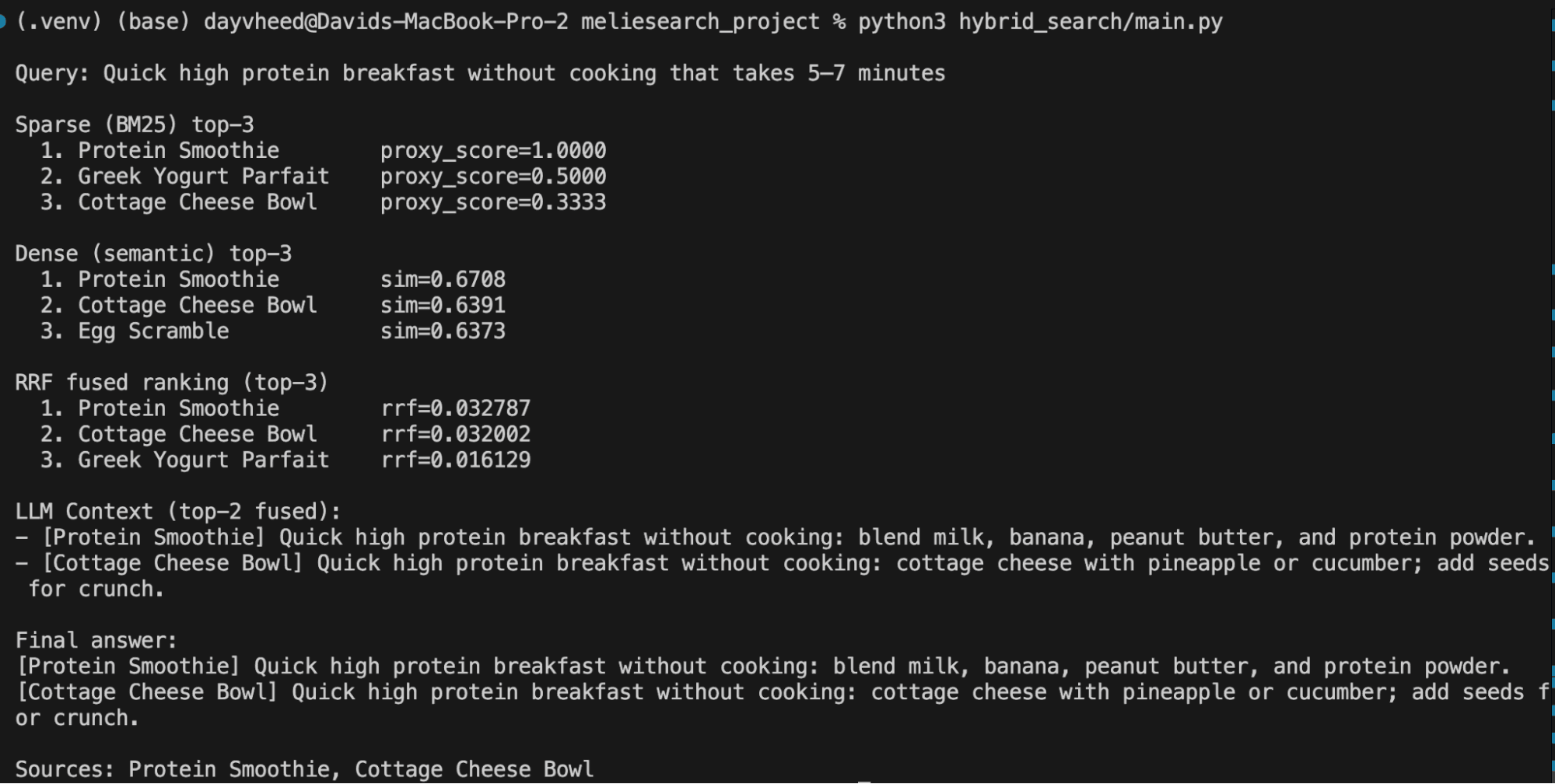

Now, we can test everything. We will query ‘Quick high protein breakfast without cooking that takes 5–7 minutes’ and print the BM25 and semantic results, then the RRF-fused ranking and the final context.

```python
TOP_K_RETRIEVE = 3  # show top-k for sparse, dense, and fused
CONTEXT_K = 2       # how many fused docs go into the final answer


def main():
    docs = demo_docs()
    id2doc = {d.id: d for d in docs}

    # Use a keyword-normalized query for BM25 (no hyphen), and the same text for dense
    query = "Quick high protein breakfast without cooking that takes 5–7 minutes"

    # Sparse (BM25)
    bm25 = MeiliBM25(MEILI_URL, MEILI_KEY, MEILI_INDEX)
    bm25.recreate_clean_index()  # clean slate so demos are deterministic
    bm25.ensure_index()
    bm25.add(docs)

    # Dense (FAISS)
    dense = DenseFAISS(EMBED_MODEL)
    dense.build(docs)

    # Retrieve independently (consistent TOP_K_RETRIEVE)
    sparse_res = bm25.search(query, limit=TOP_K_RETRIEVE)
    dense_res = dense.search(query, top_k=TOP_K_RETRIEVE)

    print()
    print("Query:", query)
    print(f"\nSparse (BM25) top-{TOP_K_RETRIEVE}")
    for i, (doc_id, s) in enumerate(sparse_res, 1):
        print(f"  {i}. {id2doc[doc_id].title:22s} proxy_score={s:.4f}")
    print(f"\nDense (semantic) top-{TOP_K_RETRIEVE}")
    for i, (doc_id, s) in enumerate(dense_res, 1):
        print(f"  {i}. {id2doc[doc_id].title:22s} sim={s:.4f}")
    print()

    # RRF fusion (also show TOP_K_RETRIEVE)
    fused = rrf_scores(sparse_res, dense_res, k=60)
    fused_sorted = sorted(fused.items(), key=lambda x: x[1], reverse=True)
    fused_top = fused_sorted[:TOP_K_RETRIEVE]
    print(f"RRF fused ranking (top-{TOP_K_RETRIEVE})")
    for i, (doc_id, score) in enumerate(fused_top, 1):
        print(f"  {i}. {id2doc[doc_id].title:22s} rrf={score:.6f}")
    print()

    # LLM context from fused
    top_ids = [doc_id for doc_id, _ in fused_top][:CONTEXT_K]
    print(f"LLM Context (top-{CONTEXT_K} fused):")
    for d_id in top_ids:
        d = id2doc[d_id]
        # Print a fixed-length snippet
        snippet = d.content[:160] + ("..." if len(d.content) > 160 else "")
        print(f"- [{d.title}] {snippet}")
    print()

    # Final grounded answer (simple extractive composition; no LLM call here)
    print("Final answer:")
    for d_id in top_ids:
        d = id2doc[d_id]
        snippet = d.content[:180] + ("..." if len(d.content) > 180 else "")
        print(f"[{d.title}] {snippet}")
    print("\nSources:", ", ".join(id2doc[i].title for i in top_ids))


if __name__ == "__main__":
    main()
```

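Running the script prints the sparse and dense top-three lists, the RRF-fused ranking, the LLM context, and the final answer.

The sparse and dense search methods can return different results. RRF combines their rankings into its own top three, and the top two fused documents become the context. In this demo, the final answer is assembled extractively from those two passages; in a full RAG pipeline, they would be passed to the LLM together with the question.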

What are the challenges of hybrid search in RAG?

While hybrid search enhances the intelligence and accuracy of RAG systems, it is not without its limitations.

Let’s examine some of the typical challenges and explore a few simple ways to overcome them.

1. Ranking and scoring results

One of the most significant problems in hybrid search is deciding how to combine keyword scores and semantic similarity scores. They are measured on different scales (for example, unbounded BM25 scores versus cosine similarities), so balancing them can be tricky.

If the balance is off, relevant results may rank too low for users to see them, or irrelevant results may rank too high.

To fix this, consider normalizing both scores to a common range and using weighted scoring (for example, 60% semantic and 40% keyword), then test it with real queries.

Regular RAG evaluations help fine-tune these weights for your specific use case.
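One way to run those evaluations is a small grid search over the semantic weight. The sketch below is illustrative: `alpha` is the weight on the semantic score (the 60/40 split above corresponds to alpha = 0.6), scores are min-max normalized per query, mean reciprocal rank (MRR) is an assumed choice of metric, and the two labeled queries are invented.

```python
from typing import Dict, List, Tuple

Results = List[Tuple[str, float]]


def normalize(results: Results) -> Dict[str, float]:
    """Min-max scale one retriever's scores to [0, 1]."""
    if not results:
        return {}
    scores = [s for _, s in results]
    lo, hi = min(scores), max(scores)
    if hi == lo:
        return {doc_id: 1.0 for doc_id, _ in results}
    return {doc_id: (s - lo) / (hi - lo) for doc_id, s in results}


def fused_ranking(sparse: Results, dense: Results, alpha: float) -> List[str]:
    """Doc IDs sorted by (1 - alpha) * keyword score + alpha * semantic score."""
    s, d = normalize(sparse), normalize(dense)
    return sorted(set(s) | set(d),
                  key=lambda i: (1 - alpha) * s.get(i, 0.0) + alpha * d.get(i, 0.0),
                  reverse=True)


def mean_reciprocal_rank(labeled, alpha: float) -> float:
    """Average 1/rank of each query's known relevant document (0 if missing)."""
    total = 0.0
    for sparse, dense, relevant in labeled:
        ranking = fused_ranking(sparse, dense, alpha)
        if relevant in ranking:
            total += 1.0 / (ranking.index(relevant) + 1)
    return total / len(labeled)


def best_alpha(labeled, grid=(0.0, 0.2, 0.4, 0.6, 0.8, 1.0)) -> float:
    """Semantic weight with the highest MRR on the labeled queries."""
    return max(grid, key=lambda a: mean_reciprocal_rank(labeled, a))


# Invented labeled data: (keyword results, semantic results, relevant doc ID)
labeled = [
    ([("A", 10.0), ("B", 2.0)], [("B", 0.90), ("A", 0.50)], "A"),             # keyword wins
    ([("D", 8.0), ("C", 4.0), ("E", 0.0)], [("C", 0.95), ("D", 0.40)], "C"),  # semantic helps
]
print(best_alpha(labeled))  # 0.4
```

On this toy set, neither pure keyword (alpha = 0) nor pure semantic (alpha = 1) ranks both relevant documents first; a blend does. With real data, use many more labeled queries than two.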

2. Latency and performance issues

Running two types of searches (keyword and vector) together naturally takes more time. This can slow down response times, especially with large datasets.

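To manage this, use RAG indexing strategies that speed up retrieval, such as approximate nearest neighbor (ANN) search for vectors, and cache results for frequent queries.

Also, run the keyword and vector searches in parallel rather than one after the other, and distribute the load across multiple servers if needed. With parallel execution, hybrid retrieval takes roughly as long as the slower of the two searches plus the fusion step, so a well-designed system can make hybrid search nearly as fast as a single search type.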
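The sketch below illustrates two of these ideas with Python's standard library: both searches run concurrently in a thread pool, and `functools.lru_cache` memoizes results for repeated queries. The `keyword_search` and `vector_search` functions are hypothetical stand-ins that sleep to simulate network latency.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from functools import lru_cache
from typing import List, Tuple

Results = List[Tuple[str, float]]


def keyword_search(query: str) -> Results:
    time.sleep(0.2)  # hypothetical: simulated keyword-engine round trip
    return [("doc-1", 3.2), ("doc-2", 1.1)]


def vector_search(query: str) -> Results:
    time.sleep(0.2)  # hypothetical: simulated ANN vector lookup
    return [("doc-2", 0.88), ("doc-3", 0.75)]


_pool = ThreadPoolExecutor(max_workers=2)


@lru_cache(maxsize=1024)  # repeated queries skip both searches entirely
def hybrid_candidates(query: str) -> Tuple[tuple, tuple]:
    # Submit both searches at once: latency is about the slower one, not the sum
    sparse = _pool.submit(keyword_search, query)
    dense = _pool.submit(vector_search, query)
    # Tuples keep cached results immutable
    return tuple(sparse.result()), tuple(dense.result())


for attempt in (1, 2):
    start = time.perf_counter()
    hybrid_candidates("reset my password")
    print(f"call {attempt}: {time.perf_counter() - start:.3f}s")
```

The first call takes about 0.2 s instead of 0.4 s, and the repeated call returns from the cache almost immediately. In production, a shared cache (rather than a per-process `lru_cache`) and an expiry policy would typically be needed.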

3. Implementation complexity

Setting up hybrid search is not a plug-and-play process. It typically involves integrating two systems: a keyword engine and a vector index or database (such as the FAISS library or Pinecone). Managing pipelines, keeping both indexes consistent, and merging results correctly can feel overwhelming.

The solution is to start simple. Test both search methods separately, then integrate step by step.

Using platforms like Meilisearch that support both search types natively can also reduce headaches.

4. Data storage overhead

Hybrid search requires storing both text indexes and vector embeddings, which can quickly increase storage needs. This can become costly as your data grows.

To handle it effectively, ensure you compress embeddings, remove redundant data, and use a cloud storage service that scales dynamically. Regularly removing unused or outdated documents helps to keep the system efficient.

You could also use embedding models with smaller vector sizes to reduce memory usage where applicable.
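As an illustration of compressing embeddings, here is a minimal scalar-quantization sketch in NumPy that stores each float32 component as an int8 code plus one float32 scale per dimension, cutting vector storage roughly fourfold. It is one technique among several (libraries such as FAISS also provide quantized index types), and the random unit vectors stand in for real embeddings.

```python
import numpy as np


def quantize_int8(vectors: np.ndarray):
    """Symmetric per-dimension scalar quantization: float32 -> int8 codes + scales."""
    scale = np.abs(vectors).max(axis=0) / 127.0
    scale[scale == 0] = 1.0  # avoid dividing by zero for all-zero dimensions
    codes = np.clip(np.round(vectors / scale), -127, 127).astype(np.int8)
    return codes, scale.astype(np.float32)


def dequantize(codes: np.ndarray, scale: np.ndarray) -> np.ndarray:
    return codes.astype(np.float32) * scale


rng = np.random.default_rng(42)
emb = rng.normal(size=(1000, 384)).astype(np.float32)
emb /= np.linalg.norm(emb, axis=1, keepdims=True)  # unit vectors, as in the demo

codes, scale = quantize_int8(emb)
print(emb.nbytes, codes.nbytes)  # 1536000 384000

# Cosine similarity between each original vector and its reconstruction
approx = dequantize(codes, scale)
cos = np.sum(emb * approx, axis=1) / np.linalg.norm(approx, axis=1)
print(float(cos.min()))
```

The reconstructed vectors stay very close to the originals, so similarity rankings change little while the vector data shrinks to a quarter of its size (plus one small array of scales).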

How do you evaluate hybrid search performance?

Evaluating hybrid search performance involves assessing how effectively a system that combines vector and keyword search retrieves accurate results.

Several key metrics are used for evaluation. They include:

- Relevance: Measures how closely the returned results align with the user's intended search.

- Recall: Checks how many of the right results were found. For example, if ten articles truly contain the answer and the search finds only six of them, recall is 60%.

- Precision: Measures how many of the returned results are actually correct. For example, the search returns ten articles, but only three of them actually contain the answer. In this case, precision would be 30%.

- Latency: Indicates how quickly the system responds, which directly impacts the user experience.

To test a hybrid search retriever’s quality in a RAG setup, create a set of test queries, each labeled with the documents that are actually relevant to it.

Combining both retrieval metrics and end-to-end response quality provides a complete picture of performance.
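The precision and recall definitions above translate directly into code. This sketch assumes a labeled test set of `(query, relevant_ids)` pairs and a `retrieve` function returning ranked document IDs; both are hypothetical placeholders for your own pipeline, and latency is measured with `time.perf_counter`.

```python
import time
from typing import Callable, Dict, List, Sequence, Set, Tuple


def precision_at_k(retrieved: Sequence[str], relevant: Set[str], k: int) -> float:
    """Share of the returned top-k results that are relevant."""
    top = list(retrieved)[:k]
    return sum(doc in relevant for doc in top) / len(top) if top else 0.0


def recall_at_k(retrieved: Sequence[str], relevant: Set[str], k: int) -> float:
    """Share of all relevant documents that appear in the top-k results."""
    if not relevant:
        return 0.0
    return len(set(list(retrieved)[:k]) & relevant) / len(relevant)


def evaluate(retrieve: Callable[[str], List[str]],
             test_set: List[Tuple[str, Set[str]]], k: int = 10) -> Dict[str, float]:
    """Average precision@k, recall@k, and latency (seconds) over a labeled test set."""
    p = r = secs = 0.0
    for query, relevant in test_set:
        start = time.perf_counter()
        results = retrieve(query)
        secs += time.perf_counter() - start
        p += precision_at_k(results, relevant, k)
        r += recall_at_k(results, relevant, k)
    n = len(test_set)
    return {"precision": p / n, "recall": r / n, "latency_s": secs / n}


# The article's examples: 10 truly relevant articles, 6 of them found
relevant = {f"a{i}" for i in range(10)}
found_six = [f"a{i}" for i in range(6)] + ["x1", "x2", "x3", "x4"]
print(recall_at_k(found_six, relevant, k=10))      # 0.6
# 10 results returned, only 3 contain the answer
three_hits = ["a0", "a1", "a2"] + [f"y{i}" for i in range(7)]
print(precision_at_k(three_hits, relevant, k=10))  # 0.3
```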

What are the best practices for optimizing hybrid search in RAG?

Optimizing hybrid search in a RAG system is about getting your retriever to deliver results that are both fast and accurate. When done right, it can make a huge difference in how well your model understands and responds.

Here’s how you get there:

- Leverage external tools: A tool such as Meilisearch is built for speed and keyword accuracy. When paired with a vector engine, it creates a strong hybrid setup that is fast, scalable, and effective for production RAG systems.

- Fine-tune vector and keyword scores: Adjusting the balance between keyword and vector search helps you get both context and precision.

- Clean and normalize data: Messy data slows things down and confuses your retriever. Try to keep your text consistent, remove duplicates, and clearly structure the metadata.

- Refresh embeddings: Re-embedding new or changed content, and retraining or switching the embedding model when needed, keeps the system aligned with current language use and domain-specific terms.

- Cache and batch: Caching frequent queries and batching embeddings or API calls can significantly reduce latency, especially in production environments.
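For the ‘clean and normalize data’ step, a minimal sketch might apply one normalization function at both indexing and query time and drop documents that become identical after normalization. The specific rules here (Unicode NFKC, removing soft hyphens, collapsing whitespace, case-insensitive comparison) are assumptions; pick the ones that match your data.

```python
import re
import unicodedata
from typing import Iterable, List


def normalize_text(text: str) -> str:
    """Cleanup applied identically at index time and at query time."""
    text = unicodedata.normalize("NFKC", text)  # e.g. full-width letters -> ASCII
    text = text.replace("\u00ad", "")           # soft hyphens from PDF extraction
    text = re.sub(r"\s+", " ", text)            # collapse runs of whitespace
    return text.strip()


def dedupe(docs: Iterable[str]) -> List[str]:
    """Normalize documents and keep only the first of any case-insensitive duplicates."""
    seen = set()
    unique: List[str] = []
    for doc in docs:
        clean = normalize_text(doc)
        key = clean.casefold()
        if key not in seen:
            seen.add(key)
            unique.append(clean)
    return unique


print(dedupe(["Overnight  Oats\n", "overnight oats", "\uff30rotein Smoothie"]))
# ['Overnight Oats', 'Protein Smoothie']
```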

Now, let’s see some scenarios where you would use hybrid search.

When should you use hybrid search in RAG?

You should use hybrid search in a RAG system when you need both semantic understanding and keyword precision to retrieve the most useful results.

It is especially valuable when dealing with mixed content, for example, a corpus that combines technical documents, FAQs, and research reports.

In industries like healthcare, it helps match patients' symptoms to treatment protocols even when wording differs.

In finance, it ensures that crucial keywords such as ‘interest rate’ and ‘risk’ are not overlooked.

For legal or research-intensive domains, it is ideal for retrieving documents where both phrasing and context are essential.

In short, use hybrid search when accuracy and understanding are equally important, that is, when retrieval depends both on the exact words in your data and on their meaning.

What are the common mistakes to avoid with hybrid search in RAG?

Hybrid search in RAG can be powerful, but it is easy to get it wrong if the setup is not well thought-out.

Here are some common mistakes to watch out for:

- Poor weighting between vector and keyword scores: If you weight one method too heavily, you will either lose context or miss exact matches. Test and adjust the weighting until you find the right mix.

- Ignoring latency: A slow retriever can significantly impact user experience. Use caching, batching, and efficient search engines to ensure responses are quick.

- Misconfigured indices: Forgetting to index metadata or using inconsistent text normalization can affect the relevance of search results. Always review your indexing settings.

- Using outdated embeddings: Terminology and content change over time, so embeddings computed from old text or with an older model drift out of date. Regularly re-embed changed content, and re-embed everything when you switch models, because vectors from different models are not comparable.

- Skipping RAG evaluation: Do not just assume the hybrid search works. Track precision, recall, and response quality over time.
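To catch outdated embeddings, one approach (an assumption, not a feature of any particular vector database) is to store, next to each vector, a hash of the exact text that was embedded and the name of the model that embedded it. The hypothetical `stale_ids` helper then lists documents that are new, edited, or embedded with a different model; `model-a` and `model-b` are placeholder model names.

```python
import hashlib
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class StoredEmbedding:
    content_hash: str   # SHA-256 of the exact text that was embedded
    model_name: str     # embedding model that produced the vector
    vector: List[float]


def content_hash(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def stale_ids(docs: Dict[str, str], store: Dict[str, StoredEmbedding],
              current_model: str) -> List[str]:
    """IDs of documents that are new, edited, or embedded with another model."""
    stale = []
    for doc_id, text in docs.items():
        rec = store.get(doc_id)
        if (rec is None
                or rec.content_hash != content_hash(text)
                or rec.model_name != current_model):
            stale.append(doc_id)
    return sorted(stale)


docs = {"D1": "Greek yogurt parfait", "D2": "Overnight oats with chia", "D3": "Egg scramble"}
store = {
    "D1": StoredEmbedding(content_hash("Greek yogurt parfait"), "model-a", [0.1, 0.2]),
    "D2": StoredEmbedding(content_hash("Overnight oats"), "model-a", [0.3, 0.4]),  # text edited since
}
print(stale_ids(docs, store, current_model="model-a"))  # ['D2', 'D3']
```

Switching `current_model` to a new model name marks every document as stale, which matches the rule that vectors from different models should not be mixed in one index.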

Frequently Asked Questions (FAQs)

Here are some frequently asked questions and answers about hybrid search.

Which algorithms are used for hybrid search?

Hybrid search typically combines BM25 for sparse (keyword-based) retrieval with embeddings from models such as Sentence Transformers or OpenAI embeddings for dense (semantic) retrieval. BM25 matches exact terms, while embeddings capture meaning. The system then merges or re-ranks results from both methods to deliver the most relevant answers.
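To show what ‘BM25 matches exact terms’ means in practice, here is a compact, from-scratch Okapi BM25 scorer. It is a teaching sketch: tokenization is plain lowercase whitespace splitting, and k1 = 1.2 and b = 0.75 are the defaults commonly used by Lucene-based engines such as Elasticsearch. Production engines add stemming, stop words, and inverted indexes.

```python
import math
from collections import Counter
from typing import Dict


def bm25_scores(query: str, docs: Dict[str, str],
                k1: float = 1.2, b: float = 0.75) -> Dict[str, float]:
    """Okapi BM25 score of every document for the query."""
    tokenized = {doc_id: text.lower().split() for doc_id, text in docs.items()}
    n_docs = len(tokenized)
    avgdl = sum(len(toks) for toks in tokenized.values()) / n_docs
    # Document frequency: how many documents contain each term
    df = Counter(term for toks in tokenized.values() for term in set(toks))

    scores: Dict[str, float] = {}
    for doc_id, toks in tokenized.items():
        tf = Counter(toks)
        score = 0.0
        for term in query.lower().split():
            if term not in tf:
                continue  # no exact match, no contribution
            # Rarer terms get a larger inverse document frequency (IDF)
            idf = math.log(1 + (n_docs - df[term] + 0.5) / (df[term] + 0.5))
            # Term-frequency saturation (k1) and document-length normalization (b)
            norm = tf[term] + k1 * (1 - b + b * len(toks) / avgdl)
            score += idf * tf[term] * (k1 + 1) / norm
        scores[doc_id] = score
    return scores


docs = {
    "D1": "blender makes a grinding noise when crushing ice",
    "D2": "blender smoothie recipes",
    "D3": "how to replace worn motor bearings",
}
print(bm25_scores("grinding noise", docs))
```

D3 scores 0.0 even though worn bearings are a likely cause of the noise, because it shares no words with the query. That gap is exactly what the dense (semantic) side of hybrid search fills.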

What are common frameworks for hybrid search in RAG?

Common frameworks for hybrid search in RAG include LangChain, LlamaIndex, and Haystack. LangChain lets you easily combine vector and keyword retrievers in custom pipelines. LlamaIndex integrates structured and unstructured data for better retrieval. Haystack provides built-in support for hybrid retrievers, allowing flexible ranking and evaluation across multiple data sources.

How is Reciprocal Rank Fusion used in hybrid search?

Reciprocal rank fusion (RRF) is a simple method for combining results from several searches. Each document receives 1/(k + rank) from every result list it appears in, where rank is its position in that list and k is a constant (commonly 60). The higher a document ranks, the bigger its score, so documents that rank well in both the keyword and vector searches rise to the top of the single, merged result list.

Does Qdrant support hybrid search for RAG?

Yes, Qdrant supports hybrid search for RAG. It lets you store sparse vectors (keyword-style) and dense embeddings (semantic-style) in the same collection and combine their results using either fusion or reranking. Its built-in Query API can run both retrieval types and merge them in a single request.

How does hybrid retrieval differ from hybrid search?

Hybrid search combines keyword and vector methods to find relevant documents. Hybrid retrieval merges results from various retrievers or data sources within a RAG pipeline. Hybrid retrieval determines how the data is gathered, while hybrid search determines how it is ranked.

Building reliable AI systems with hybrid search RAG

Building reliable AI systems with hybrid search in RAG is all about striking a balance between trust and performance. This balance enables more accurate, context-aware, and grounded responses, informed by real data. It also helps reduce hallucinations, one of the biggest challenges in generative AI.

Meilisearch simplifies hybrid search RAG implementation and orchestration

Meilisearch makes setting up hybrid search in RAG systems straightforward. It is fast, easy to integrate, scalable, and works well with vector databases, allowing you to combine keyword precision and semantic search without the need for complicated setup or maintenance.