Speculative RAG: A faster approach to retrieval-augmented generation

Discover how speculative RAG improves traditional RAG with faster drafts, smarter retrieval, and better performance for advanced AI workflows.


Speculative retrieval-augmented generation (RAG) is a smarter way to retrieve information from knowledge bases and generate responses.

It is a bit like giving your AI system a head start. Rather than waiting to find all the information before writing an answer, the AI system begins drafting answers while searching for more details.

This makes it faster than standard RAG, which first retrieves all the information it needs before generating a response.

Here is a summary of what you’ll learn in this article:

- What speculative RAG is and how it works

- How it’s different from traditional RAG systems

- Its key benefits and limitations

- Why drafting is important in speculative RAG

- Specific use cases for speculative RAG

- How to implement speculative RAG, and more

Let’s dive right in.

What is speculative RAG?

Speculative RAG improves how large language models (LLMs) answer questions by splitting the work into two steps:

- First, a smaller specialist model drafts several possible answers in parallel, each from a different subset of the retrieved documents.

- Then, a larger generalist model verifies these drafts and selects the best one as the final answer.

Research from Google reports that, on the PubHealth benchmark, speculative RAG improved accuracy by up to 12.97% while reducing latency by 51% compared with conventional RAG.

Let’s dive deep into why speculative RAG is better than traditional RAG systems.

How does speculative RAG improve traditional RAG?

Speculative RAG improves on traditional RAG by changing how the pipeline flows.

In a standard RAG framework, the AI system retrieves all the relevant documents it needs before generating the answer.

With speculative RAG, both the retrieval and generation phases happen simultaneously. While the documents are still being retrieved, a small model starts to generate draft responses using whatever information it already has.

These small-model drafts are not the final output; they are more like quick sketches. Once the rest of the document subsets arrive, a more powerful generalist LM (for example, Mixtral-8x7B or Mistral-7B) checks the drafts, scores them (including a self-reflection check), and picks the best draft as the answer.

Each draft is generated from a different slice of the retrieved documents. It is like getting several mini-answers from multiple points of view, then having an expert decide which one is strongest.
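The overlap between retrieval and drafting described above can be sketched with `asyncio`. This is a toy illustration, not the implementation from the Speculative RAG research: `retrieve`, `draft`, and `verify` are hypothetical stubs that only simulate latency, and the placeholder verifier simply returns the longest draft.

```python
import asyncio

# Hypothetical stand-ins for a search call and a small drafter model.
# They only sleep to simulate latency, then return placeholder text.
async def retrieve(source: str, delay: float) -> list[str]:
    await asyncio.sleep(delay)
    return [f"{source}-doc"]

async def draft(docs: list[str]) -> str:
    await asyncio.sleep(0.01)
    return "draft from " + ", ".join(docs)

def verify(drafts: list[str]) -> str:
    # Placeholder: a real verifier would score drafts with a larger model.
    # Here we just return the longest draft.
    return max(drafts, key=len)

async def speculative_answer(sources: dict[str, float]) -> tuple[str, list[str]]:
    # Start every retrieval at once.
    retrievals = [asyncio.create_task(retrieve(name, delay))
                  for name, delay in sources.items()]
    draft_tasks = []
    # as_completed yields retrievals in the order they finish, so a draft
    # starts on the fastest source while slower sources are still running.
    for finished in asyncio.as_completed(retrievals):
        docs = await finished
        draft_tasks.append(asyncio.create_task(draft(docs)))
    # Verification waits until every draft is ready.
    drafts = list(await asyncio.gather(*draft_tasks))
    return verify(drafts), drafts

best, drafts = asyncio.run(speculative_answer({"faq": 0.05, "tickets": 0.2, "manual": 0.1}))
print(drafts)
print(best)
```

The drafts list comes back in the order the sources finished retrieving, which shows that drafting on the fast sources did not wait for the slow one.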

What are the benefits of speculative RAG?

Let’s explore the key benefits of using speculative RAG.

1. Lower latency

The speed boost is one of the most noticeable improvements when using speculative RAG. It does not sit idle waiting for all the search results. Instead, it starts sketching answers as soon as the first bits of information come in, then refines them when it gets the rest.

2. Better handling of complex questions

Speculative RAG is well suited to knowledge-intensive questions. Because it explores several perspectives in parallel, it is better equipped to connect information spread across documents and still produce an accurate answer.

3. Good resource management

Speculative RAG lets a smaller, specialized model (the RAG drafter) handle the early work of reading documents and drafting answers, leaving the larger, more powerful model free to focus on verifying them.

Because each draft reads only a small subset of documents, and the large model does not have to process the full retrieved context itself, this approach can reduce token usage and computing costs.

4. Improved response quality

The multi-draft approach of speculative RAG tends to produce better answers because it gathers several perspectives before deciding on the final response, and the bigger model only has to pick the best-supported one.

During the verification phase, the bigger model can spot mistakes or gaps that a traditional RAG system would pass through unchecked.

Because each draft draws on different documents, the drafts also capture angles and details that a single pass over a long context can overlook when dealing with complex questions. In the original research, this translated into accuracy gains over standard RAG baselines.

Why is drafting important in speculative RAG?

Drafting is the reason speculative RAG works so well. Generating multiple drafts in parallel from different documents lets the system explore different options before settling on an answer.

The drafter model generates multiple candidate answers from different documents. This diversity is crucial because complex questions often have multiple angles, and you need to consider all of them.

The RAG verifier phase then scores each draft together with the rationale the drafter generated for it. Keeping each draft grounded in a small document subset, and checking it against its rationale, helps reduce hallucinations and the position bias that long contexts can introduce.

A generalist language model reviews the drafts, flags unsupported claims, and chooses the best answer.

This two-step approach (parallel drafting followed by verification) can produce more accurate answers than traditional single-step RAG systems.

How does speculative RAG work technically?

Speculative RAG works by breaking the traditional RAG workflow into two big steps that operate like a tag team:

- Draft generation: A smaller model produces multiple candidates in parallel.

- Verification: A larger LLM evaluates the drafts against criteria such as accuracy and relevance, assigns each a confidence score, and chooses or merges drafts into the final answer.

The approach borrows its draft-then-verify idea from speculative decoding, in which a small model proposes tokens and a larger model checks them. Each big step can be broken down into smaller steps:

Drafting

Drafting in speculative RAG is where the model begins sketching answers before completing the retrieval process. It is the first phase that sets the tone for the final output.

- Step 1: Once you input the query, the system begins parallel document retrieval from the knowledge base, typically using embedding similarity to find the most relevant matches.

- Step 2: The retrieved documents are ranked by relevance and passed forward for partitioning and draft generation.

- Step 3: When necessary, the retrieval pipeline can pull in data from multiple sources (such as databases or APIs). Querying these sources in parallel expands coverage while limiting the extra latency.

- Step 4: The retrieved documents are divided into unique subsets so that each RAG draft can draw from a different perspective.

- Step 5: The smaller model (a specialist RAG drafter) generates multiple candidate responses simultaneously, each based on its own document cluster.
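Steps 1 and 4 can be sketched in plain Python. This is a simplified, deterministic take on the clustering idea described in the Speculative RAG research, as we understand it: documents are grouped by content similarity and each subset takes one document per group. Here the embeddings and `cluster` labels are assumed to be precomputed (for example, by k-means), and `rank_docs` and `diverse_subsets` are hypothetical helper names.

```python
import math

def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def rank_docs(query_vec: list[float], docs: list[dict]) -> list[dict]:
    """Sort docs (dicts with an 'embedding' key) by similarity to the query."""
    return sorted(docs, key=lambda d: cosine(query_vec, d["embedding"]), reverse=True)

def diverse_subsets(ranked_docs: list[dict], num_subsets: int) -> list[list[dict]]:
    """Build subsets that each take at most one doc from every cluster.

    Subset i takes the i-th best-ranked doc of each cluster, so each draft
    sees different evidence while still covering every cluster that has
    enough docs. Subsets that would be empty are skipped.
    """
    by_cluster: dict[int, list[dict]] = {}
    for doc in ranked_docs:  # keeps rank order inside each cluster
        by_cluster.setdefault(doc["cluster"], []).append(doc)
    subsets = []
    for i in range(num_subsets):
        subset = [members[i] for members in by_cluster.values() if i < len(members)]
        if subset:
            subsets.append(subset)
    return subsets

sample_docs = [
    {"id": "a", "cluster": 0, "embedding": [1.0, 0.0]},
    {"id": "b", "cluster": 0, "embedding": [0.9, 0.1]},
    {"id": "c", "cluster": 1, "embedding": [0.6, 0.8]},
    {"id": "d", "cluster": 1, "embedding": [0.0, 1.0]},
]
ranked = rank_docs([1.0, 0.0], sample_docs)
subsets = diverse_subsets(ranked, num_subsets=3)
print([[d["id"] for d in s] for s in subsets])
```

Each resulting subset would then go to its own drafter call in Step 5.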

Verification

The verification phase uses a generalist language model to evaluate the drafts generated in parallel and select the best one.

It also checks that the answer is accurate and aligns with the query.

- Step 1: All candidate drafts are passed to the large generalist language model for a comprehensive review.

- Step 2: Each draft is then scored against criteria such as accuracy and relevance to the original query.

- Step 3: Cross-checking each draft candidate against the retrieved document subsets catches potential inconsistencies.

- Step 4: The final selection chooses the highest-scoring draft or merges the best parts of multiple drafts into a single response.
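The quality scoring in Step 2 can take many forms. As we understand the Speculative RAG research, it multiplies three signals per draft: the drafter's confidence, a self-consistency score from the verifier, and a self-reflection score (how likely the verifier thinks the rationale supports the answer). The sketch below assumes those three numbers have already been obtained from the models; the values are made up for illustration, and `ScoredDraft` and `select_best` are hypothetical names.

```python
from dataclasses import dataclass

@dataclass
class ScoredDraft:
    text: str
    drafter_conf: float      # how confident the small drafter was in its draft
    self_consistency: float  # how plausible the verifier finds draft + rationale
    self_reflection: float   # verifier's probability that the rationale supports the answer

    @property
    def score(self) -> float:
        # Multiplying the signals means a draft has to do reasonably well on
        # all three; one very low value drags the whole score down.
        return self.drafter_conf * self.self_consistency * self.self_reflection

def select_best(drafts: list[ScoredDraft]) -> ScoredDraft:
    """Return the highest-scoring draft (picking, not merging)."""
    return max(drafts, key=lambda d: d.score)

candidates = [
    ScoredDraft("Draft A", 0.9, 0.5, 0.5),
    ScoredDraft("Draft B", 0.6, 0.7, 0.8),
    ScoredDraft("Draft C", 0.95, 0.9, 0.1),
]
print(select_best(candidates).text)  # prints: Draft B
```

Draft C has the most confident drafter, but its low self-reflection score sinks it. With real model log-probabilities, summing the logs instead of multiplying probabilities avoids numerical underflow.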

Final output

This stage delivers the refined response after all the verification checks are complete.

- Step 1: First, the system shapes the chosen draft into the correct output format, ensuring it is clear and easy to read.

- Step 2: Citations and links to the sources are integrated so that users can easily trace and verify every claim in the answer.

- Step 3: A quality assurance check ensures accuracy and alignment with the original query intent.

- Step 4: Finally, the response is packaged with useful metadata (such as confidence scores, processing time, and source attribution) before it is presented to the user.
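The packaging steps above might look like this in code. It is a minimal sketch under our own assumptions: `FinalAnswer` and `package_answer` are hypothetical names, citations are rendered as a simple "Sources:" line, and the confidence value is whatever score the verification step produced.

```python
import time
from dataclasses import dataclass

@dataclass
class FinalAnswer:
    answer: str           # selected draft with a citation line appended
    sources: list[str]    # IDs of the documents the draft was based on
    confidence: float     # score carried over from the verification step
    processing_ms: float  # elapsed time since the query arrived

def package_answer(text: str, source_ids: list[str], confidence: float,
                   started_at: float) -> FinalAnswer:
    """Format the chosen draft and attach citations and metadata.

    started_at is a time.perf_counter() value recorded when the query arrived.
    """
    answer = text.strip()
    if source_ids:
        answer += "\n\nSources: " + ", ".join(f"[{s}]" for s in source_ids)
    return FinalAnswer(
        answer=answer,
        sources=list(source_ids),
        confidence=round(confidence, 3),
        processing_ms=(time.perf_counter() - started_at) * 1000,
    )

start = time.perf_counter()
result = package_answer("  Restart your router. ", ["kb-2"], 0.8123, start)
print(result.answer)
print(result.confidence, f"{result.processing_ms:.2f} ms")
```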

When should you use speculative RAG?

Speculative RAG is useful when you need quick and accurate answers, especially to complex questions requiring multiple information sources.

Some use cases of speculative RAG are:

- Chatbots: With speculative RAG, bots can respond quickly while grounding their answers in verified sources, so users get fast, reliable replies even when thousands of conversations are running at once.

- Summarization: Instead of skimming and scanning through long documents, speculative RAG helps create a few quick summaries and return the one that best captures the query's original intent.

- Customer service: When dealing with convoluted questions, speculative RAG can pull relevant information from past tickets or FAQs. It then verifies the response so customers get the correct answer the first time.

- Technical question answering systems: Speculative RAG can help developers verify that the LLM output is accurate. The verification can be from multiple external knowledge sources, such as code repositories or API documentation.

- Financial analysis and reporting: Investment analysts often need to pull complex datasets from market reports, news, and financial statements quickly. With speculative RAG, they can generate multiple analytical angles simultaneously and select the best-supported investment appraisal.

- Legal document analysis: Lawyers working on complex cases usually review many precedents. Speculative RAG can help explore different argument angles from multiple sources at once, then select the strongest case strategy after verifying it against trusted legal databases.

How to implement speculative RAG

Implementing speculative RAG involves:

- A retriever (e.g., Meilisearch or FAISS, optionally paired with embedding models from Hugging Face)

- A drafter model

- A RAG verifier

- Integration of evaluator functions and metadata (like confidence scores, input token usage, and response times)

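Let’s build a simple speculative RAG system that searches documents with Meilisearch, creates several possible answers with OpenAI, and then uses a second model to decide which one is best. To keep the example short, every draft sees the same documents and the drafts differ only in sampling temperature, a simplification of the document-subset approach described above.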

1. Setting up Meilisearch

If you do not already have Meilisearch installed, you can install it with:

```bash
# Install Meilisearch
curl -L https://install.meilisearch.com | sh
```

Once installed, start the server with a master key:

```bash
# Launch Meilisearch
./meilisearch --master-key="aSampleMasterKey"
```

For security, store your credentials in a .env file. Add the following values to the file:

```
MEILI_URL=http://127.0.0.1:7700
MEILI_KEY=aSampleMasterKey
OPENAI_API_KEY=your_openai_api_key_here
```

Note that you will need to generate an OpenAI API key to complete the setup.

2. Set up the environment and dependencies

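In this step, we will create a Python file, import the necessary libraries, and load the environment variables. The code assumes you have installed the `meilisearch`, `openai`, and `python-dotenv` packages (for example, with `pip install meilisearch openai python-dotenv`).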

We will also create an API client that will communicate with the Meilisearch server.

Lastly, we create an index to store the knowledge base.

```python
import meilisearch
import asyncio
import os
import time
from dotenv import load_dotenv
from openai import OpenAI

# Set up environment variables
load_dotenv()
MEILI_URL = os.getenv("MEILI_URL", "http://127.0.0.1:7700")
MEILI_KEY = os.getenv("MEILI_KEY", "aSampleMasterKey")
OPENAI_API_KEY = os.environ["OPENAI_API_KEY"]

# Initialize clients
ms = meilisearch.Client(MEILI_URL, MEILI_KEY)
oa = OpenAI(api_key=OPENAI_API_KEY)
index = ms.index('simple_kb')
```

3. Create a knowledge base

Now comes the foundation of our RAG system – the knowledge base itself. We will build a simple customer support knowledge base, but the same principle applies whether you're dealing with legal documents, technical manuals, or any other domain.

Each document in our knowledge base has an ID, a topic (for easy categorization), and content (the actual information). This structure makes it easy for Meilisearch to find relevant information when users ask questions.

```python
# Sample knowledge base - just 3 simple docs
docs = [
    {"id": 1, "topic": "password reset", "content": "To reset password: 1) Click forgot password 2) Check email 3) Follow link"},
    {"id": 2, "topic": "slow internet", "content": "For slow internet: 1) Restart router 2) Check other devices 3) Call ISP"},
    {"id": 3, "topic": "email issues", "content": "Email problems: 1) Check spam folder 2) Verify settings 3) Try different browser"},
]

def setup_kb():
    """Recreate the index and add the sample documents to Meilisearch."""
    # Delete any previous copy of the index and wait for the task to finish.
    # On the first run there is nothing to delete, so ignore any error.
    try:
        ms.wait_for_task(index.delete().task_uid)
    except Exception:
        pass

    # Add documents and block until Meilisearch has finished indexing them,
    # instead of guessing how long a fixed sleep should be.
    task = index.add_documents(docs, primary_key="id")
    ms.wait_for_task(task.task_uid)
    print("Knowledge base ready")
```

4. Smart document search

This is where we implement our RAG system's ‘retrieval’ part. When a user asks a question, we find the most relevant documents from our knowledge base.

We also need to handle the different ways users phrase the same question.

Meilisearch helps here: it tolerates typos, can search across multiple fields at once, and by default still returns results when only some of the query words match.

Our fallback logic catches cases where the primary search finds nothing, ensuring we always return something useful.

```python
def search_docs(question):
    """Find the most relevant docs for a question."""
    results = index.search(question, {
        'limit': 2,
        'attributesToSearchOn': ['topic', 'content']
    })

    # Fall back to a simple keyword search if the full question finds nothing
    if len(results['hits']) == 0:
        q = question.lower()
        if 'password' in q:
            results = index.search('password', {'limit': 2})
        elif 'internet' in q or 'slow' in q:
            results = index.search('internet', {'limit': 2})
        elif 'email' in q:
            results = index.search('email', {'limit': 2})

    return results['hits']
```

5. Generate multiple drafts in parallel

Now, we build the core of the speculative RAG. We generate multiple parallel responses and pick the best one.

To do this, we use a different temperature setting for each draft, which changes how creative the model is: lower values produce more focused, conservative responses, while higher values encourage more creative, varied outputs.

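```python
async def generate_draft(question, docs, draft_num):
    """Generate one draft response."""
    context = "\n".join(f"- {doc['content']}" for doc in docs)
    prompt = f"Context:\n{context}\n\nQuestion: {question}\nAnswer briefly:"

    # The OpenAI client used here is synchronous, so run the call in a worker
    # thread. Otherwise each request would block the event loop and the
    # "parallel" drafts would actually run one after another.
    response = await asyncio.to_thread(
        oa.chat.completions.create,
        model="gpt-3.5-turbo",
        messages=[{"role": "user", "content": prompt}],
        max_tokens=100,
        temperature=0.3 + (draft_num * 0.3),  # 0.3, 0.6, 0.9: different creativity levels
    )
    return response.choices[0].message.content
```

Notice the async function here. Because the blocking OpenAI call runs in a separate thread via `asyncio.to_thread` (Python 3.9+), `asyncio.gather` can send all three requests at the same time. (The openai package also offers an `AsyncOpenAI` client that can be awaited directly.) The temperature also changes (0.3, 0.6, 0.9), giving three distinct ‘personalities.’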

6. Pick the best draft

Now we need a judge. We use an AI model to evaluate all options and select the best.

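Newer models such as GPT-4o are generally better judges than GPT-3.5-Turbo. Because the judge makes only one short call per question, we can afford a stronger model at this step. The code below uses GPT-4o-mini, which is actually cheaper per token than GPT-3.5-Turbo; you could swap in a larger model such as GPT-4o if judging quality matters more than cost.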

```python
def pick_best_draft(question, drafts):
    """Ask a stronger model to pick the best draft; fall back to draft 1."""
    drafts_text = "\n".join(f"Draft {i+1}: {draft}" for i, draft in enumerate(drafts))
    prompt = (
        f"Question: {question}\n\n{drafts_text}\n\n"
        f"Which draft is best? Reply with just the number (1-{len(drafts)}):"
    )
    response = oa.chat.completions.create(
        model="gpt-4o-mini",
        messages=[{"role": "user", "content": prompt}],
        max_tokens=10,
        temperature=0,  # deterministic judging
    )
    reply = response.choices[0].message.content or ""
    # Take the first digit in the reply, tolerating answers like "Draft 2."
    digits = [int(ch) for ch in reply if ch.isdigit()]
    best_num = digits[0] if digits else 1
    if not 1 <= best_num <= len(drafts):
        best_num = 1
    return drafts[best_num - 1]
```

7. Put it all together

This demonstrates the full speculative RAG pipeline: retrieving relevant documents, generating multiple candidate responses in parallel, and selecting the best one.

```python
async def answer_question(question):
    """Main function: speculative RAG in action."""
    print(f"\nQuestion: {question}")

    # Search for relevant docs
    docs = search_docs(question)

    # Generate 3 drafts in parallel - this is the key improvement
    tasks = [generate_draft(question, docs, i) for i in range(3)]
    drafts = await asyncio.gather(*tasks)

    # Ask the judge model to pick the best draft
    best_answer = pick_best_draft(question, drafts)

    # Show results
    print("\nAll drafts:")
    for i, draft in enumerate(drafts, 1):
        print(f"{i}. {draft}")
    print(f"\nBest answer: {best_answer}")
```

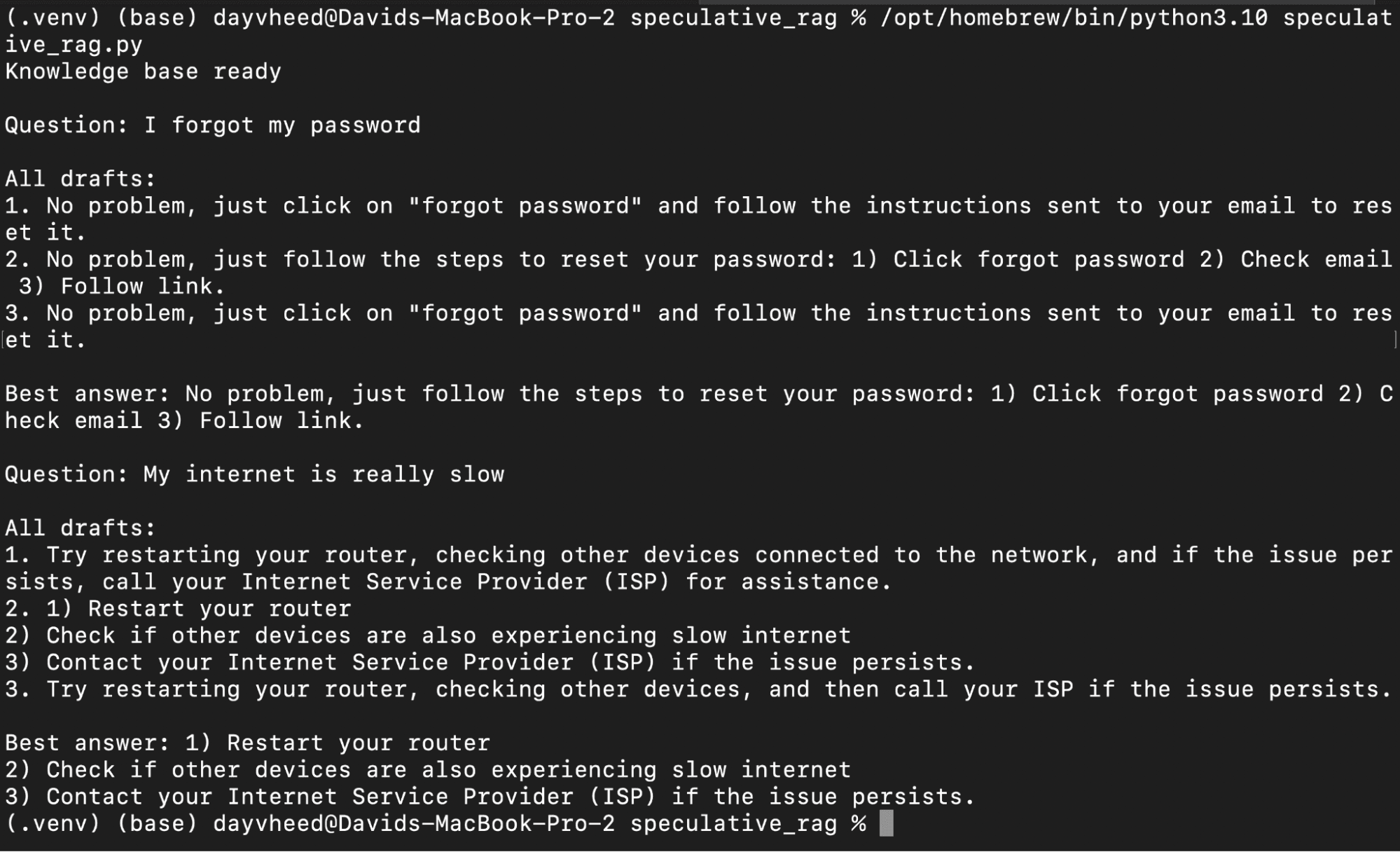

8. Demoing the project

Let's test the system with some realistic questions:

```python
async def main():
    setup_kb()
    questions = [
        "I forgot my password",
        "My internet is really slow",
    ]
    for q in questions:
        await answer_question(q)

if __name__ == "__main__":
    asyncio.run(main())
```

When we run the Python file, each question is matched to the relevant knowledge base entry, and the script prints all three drafts followed by the answer the judge model selected.

What are the limitations of speculative RAG?

While speculative RAG has many benefits, there are also a few limitations you should be aware of. Here are some of them:

- Model compatibility: Some language models are not optimized for fast back-and-forth between generation and verification. To use speculative RAG efficiently, you may need to use specific models, which can limit your choices if you already have a preferred provider.

- Implementation complexity: Building a solid RAG flow is already complex. You must select the right vector database, embedding model, text splitter, retriever, and the LLM that ties it together. Speculative RAG adds another layer: a drafting and verification pipeline in which the small and large models have to work well together. This requires additional planning and a clear understanding of how all the moving pieces fit together.

- Potential accuracy trade-offs: Even with verification, mistakes can sneak through, especially if the retrieved sources are outdated or incomplete. If your drafter model generates poor-quality drafts, even the best verifier can only pick the least bad option. Careful fine-tuning of the drafter, paired with a capable verifier, is needed to minimize these issues, especially when handling long-context inputs.

- Higher computational costs: Running multiple drafts and verification checks in parallel can be expensive in terms of computing time and API costs, especially at a large scale.

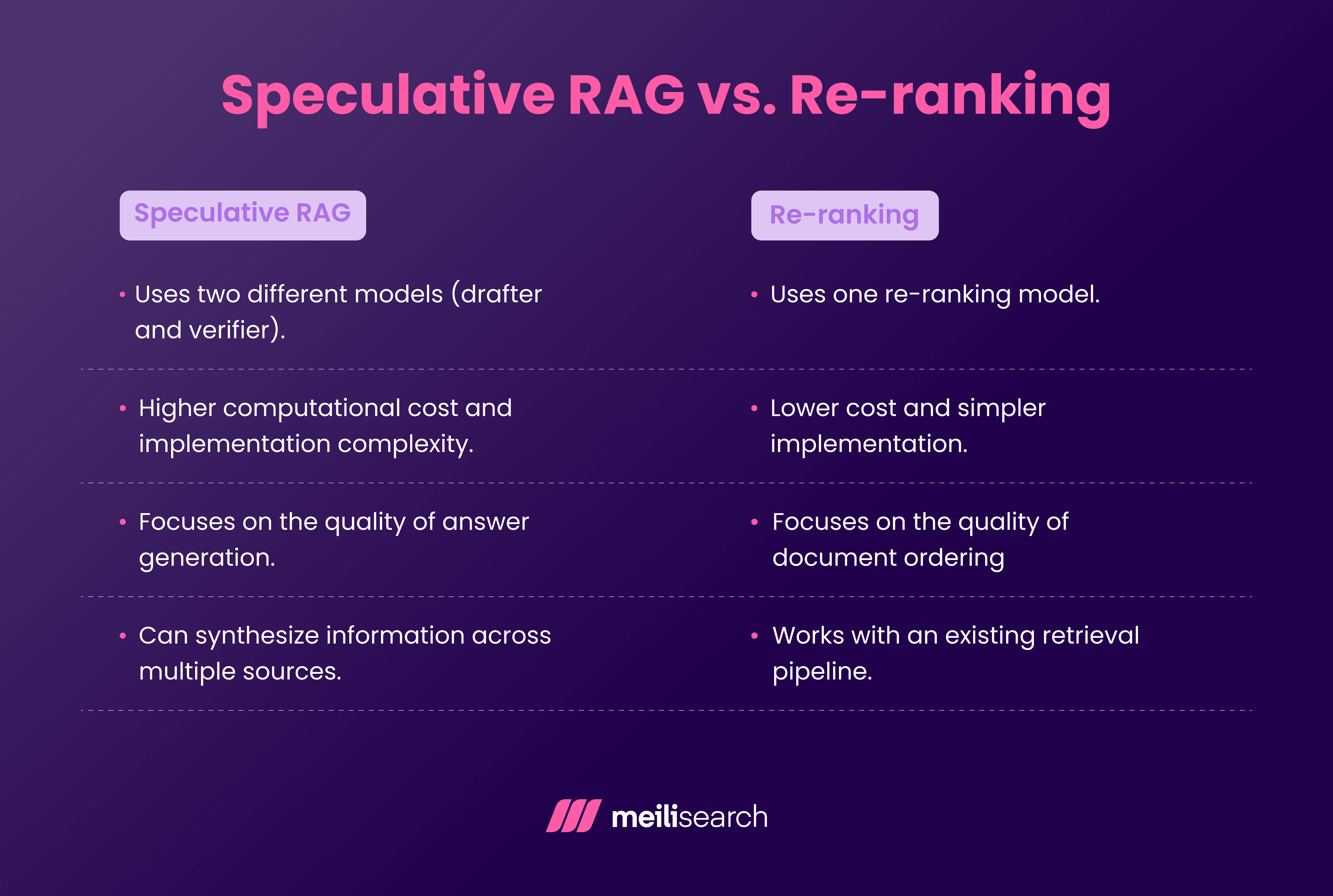

How does speculative RAG compare to re-ranking?

The main difference between speculative RAG and re-ranking is that speculative RAG generates multiple answer drafts, verifies them, and picks the best one, while re-ranking reorders the retrieved documents by relevance before generating a single answer.

Both are appropriate for different scenarios.

You use re-ranking when your main problem is getting the right documents to the top of your search results, especially with straightforward queries.

Speculative RAG is the better fit when answer quality on complex questions matters most and you can afford the extra computational cost.
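To make the contrast concrete, here is a toy re-ranker. It is only an illustration under a stated assumption: word overlap with the query stands in for a real re-ranking model such as a cross-encoder, and `rerank` is a hypothetical helper. Note that it changes which documents a single generation call sees; it never produces or compares multiple answers.

```python
def rerank(query: str, passages: list[str], top_k: int = 2) -> list[str]:
    """Reorder retrieved passages by relevance and keep the top_k.

    Word overlap with the query stands in for a real re-ranking model;
    it is only here to show the data flow.
    """
    query_terms = set(query.lower().split())

    def overlap(passage: str) -> int:
        return len(query_terms & set(passage.lower().split()))

    # sorted() is stable, so ties keep their original retrieval order.
    return sorted(passages, key=overlap, reverse=True)[:top_k]

hits = [
    "Check spam folder for missing email",
    "Restart router for slow internet",
    "Reset password via email link",
]
top = rerank("slow internet at home", hits)
print(top)
```

With re-ranking, `top` would feed one generation call. A speculative RAG pipeline would instead draft answers from several document subsets and verify those drafts.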

Final thoughts on using speculative RAG in production

Speculative RAG shows how RAG systems are evolving toward better chatbots, smarter algorithms, and faster response times.

It is one of those techniques that makes you realize how bright the future is with search.

If you are dealing with complex queries where answer quality matters and you need the responses quickly, speculative RAG is well worth considering.

You might need a higher computational budget and more technical expertise than with standard RAG, but the boost in user experience is hard to miss.

How Meilisearch can support efficient retrieval in speculative RAG systems

Meilisearch can serve as the fast, first-step retriever in speculative RAG, supplying the top documents used to draft multiple responses. Its hybrid search features return relevant context efficiently, so the speculative process starts with high-quality candidates.