10 Best RAG Tools and Platforms: Full Comparison [2026]

Discover 10 of the best RAG tools alongside their key features, pricing, pros and cons (based on real users), integrations, and more.



What’s the most popular way to connect large language models (LLMs) with up-to-date or private data sources?

Retrieval-augmented generation (RAG) tools.

RAG tools make LLMs more reliable in real-world AI applications by grounding responses in retrieved data, which reduces hallucinations and improves accuracy.

RAG tools index your data, retrieve the most relevant information through keyword, vector, or hybrid search, and pass that context to the language model so it can generate more accurate responses. Which type of search works best depends on your data and the queries you expect.
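The index, retrieve, and generate loop described above can be sketched in a few lines of standard-library Python. This is a toy under stated assumptions: a bag-of-words cosine similarity stands in for a real embedding model or search engine, and the sketch stops at building the prompt instead of calling an actual LLM.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Rank every document against the query and keep the k best matches."""
    q = Counter(tokenize(query))
    return sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)[:k]


def build_prompt(question: str, context: list[str]) -> str:
    """Place the retrieved passages in front of the question for the LLM."""
    sources = "\n".join(f"- {passage}" for passage in context)
    return f"Answer using only these sources:\n{sources}\n\nQuestion: {question}"


docs = [
    "Refunds are processed within 5 business days.",
    "Our office is closed on public holidays.",
    "Refunds require the order number.",
]
question = "How long do refunds take?"
prompt = build_prompt(question, retrieve(question, docs))
print(prompt)  # this string would then be sent to the LLM of your choice
```

Both refund passages make it into the prompt while the unrelated holiday notice is left out, which is exactly the filtering step that keeps the model focused on relevant context.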

Here’s a quick overview of the 10 top-rated RAG tools with their most useful functions and pricing structures:

1. Meilisearch

Meilisearch is an intuitive yet powerful search engine that scales with your business. Our core aim with this product is to power RAG pipelines, semantic search, and lightning-fast information retrieval for developers and product teams.

Source: Meilisearch

Key features:

- Hybrid search (BM25 + vectors): Meilisearch combines keyword and semantic search to return more relevant context in LLM workflows.

- Typo-tolerant search: It handles typos in user queries without any extra application logic.

- Custom ranking rules: As a core feature, Meilisearch allows you to fine-tune how results are scored and sorted.

- Multilingual tokenization: It supports over 20 languages, including CJK and Thai.
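To make typo tolerance concrete, here is a simplified sketch: a query word matches an indexed word if their edit distance stays within a budget that grows with word length. The 5- and 9-character thresholds mirror Meilisearch's documented defaults for `minWordSizeForTypos`, but the plain Levenshtein matching below is an assumption for illustration, not Meilisearch's actual engine.

```python
def levenshtein(a: str, b: str) -> int:
    """Classic edit distance: insertions, deletions, and substitutions each cost 1."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # delete a character from a
                curr[j - 1] + 1,           # insert a character into a
                prev[j - 1] + (ca != cb),  # substitute (free when characters match)
            ))
        prev = curr
    return prev[-1]


def allowed_typos(word: str, one_typo: int = 5, two_typos: int = 9) -> int:
    """Longer query words tolerate more typos; short words must match exactly."""
    if len(word) >= two_typos:
        return 2
    if len(word) >= one_typo:
        return 1
    return 0


def typo_tolerant_match(query_word: str, indexed_word: str) -> bool:
    """True if the query word is within its typo budget of the indexed word."""
    distance = levenshtein(query_word.lower(), indexed_word.lower())
    return distance <= allowed_typos(query_word)


print(typo_tolerant_match("serch", "search"))  # True: one missing letter
print(typo_tolerant_match("cat", "car"))       # False: short words get no typo budget
```

Scaling the budget with word length keeps short queries precise (so "cat" never drifts to "car") while still forgiving the mistakes people actually make in longer words.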

Pricing:

- Build – $30/month: Includes 50K searches and 100K documents.

- Pro – $300/month: Includes 250K searches and 1M documents. Adds priority support and extended analytics.

- Custom – Contact sales. Resource-based with volume discounts, premium SLA, SSO (SAML), and compliance features like SOC2.

- Open Source – Free: Self-host Meilisearch on your own infrastructure.

A 14-day free trial is available for all new Cloud users, and no credit card is required.

Integrations:

We offer SDKs for JavaScript, Python, PHP, Go, Rust, Java, .NET, Dart, and Swift. You can also integrate with frameworks like Laravel Scout, Rails, and Symfony.

For the frontend, we support Vue, React, InstantMeilisearch, and Autocomplete.

LangChain integration is available for vector and hybrid search, and we work well in cloud-native environments like Firebase, GCP, and Kubernetes.

Pros and cons (based on real G2 reviews):

These pros and cons are based on feedback from real users who left verified reviews on G2.

Pros:

- Fast setup and onboarding: Multiple reviewers mention going from install to search in under 10 minutes.

- Excellent performance: Users consistently praise speed and responsiveness, even with large datasets.

- Excellent developer experience: Clear documentation and a flexible API make integration easy.

Cons:

- Dashboard limitations: Some users want more advanced filtering and better metrics visibility.

- Evolving enterprise features: A few users noted that enterprise-specific controls are still being built.

Best for:

- Developers who need a simple, high-speed way to retrieve relevant data for prompts or AI assistants.

- ML engineers and data scientists who want a tunable vector store layer that blends keyword relevance with semantic recall.

- Product teams and startups looking to ship AI-powered search with minimal setup and a smooth growth path from prototype to production.

What a real user says:

Here’s a quick quote from a verified G2 reviewer at a small business in the Health and Wellness space, using Meilisearch Cloud for internal asset search:

“Meilisearch has been a great tool to work with. It’s really easy to use, and the setup process is straightforward, which saved me a lot of time. The documentation is clear … I’ve also had a really good experience with their support team… It’s fast and handles searches and indexing smoothly, even with larger datasets.”

Final verdict:

We build Meilisearch for developers and teams who don't want to compromise on speed or flexibility. With multiple pricing and integration options, our aim is to give users the complete package to power their RAG pipelines and business growth.

2. LangChain

LangChain structures workflows through prompts, tools, memory, and vector stores. If you’re building an AI agent, chatbot, RAG system, or document question-answering tool, you cannot go wrong with LangChain.

Source: LangChain

Key features:

- Chains and agents: LangChain enables devs to set up multi-step workflows or use agents that intelligently choose tools and paths based on user inputs.

- Prompt and memory handling: With LangChain, you can create reusable prompt templates and persistent memory to maintain conversation context.

- Data loaders and integrations: As an all-encompassing framework, it can ingest files, databases, and web content, and it offers built-in support for major LLMs, vector databases, and APIs.
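The idea behind reusable prompt templates and conversation memory can be shown without any framework. The `ChatSession` class and `fake_llm` function below are hypothetical stand-ins, not LangChain's API; in LangChain you would use its own prompt, memory, and model classes instead.

```python
from dataclasses import dataclass, field

TEMPLATE = (
    "You are a helpful assistant.\n"
    "Conversation so far:\n{history}\n"
    "Context:\n{context}\n"
    "User: {question}\nAssistant:"
)


@dataclass
class ChatSession:
    """Hypothetical helper: one reusable prompt template plus a rolling memory."""
    template: str = TEMPLATE
    max_turns: int = 3  # how many past exchanges to replay into each prompt
    history: list[tuple[str, str]] = field(default_factory=list)

    def build_prompt(self, question: str, context: str) -> str:
        """Fill the template with recent turns, retrieved context, and the question."""
        turns = self.history[-self.max_turns:]
        history = "\n".join(f"User: {q}\nAssistant: {a}" for q, a in turns) or "(none)"
        return self.template.format(history=history, context=context, question=question)

    def remember(self, question: str, answer: str) -> None:
        """Store the exchange so later prompts keep the conversation context."""
        self.history.append((question, answer))


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call."""
    return f"(answer based on {len(prompt)} prompt characters)"


session = ChatSession()
for q in ["What is RAG?", "Which tools support it?"]:
    prompt = session.build_prompt(q, context="RAG = retrieval-augmented generation.")
    session.remember(q, fake_llm(prompt))
```

By the second question, the prompt already replays the first exchange, which is what lets a chatbot resolve follow-ups like "it".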

Pricing:

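The LangChain framework itself is open source and free. The paid plans below cover LangSmith, LangChain's platform for tracing, evaluation, and deployment, and follow a pay-as-you-go model: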

- Developer – Free

- Plus – Starting at $39/month per seat

- Enterprise – Custom pricing

Integrations:

LangChain works with major LLM providers (OpenAI, Anthropic, Azure), vector databases (Pinecone, Weaviate, Milvus), document loaders (CSV, PDFs, websites, Notion), and supports LangSmith and LangGraph for observability and orchestration.

It also integrates easily with frameworks like Hugging Face and tools like ChromaDB and Supabase.

Pros and cons (based on G2 user reviews):

We analyzed genuine G2 reviews to understand what users love and what frustrates them. Here’s the verdict:

Pros:

- The modular architecture is a favorite. It makes it easy to build flexible workflows.

- Extensive integration options across the LLM ecosystem.

- Reviewers call it “great” for quickly prototyping RAG and agent-based applications.

Cons:

- Frequent releases make the documentation hard to keep up with, which leaves some users confused.

- The learning curve can be steep for those new to chains or agents.

- Some developers report latency and maintainability issues at production scale.

Best for:

- Developers building agentic RAG applications.

- ML engineers and data scientists looking to experiment with vector retrieval, evaluation, and fine-tuned control.

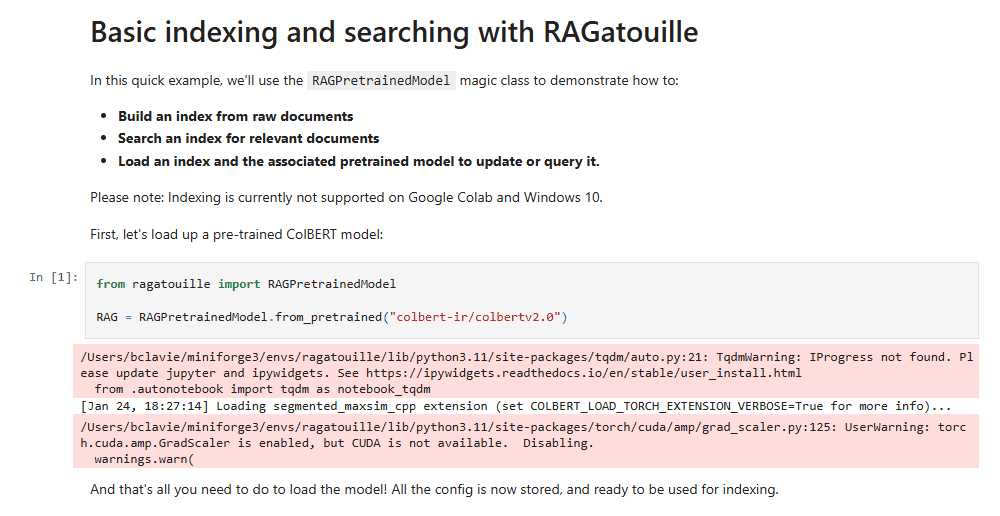

3. RAGatouille

RAGatouille is a lightweight Python package that brings ColBERT-style late interaction retrieval into real-world RAG pipelines. It’s open source, easy to install, and compatible with LangChain, LlamaIndex, and other frameworks.

Source: RAGatouille GitHub

Key features:

- ColBERT-based retrieval: RAGatouille uses token-level late interaction scoring for better accuracy, which is key in domain-specific tasks.

- Training pipeline: It also includes tools like RAGTrainer and triplet mining for creating high-quality indexes or fine-tuning.

- Reranking with contextual compression: You can also use it as a reranker for existing retrievers without reindexing everything.
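Late interaction scoring is easier to grasp with numbers. In the ColBERT "MaxSim" scheme, each query token embedding is compared with every document token embedding, the best match per query token is kept, and those maxima are summed. The sketch below uses toy 2-D vectors as an assumption; real ColBERT models produce higher-dimensional token embeddings (typically 128 dimensions) from a trained encoder, and this is not RAGatouille's code.

```python
import math

Vector = list[float]


def normalize(v: Vector) -> Vector:
    """Scale a vector to unit length so dot products become cosine similarities."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v] if n else v


def maxsim_score(query_tokens: list[Vector], doc_tokens: list[Vector]) -> float:
    """For each query token, take its best cosine match among the document
    tokens, then sum those per-token maxima."""
    q = [normalize(t) for t in query_tokens]
    d = [normalize(t) for t in doc_tokens]
    return sum(max(sum(a * b for a, b in zip(qt, dt)) for dt in d) for qt in q)


# Toy token embeddings: doc_a covers both query tokens, doc_b covers only the first.
query = [[1.0, 0.0], [0.0, 1.0]]
doc_a = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]]
doc_b = [[1.0, 0.1], [0.9, 0.0]]

print(maxsim_score(query, doc_a))  # 2.0
print(maxsim_score(query, doc_b))  # lower: the second query token finds no good match
```

Because every query token must find its own match, a document that only covers part of the query scores lower, which is why this approach does well on precise, domain-heavy questions.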

Pricing:

RAGatouille is entirely free and open source. At this time, there is no paid tier, hosted version, or enterprise offering.

Integrations:

RAGatouille integrates with LangChain as a retriever and reranker. It supports contextual compression workflows, works with any base retriever (like FAISS or Milvus), and is often used alongside document loaders like PDFs, CSVs, or web content.

It’s also been tested in setups with LlamaIndex and Vespa.

Pros and cons (based on GitHub feedback and LangChain community use):

Pros:

- Makes it very simple to bring ColBERT to production without writing custom code.

- Works as both a retriever and a reranker across multiple stacks.

- High accuracy for long documents and domain-heavy content.

Cons:

- Only available in Python, so not quite ready for full cross-platform production.

- Requires decent computing power and memory for indexing.

- No managed dashboard or enterprise support.

Best for:

- ML engineers working on retrieval quality who need granular, token-level scoring for tighter LLM responses.

- Data scientists building RAG prototypes.

- Academic teams or IR researchers looking to test ColBERT-style algorithms in live pipelines.

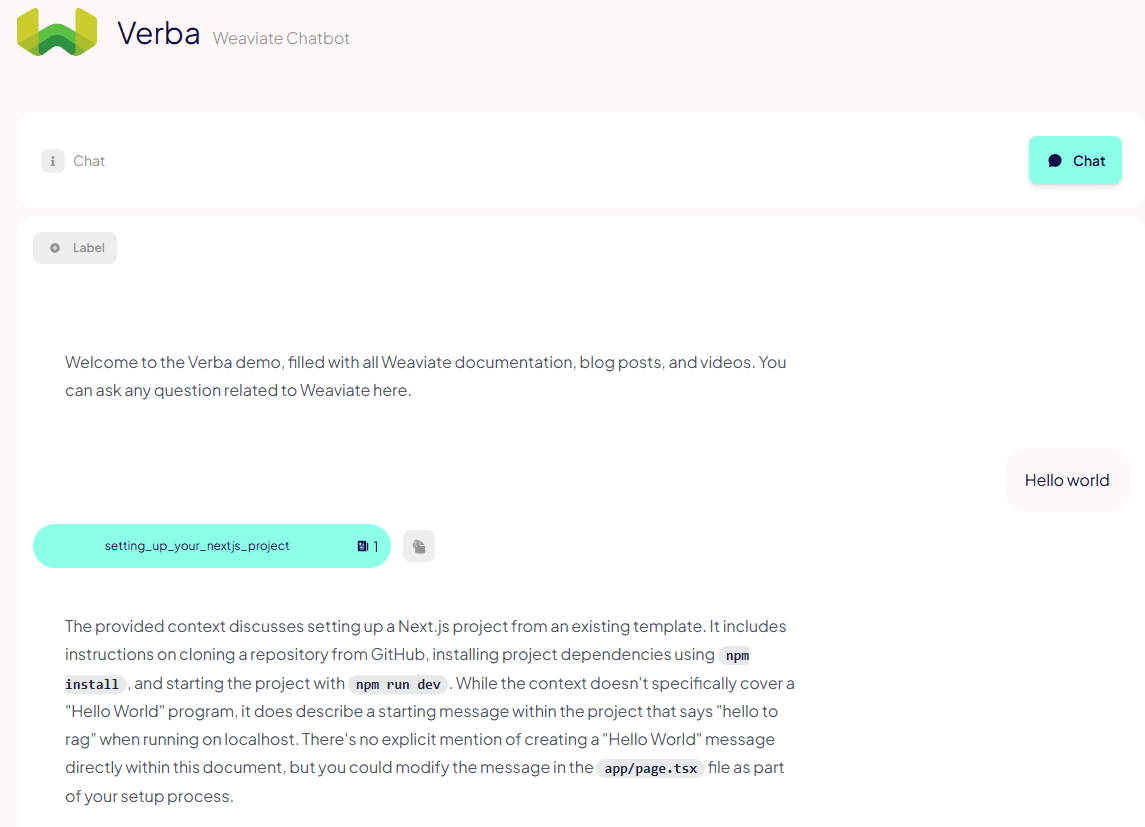

4. Verba

With Verba, you get a user-friendly UI where you can upload documents and index them into Weaviate’s vector database. Verba’s chat interface guides non-technical users through document ingestion, chunking, vectorization, and chat-based querying.

Source: Verba

Key features:

- Web-based chat UI: You can upload PDFs, Markdown, CSV, GitHub repos, and more; chat directly with your data and see sources highlighted.

- Hybrid search + semantic cache: Lets you combine vector and keyword search and keeps a semantic cache of past queries for faster repeat responses.

- Flexible chunking strategies: Offers token-based, sentence, semantic, and recursive chunking, powered by spaCy, for better indexing.

- Model choice and deployment: Supports multiple LLM providers and can run locally with Ollama or via Docker.
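Token-based chunking with overlap is the simplest of the strategies listed above. The sketch below assumes whitespace splitting as the "tokenizer" for illustration; Verba's own chunkers rely on spaCy and expose their own settings, so `chunk_size` and `overlap` here are hypothetical parameter names.

```python
def chunk_tokens(text: str, chunk_size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into windows of `chunk_size` whitespace tokens; consecutive
    windows share `overlap` tokens so content cut at a boundary keeps context."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    tokens = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(" ".join(tokens[start:start + chunk_size]))
        if start + chunk_size >= len(tokens):
            break  # the last window already reaches the end of the text
    return chunks


text = " ".join(f"w{i}" for i in range(12))
print(chunk_tokens(text, chunk_size=5, overlap=2))
# ['w0 w1 w2 w3 w4', 'w3 w4 w5 w6 w7', 'w6 w7 w8 w9 w10', 'w9 w10 w11']
```

The overlap costs some extra storage, but it means a sentence split across two chunks still appears whole in at least one of them.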

Pricing:

Verba is 100% open source and free to use. Currently, there is no managed cloud service or enterprise tier.

Integrations:

Verba integrates with Weaviate’s vector database, embedding providers (OpenAI, Cohere, HuggingFace, Ollama), document loaders like UnstructuredIO, and frameworks like LangChain and LlamaIndex.

Pros and cons (based on community feedback and documentation):

Pros:

- Very easy to install and start, even for non-developers.

- Users enjoy the transparent chat experience with visible sources and highlighted chunks.

Cons:

- Not suitable for multi-user or enterprise-scale deployments.

- Resource-heavy: indexing large datasets needs substantial RAM and CPU.

- Community-driven enterprise features like dashboard analytics and support are still evolving.

Best for:

- Small teams and solo developers who need simple chatbots or knowledge bases.

- Data scientists and ML practitioners who need to prototype data chatbots using custom models.

- Education and research groups exploring document QA and RAG without infrastructure overhead.

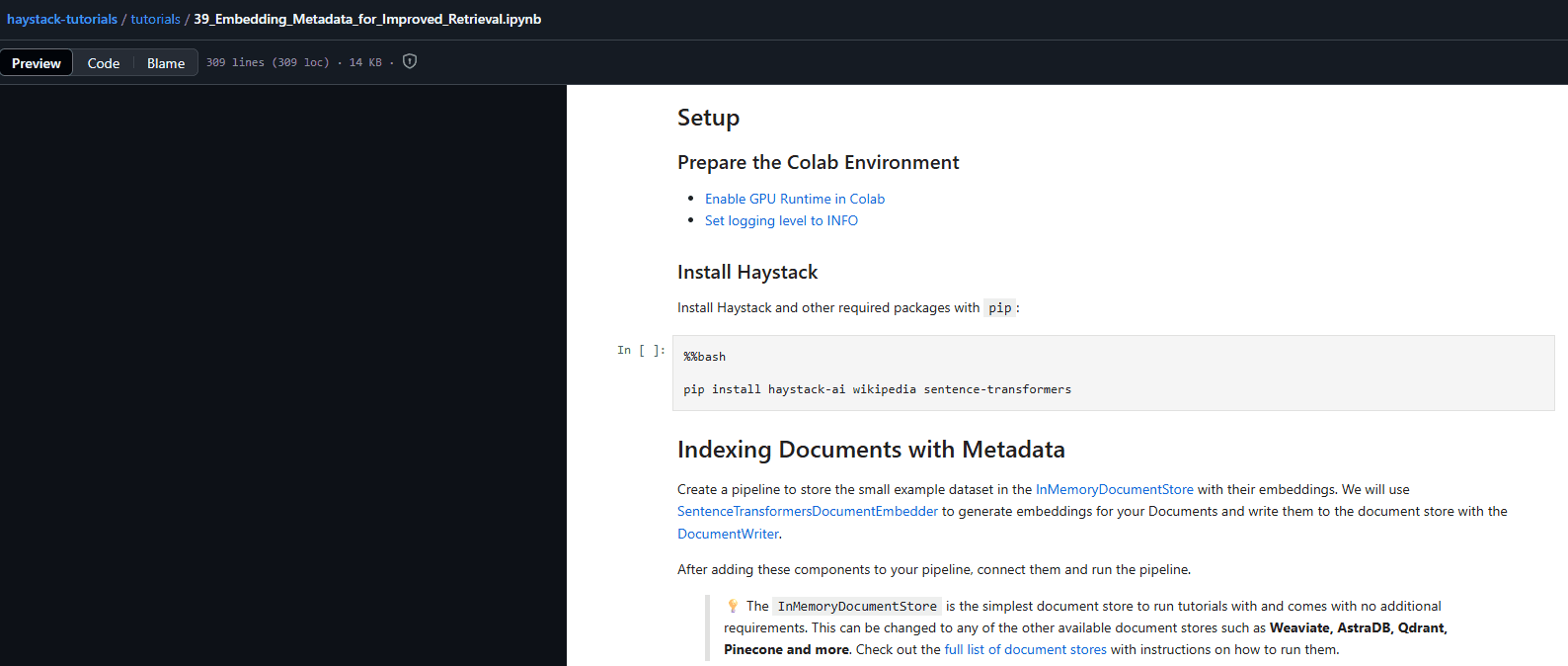

5. Haystack

Haystack is an open-source Python framework developed by deepset for building production-grade RAG pipelines, AI agents, and semantic search systems.

Source: Haystack GitHub

Key features:

- Modular pipelines that connect retrievers, generators, rankers, and evaluators.

- A REST API to deploy the RAG system with minimal setup.

- Easy support for agents that dynamically route user queries through tools or models.
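The modular-pipeline idea can be illustrated with plain functions that each read and extend a shared state. Haystack ships its own `Pipeline` abstraction for this; the framework-free sketch below is not that API, and `keyword_retriever`, `overlap_ranker`, `prompt_builder`, and `run_pipeline` are hypothetical names.

```python
from typing import Callable

Component = Callable[[dict], dict]


def keyword_retriever(state: dict) -> dict:
    """Keep documents that share at least one word with the query."""
    words = set(state["query"].lower().split())
    hits = [d for d in state["documents"] if words & set(d.lower().split())]
    return {**state, "candidates": hits}


def overlap_ranker(state: dict) -> dict:
    """Re-order candidates by how many query words they contain; keep top_k."""
    words = set(state["query"].lower().split())
    ranked = sorted(state["candidates"],
                    key=lambda d: len(words & set(d.lower().split())), reverse=True)
    return {**state, "candidates": ranked[: state.get("top_k", 2)]}


def prompt_builder(state: dict) -> dict:
    """Turn the surviving candidates into a prompt for a generator (LLM)."""
    context = "\n".join(state["candidates"])
    return {**state, "prompt": f"Context:\n{context}\n\nQuestion: {state['query']}"}


def run_pipeline(components: list[Component], state: dict) -> dict:
    """Run each component in order, feeding its output into the next one."""
    for component in components:
        state = component(state)
    return state


result = run_pipeline(
    [keyword_retriever, overlap_ranker, prompt_builder],
    {"query": "vector search latency", "documents": [
        "Vector search latency depends on index size.",
        "Keyword search uses an inverted index.",
        "Billing is monthly.",
    ]},
)
print(result["prompt"])
```

Because every stage shares the same input and output shape, you can swap the ranker or retriever for a better one without touching the rest of the pipeline, which is the main appeal of the modular design.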

Pricing:

Haystack is free and open source under Apache 2.0.

For visual pipeline orchestration and managed hosting, deepset offers deepset Studio and deepset Cloud/Enterprise with custom pricing for production.

Integrations:

Haystack works with vector stores like FAISS, Elasticsearch, Pinecone, and Qdrant; LLMs from OpenAI, Anthropic, Cohere, and Hugging Face; and tools like Langfuse and Chainlit for monitoring or UI.

The pipelines are also fully customizable through YAML or Python.

Pros and cons (based on user and expert reviews):

Pros:

- Built for complex, multi-stage AI applications.

- Provides enterprise-ready, observable tooling that supports team workflows.

- A strong community and solid documentation.

Cons:

- Python only; no SDKs for JS, Java, or other languages.

- Has a steeper learning curve than lightweight RAG libraries.

- Requires you to have orchestration knowledge for advanced use cases.

Best for:

- Teams going from demo to deployment.

- Companies that want full control and need observability, structure, and zero vendor mystery.

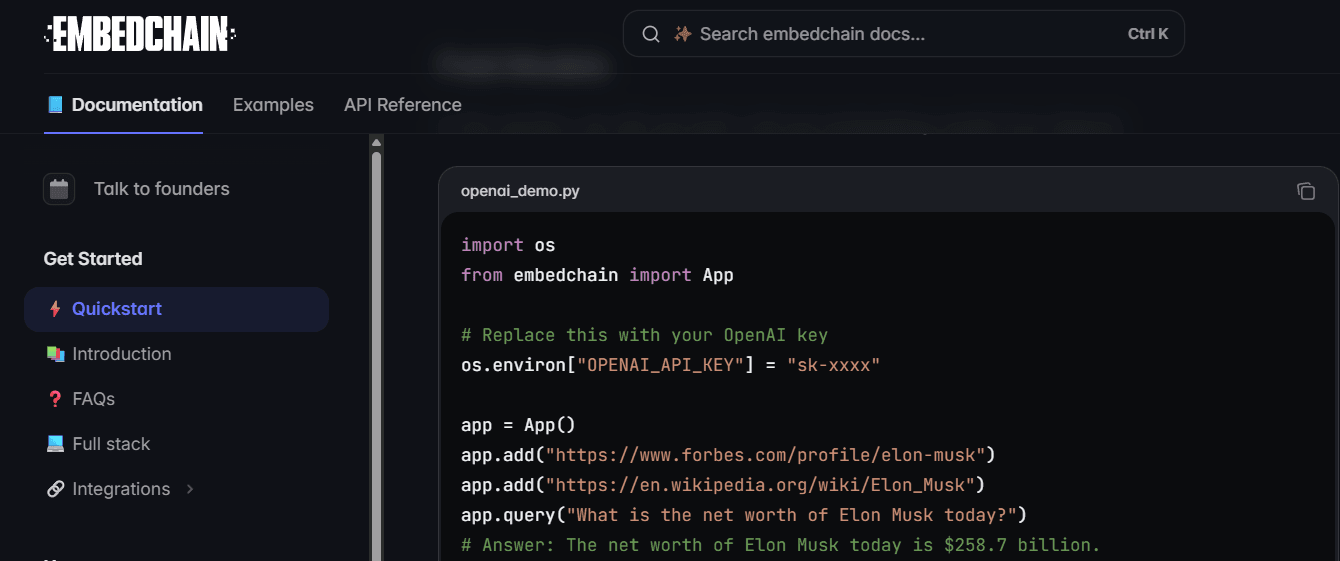

6. Embedchain

Embedchain is a minimal, open-source RAG framework for building ChatGPT-like apps over your data. It condenses ingestion, indexing, embedding, and querying into just a few lines of Python, which makes it a good fit if you want to prototype or deploy lightweight bots.

Source: Embedchain documentation

Key features:

- Embedchain can ingest PDFs, websites, YouTube transcripts, and more using one-liners.

- Has a built-in chat interface that connects to your indexed documents.

- Provides support for embedding providers like OpenAI, Cohere, Hugging Face, and Ollama.

Pricing:

Embedchain is entirely free and open source. There are no paid tiers or hosted options available today.

Integrations:

It is built to be compatible with LangChain, ChromaDB, Supabase, FAISS, and other common vector stores.

Pros and cons (based on GitHub activity and community reviews):

Pros:

- Extremely easy to use and perfect for quick POCs and demos.

- It integrates smoothly with multiple embedding models.

Cons:

- Has limited customization compared to LangChain or Haystack.

- Not designed for large-scale or multi-user production.

- It has community support only.

Best for:

- Solo developers who want fast results.

- Hackathon teams that can easily ship working demos without spending hours reading docs.

- Educators (great for tutorials) and learners.

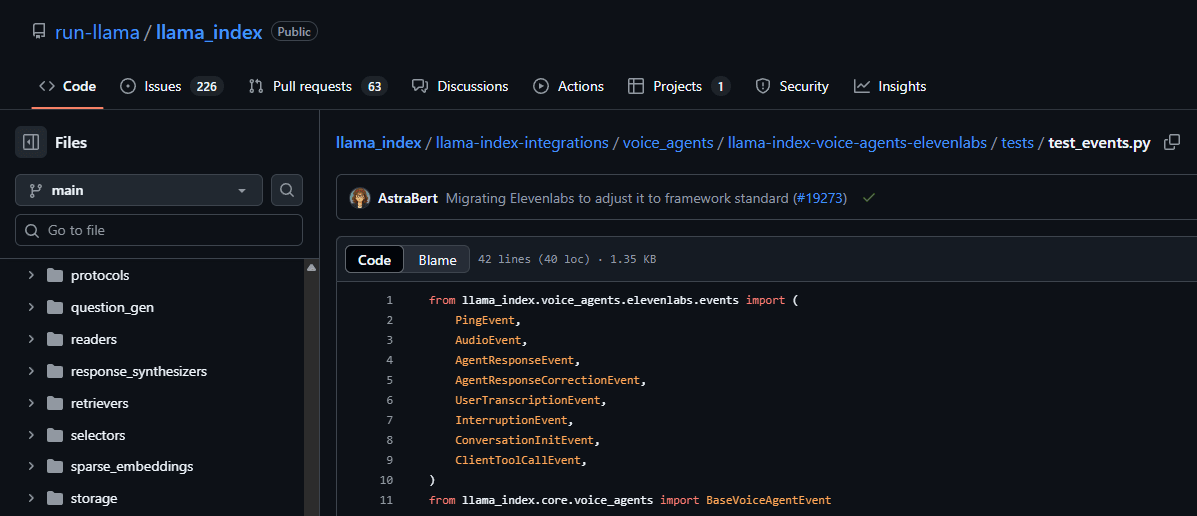

7. LlamaIndex

LlamaIndex (formerly GPT Index) is an open-source framework that helps connect external data to LLMs for building context-aware applications. It streamlines how you load, transform, index, and query data.

Source: LlamaIndex GitHub

Key features:

- Ingests data from PDFs, websites, Notion, SQL, and more with structured or unstructured loaders.

- You can create composable indexes for fast, hybrid, or metadata-filtered retrieval.

- It helps you build advanced agents using built-in tool calling, routing, and memory across documents.
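Metadata-filtered retrieval, one of the index modes mentioned above, narrows the candidate set by exact metadata matches before ranking by vector similarity. LlamaIndex provides its own metadata-filter objects for this; the sketch below is framework-free, and the `Node` class, `filtered_search` function, and toy 2-D embeddings are assumptions for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Node:
    text: str
    embedding: list[float]
    metadata: dict


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two dense vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def filtered_search(nodes: list[Node], query_embedding: list[float],
                    filters: dict, top_k: int = 3) -> list[Node]:
    """Apply exact-match metadata filters first, then rank survivors by similarity."""
    allowed = [n for n in nodes if all(n.metadata.get(k) == v for k, v in filters.items())]
    allowed.sort(key=lambda n: cosine(n.embedding, query_embedding), reverse=True)
    return allowed[:top_k]


nodes = [
    Node("2024 pricing update", [0.9, 0.1], {"year": 2024, "team": "sales"}),
    Node("2023 pricing update", [0.95, 0.05], {"year": 2023, "team": "sales"}),
    Node("2024 onboarding guide", [0.1, 0.9], {"year": 2024, "team": "hr"}),
]
hits = filtered_search(nodes, [1.0, 0.0], filters={"year": 2024})
print([n.text for n in hits])  # ['2024 pricing update', '2024 onboarding guide']
```

Note that the 2023 document is the closest vector match but never appears, because the filter runs first. That is the behavior you want when answers must come from a specific year, tenant, or source.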

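Pricing:

The LlamaIndex framework itself is open source and free. The paid plans below are for LlamaCloud, LlamaIndex's managed document parsing and indexing service: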

- Free plan

- Starter ($50/month)

- Pro ($500/month)

- Enterprise (custom pricing)

Integrations:

LlamaIndex is compatible with LangChain, OpenAI, Anthropic, Cohere, Pinecone, Weaviate, Qdrant, FAISS, and MongoDB.

It also supports deployment via Docker, Flask, and FastAPI.

Pros and cons (based on community feedback):

Pros:

- Has built-in tools for ingestion, indexing, retrieval, and agent routing.

- Strong flexibility with hybrid, metadata, and vector search.

- Ideal for building fully custom pipelines with minimal glue code.

Cons:

- Python is the primary ecosystem; the TypeScript port (LlamaIndex.TS) offers fewer integrations, which limits use outside Python.

- Users complain that documentation sometimes lags behind feature development.

- It can feel complex for new users compared to simpler RAG libraries.

Best for:

- Full-stack developers who want to go from raw data to a custom LLM application without glue code.

- ML researchers.

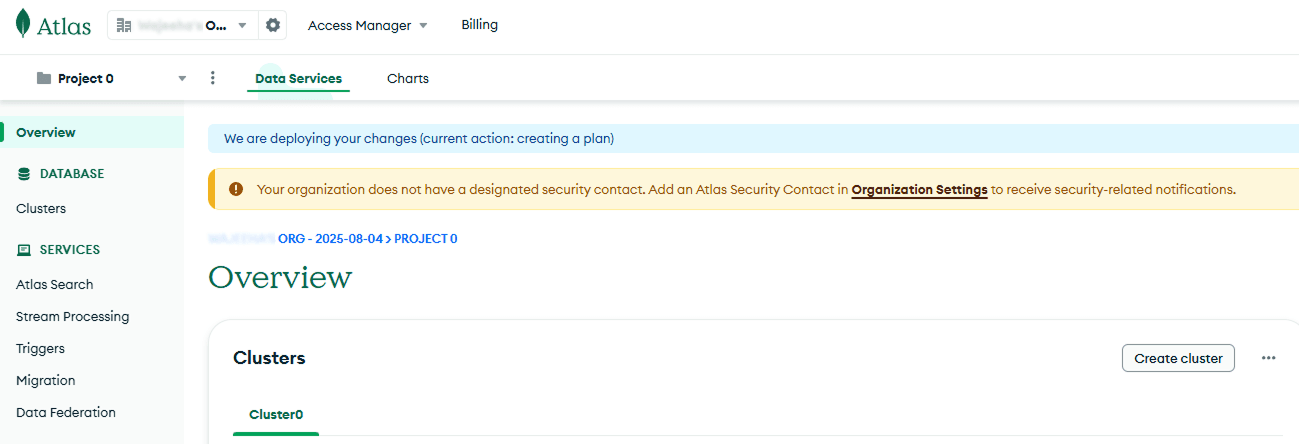

8. MongoDB

MongoDB Atlas Vector Search brings semantic retrieval directly into your primary database, allowing you to store and query vector embeddings alongside application data.

Source: MongoDB Atlas documentation

Key features:

- Native HNSW-based vector search built into the Atlas cluster.

- Query embeddings and metadata in a single pipeline using Atlas Aggregations.

- Unified search, filter, and ranking logic across structured and unstructured data.

Pricing:

MongoDB Atlas Vector Search is included in Atlas usage. You only pay based on your cluster tier and workload.

Atlas Plans:

- Free ($0/hour)

- Flex ($0.011/hour, up to $30/month)

- Dedicated ($0.08/hour)

- Enterprise Advanced (custom pricing)

Integrations:

MongoDB Atlas Vector Search integrates with frameworks like LangChain and LlamaIndex and works through MongoDB's Node.js and Python drivers.

It also supports common embedding models (OpenAI, Hugging Face) and RAG pipelines via LangChain’s MongoDB retriever module.

Pros and cons (based on user reviews and technical guides):

Pros:

- Eliminates the need for a separate vector database since everything is kept in Atlas.

- Has flexible aggregation pipelines for combining filters and embedding queries.

- Works natively with existing application collections.

Cons:

- Tied to MongoDB Atlas, so it's less suitable if your data stack is elsewhere.

- Vector performance tuning may require DB-level expertise.

- Still maturing compared to standalone vector DBs like Pinecone or Weaviate.

Best for:

- MongoDB power users.

- Backend engineers.

- Ops-focused teams who want minimal moving parts and full observability.

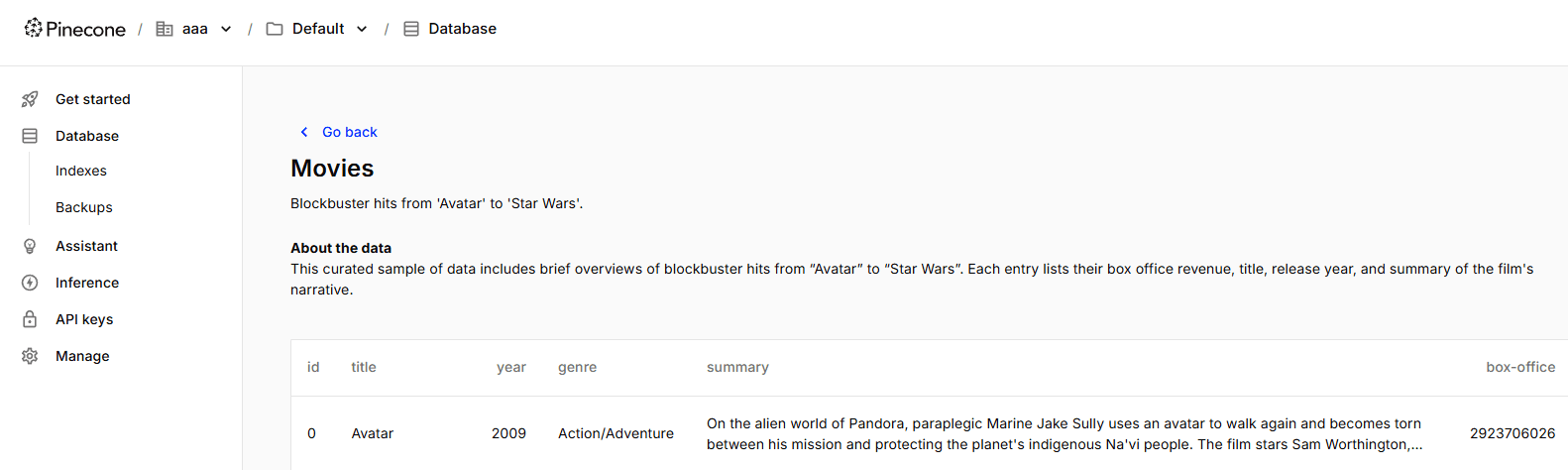

9. Pinecone

Pinecone is a managed, cloud-native vector database built for high-performance similarity search in AI applications, especially for RAG workflows. It offers hybrid search capabilities (dense + sparse vectors), serverless scaling, and global reliability, all accessible through a simple REST API.

Source: Pinecone

Key features:

- Serverless vector indexing that scales resources automatically with demand.

- Hybrid search that combines sparse and dense embeddings for precision.

- Includes metadata filtering and namespaces for tenant isolation and multi-tenancy.

- Real-time index updates and optional reranking models for better relevance.
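Sparse-dense hybrid scoring is often expressed as a convex combination controlled by a weight `alpha`, similar in spirit to the weighting described in Pinecone's hybrid-search documentation. The sketch below computes that weighted score directly; it is not the Pinecone client, and the toy vectors (with sparse dimension `7` standing in for a rare keyword) are assumptions.

```python
def dense_dot(a: list[float], b: list[float]) -> float:
    """Dot product of two dense embedding vectors."""
    return sum(x * y for x, y in zip(a, b))


def sparse_dot(a: dict[int, float], b: dict[int, float]) -> float:
    """Dot product of sparse vectors stored as {dimension_index: weight}."""
    return sum(w * b[i] for i, w in a.items() if i in b)


def hybrid_score(q_dense: list[float], q_sparse: dict[int, float],
                 d_dense: list[float], d_sparse: dict[int, float],
                 alpha: float = 0.5) -> float:
    """alpha=1.0 is pure semantic (dense) scoring; alpha=0.0 is pure keyword (sparse)."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")
    return alpha * dense_dot(q_dense, d_dense) + (1 - alpha) * sparse_dot(q_sparse, d_sparse)


q_dense, q_sparse = [1.0, 0.0], {7: 1.0}
docs = {
    "x": ([0.9, 0.1], {}),          # semantically close, lacks the exact keyword
    "y": ([0.2, 0.9], {7: 2.0}),    # contains the exact keyword
}


def best_match(alpha: float) -> str:
    """Return the document id with the highest hybrid score for this alpha."""
    return max(docs, key=lambda name: hybrid_score(q_dense, q_sparse, *docs[name], alpha=alpha))


print(best_match(1.0))  # x
print(best_match(0.2))  # y
```

Tuning `alpha` per use case matters: exact identifiers such as error codes or SKUs favor a lower value, while paraphrased natural-language questions favor a higher one.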

Pricing:

- Starter (Free)

- Standard ($50/month minimum)

- Enterprise ($500/month minimum)

- Dedicated (Custom)

Integrations:

Pinecone integrates smoothly with tools like LangChain, LlamaIndex, Supabase, and leading embedding models from OpenAI, Cohere, and Hugging Face.

Pros and cons (based on product docs and community feedback):

Pros:

- Has a serverless model that simplifies scaling and infrastructure.

- Very low latency (<100 ms) even at millions of vectors.

- Comes with strong hybrid search and filtering capabilities.

Cons:

- The costs increase with usage, and reads and writes can become expensive at scale.

- Some users worry about vendor lock-in with a proprietary, API-only service.

- Limited options for self-hosting or non-cloud deployment.

Best for:

- Founders and tech leads.

- Startup ML teams that want to put embeddings into production quickly without hiring an infra team.

- Product engineers.

10. Vespa

Vespa is an open-source, high-performance AI platform originally developed at Yahoo and now maintained by Vespa.ai. It combines traditional and multimodal search, vector similarity, and machine learning ranking in a unified system optimized for real-time, large-scale RAG and retrieval applications.

Source: Vespa

Key features:

- Native support for hybrid search combining lexical, vector, and tensor-based ranking.

- On-node ML inference to run ranking models directly where data resides.

- Real-time indexing, where writes become searchable almost immediately, and automatic horizontal scaling.

- Custom ML ranking pipelines and support for geospatial or faceted search.
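Multi-phase ranking is what keeps ML ranking affordable at this scale: a cheap first-phase score runs on every match, and an expensive model only re-scores the best few. Vespa configures this in rank profiles with first-phase and second-phase expressions; the Python below only mimics the idea, and `rerank_count` is named after Vespa's `rerank-count` setting without reproducing its exact semantics. The `bm25` and `model_score` fields are hypothetical precomputed values.

```python
from typing import Callable


def two_phase_rank(docs: list[dict], first_phase: Callable[[dict], float],
                   second_phase: Callable[[dict], float], rerank_count: int = 100,
                   top_k: int = 10) -> list[dict]:
    """Score every doc with a cheap function, then re-score only the best
    `rerank_count` candidates with an expensive one and return the top_k."""
    shortlist = sorted(docs, key=first_phase, reverse=True)[:rerank_count]
    return sorted(shortlist, key=second_phase, reverse=True)[:top_k]


calls = 0  # counts how often the "expensive" model actually runs


def expensive_model(doc: dict) -> float:
    """Stand-in for a costly ML ranker; here it just reads a precomputed score."""
    global calls
    calls += 1
    return doc["model_score"]


corpus = [{"id": i, "bm25": float(i), "model_score": float(-i)} for i in range(1000)]
top = two_phase_rank(corpus, first_phase=lambda d: d["bm25"],
                     second_phase=expensive_model, rerank_count=50, top_k=3)
print([d["id"] for d in top], calls)  # [950, 951, 952] 50
```

Only 50 of the 1,000 documents reach the expensive model, yet the final order reflects that model's judgment within the shortlist.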

Pricing:

- Self-managed: pricing upon request

- Startup: Starts at $0.05/vCPU per hour

- Basic: Starts at $0.10/vCPU per hour

- Commercial: Starts at $0.145/vCPU per hour

- Enterprise: Starts at $0.18/vCPU per hour, with a $20,000 monthly minimum

Integrations:

Vespa works with LLM providers and with frameworks like LangChain and deepset's Haystack.

Pros and cons (based on official docs and benchmark studies):

Pros:

- Best suited for massive-scale search and ML-enriched retrieval at ultra-low latency.

- Has a flexible ranking architecture that supports multi-phase models and real-world signals.

- Proven in production at firms like Spotify, Yahoo, and Farfetch.

Cons:

- Infrastructure and operational complexity are higher than with managed tools.

- There’s a steeper learning curve that requires understanding search/RAG internals.

- Self-hosting requires significant setup, so teams without that capacity need to use the paid Vespa Cloud service.

Best for:

- Infra-heavy engineering teams

- ML platform builders deploying ranking models across massive datasets

- Enterprise architects

What are the best open-source RAG tools?

The best open-source RAG tools are:

- Meilisearch: A top pick for its blazing-fast performance, typo tolerance, and support for hybrid search (BM25 + vectors). It’s easy to deploy, has a robust REST API, and is maintained by a strong open-source community.

- LlamaIndex: A flexible framework for connecting external knowledge sources to LLMs. It supports composable pipelines and complex retrieval logic and integrates with various vector stores.

- Haystack: Built for production, Haystack supports modular pipelines, real-time indexing, and advanced RAG techniques. It’s ideal for developers needing complete control.

What are the best RAG search engine tools?

The best RAG search engine tools are:

- Meilisearch: It combines traditional full-text and vector search with advanced ranking rules, synonyms, typo tolerance, and blazing-fast response times through a developer-first API.

- Vespa: Designed for large-scale use, Vespa supports real-time indexing, hybrid search, on-node ML inference, and custom ranking pipelines.

- Pinecone: A cloud-native vector database with serverless scaling, metadata filtering, and support for billions of vectors. Popular for high-precision similarity search in RAG apps.

What makes a good RAG search engine? Speed, hybrid search (dense + sparse), customizable ranking, metadata filtering, and seamless integration with LLMs.

Meilisearch, for example, supports semantic tuning, multilingual tokenization, and customizable APIs, which are all critical features in RAG pipelines.
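One common way to merge keyword and vector results into a single hybrid ranking is Reciprocal Rank Fusion (RRF), shown below as a swapped-in illustration rather than the method any specific tool on this list uses. Each ranked list contributes `1 / (k + rank)` per document, and `k = 60` is a widely used default; the document IDs are hypothetical.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge several ranked lists of doc IDs: each list adds 1 / (k + rank)."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=scores.__getitem__, reverse=True)


keyword_hits = ["doc_a", "doc_b", "doc_c"]  # e.g. from BM25
vector_hits = ["doc_c", "doc_a", "doc_d"]   # e.g. from embedding similarity
print(reciprocal_rank_fusion([keyword_hits, vector_hits]))
# ['doc_a', 'doc_c', 'doc_b', 'doc_d']
```

RRF only looks at ranks, so it sidesteps the problem that BM25 scores and cosine similarities live on completely different scales.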

What are the best RAG tools for enterprises?

The best RAG tools for enterprises are:

- Meilisearch: It offers SOC2 compliance, enterprise SLAs, SAML SSO, and fast search with customizable ranking. These are the essentials for scaling secure, multilingual AI search.

- Vespa: It is trusted by global brands due to its low-latency hybrid search. Vespa is ideal for RAG pipelines because it supports on-node ML inference and advanced ranking logic.

- MongoDB Atlas Vector Search: It lets you search vector embeddings and structured data together within your existing MongoDB collections. This is perfect for companies already using MongoDB.

How to choose a RAG tool

Start by identifying the specific goals of your application. Are you focused on accurately retrieving answers from documents, building a chatbot, or scaling retrieval-augmented generation across enterprise data?

Different tools are built for different contexts, so matching their strengths to your requirements is key. Let’s look at some key features:

- Retrieval method: Decide which retrieval method you need: keyword-based retrieval (like BM25), vector-based semantic search, or a hybrid approach.

- Performance and scalability: Assess how well the tool performs with large datasets. Can it handle fast queries across millions of documents? Look for low-latency indexing, real-time updates, and support for high concurrent user traffic.

- Ease of integration: Always favor tools with clean APIs, SDKs, or native support for frameworks like LangChain or LlamaIndex, especially if you follow a build-your-own RAG setup. Developer experience significantly affects how fast you can go from prototype to production.

- Hosting and deployment options: Depending on your infrastructure, choose between open-source tools you can self-host or cloud-native services that offer managed scalability, monitoring, and backups.

- Cost and licensing: Budget always matters. Some tools are free and open-source, others offer usage-based pricing, and some require commercial licenses for enterprise deployment.

- Community and support: Strong documentation, responsive support, and a vibrant user base are signs of a tool that won’t leave you stuck when things break.
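If you are weighing keyword retrieval against semantic search, it helps to see what BM25 actually computes. The sketch below is a small in-memory Okapi BM25 scorer using the common Lucene-style IDF variant; `k1 = 1.5` and `b = 0.75` are typical defaults assumed for illustration, and production engines add tokenization, stemming, and inverted indexes on top.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


class BM25:
    """Okapi BM25 keyword scoring over a small in-memory corpus."""

    def __init__(self, docs: list[str], k1: float = 1.5, b: float = 0.75):
        self.docs = [tokenize(d) for d in docs]
        self.k1, self.b = k1, b
        self.avgdl = sum(len(d) for d in self.docs) / len(self.docs)
        df = Counter(term for d in self.docs for term in set(d))  # document frequency
        n = len(self.docs)
        # Lucene-style IDF: the "+ 1" inside the log keeps weights positive.
        self.idf = {t: math.log((n - f + 0.5) / (f + 0.5) + 1) for t, f in df.items()}

    def score(self, query: str, index: int) -> float:
        """Sum each query term's IDF, weighted by saturated, length-normalized TF."""
        doc = self.docs[index]
        tf = Counter(doc)
        total = 0.0
        for term in tokenize(query):
            if term not in tf:
                continue
            f = tf[term]
            norm = f + self.k1 * (1 - self.b + self.b * len(doc) / self.avgdl)
            total += self.idf[term] * f * (self.k1 + 1) / norm
        return total

    def search(self, query: str, top_k: int = 3) -> list[int]:
        """Return the indexes of the top_k highest-scoring documents."""
        ranked = sorted(range(len(self.docs)), key=lambda i: self.score(query, i), reverse=True)
        return ranked[:top_k]


bm25 = BM25([
    "hybrid search blends keyword and vector retrieval",
    "vector databases store embeddings",
    "bm25 is a keyword ranking function",
])
print(bm25.search("keyword ranking", top_k=1))  # [2]
```

BM25 rewards exact term overlap and rare terms, but it scores zero for a synonym it has never seen, which is the gap that vector search and hybrid approaches are meant to close.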

Ready to power up your RAG pipeline?

Your final decision should strike the perfect balance between speed, flexibility, transparency, and control. Your tool of choice will make or break the experience, whether you’re building a chatbot, a knowledge assistant, or a context-smart search engine.

Great RAG pipelines start with great retrieval. That’s why the tools on this list, from developer-first search engines to full-scale AI orchestration platforms, focus on delivering relevant answers, fast. But not every team needs the same setup.