9 advanced RAG techniques to know & how to implement them

Discover 9 key advanced RAG techniques and learn which tools can help you implement them.

In this article

RAG techniques help optimize the accuracy and flexibility of RAG pipelines. From smarter chunking to hybrid search and context distillation, these methods are key to delivering faster, more accurate responses in real-world AI applications.

Why do we need them?

Because basic RAG systems often struggle with noisy results, irrelevant context, or poor ranking.

When the retrieval system cannot surface relevant information, users lose trust, and your LLM wastes time processing the wrong context.

This guide breaks down nine advanced RAG techniques worth knowing:

- Text chunking

- Reranking

- Leveraging metadata

- Hybrid search

- Query rewriting

- Autocut

- Context distillation

- Fine-tuning the large language model (LLM)

- Fine-tuning the embedding models

You’ll also learn how to implement these strategies using tools like Meilisearch, LangChain, and vector databases, plus how to evaluate which ones actually move the needle.

Let’s get into it.

1. Text chunking

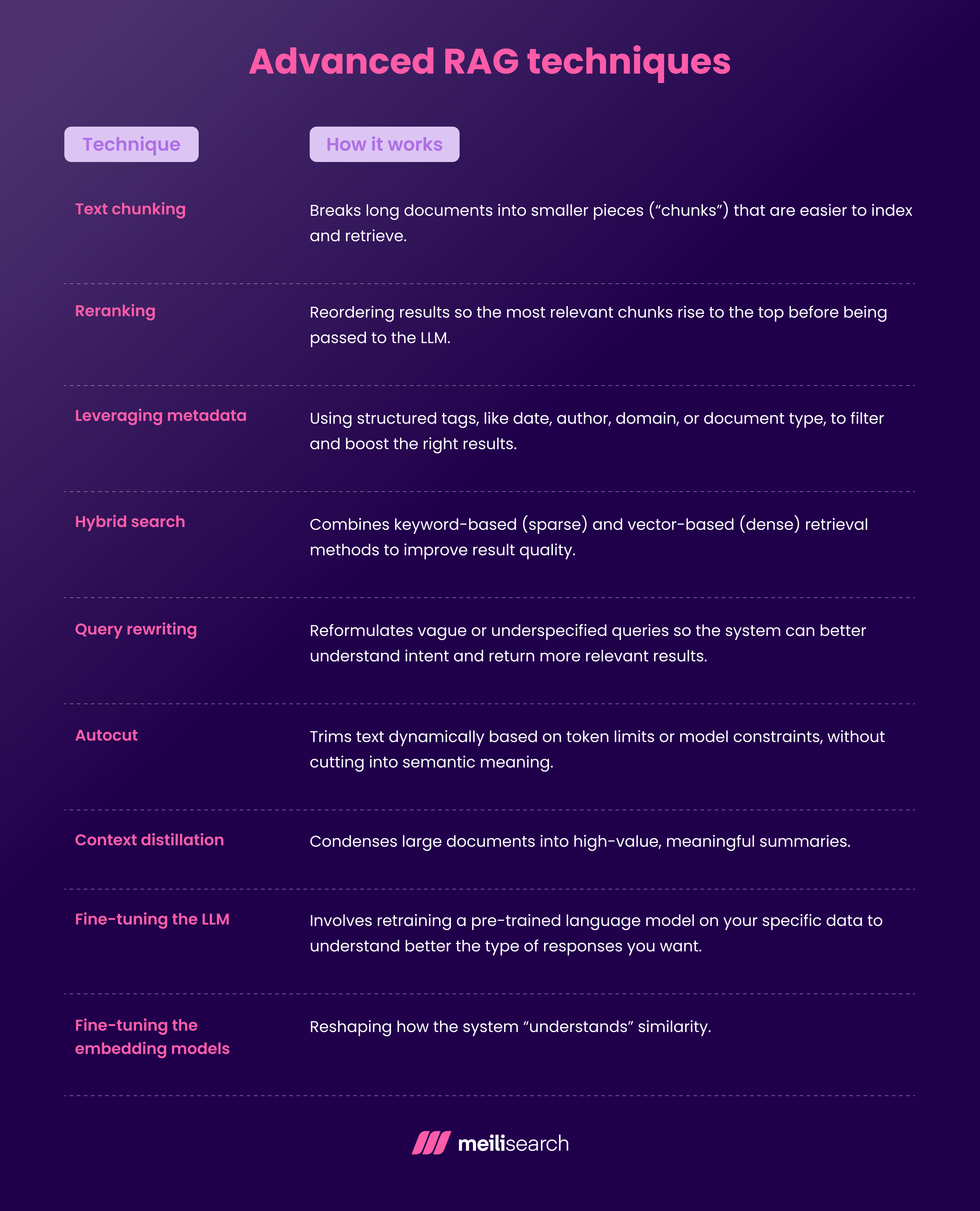

Text chunking breaks long documents into smaller pieces (‘chunks’) that are easier to index and retrieve.

Instead of pulling in whole documents, RAG pipelines work with these chunks, and the quality of the chunks directly affects retrieval accuracy and answer clarity.

If your chunks are too long, the artificial intelligence model will get distracted. On the other hand, if they’re too short, context will be lost. The right balance helps deliver the best results.

Here are a few types of chunking:

- Simple chunking: Splits documents into fixed-size blocks with a bit of overlap (e.g., 35 characters with a five-character overlap). Easy to implement, ignores semantic structure, and may split key ideas across chunks.

- Semantic chunking: Groups sentences by similarity using embeddings and cosine thresholds. Keeps related ideas together and improves retrieval precision, but requires embedding models like BERT and higher-performance computing.

- Language model–based chunking: Uses an LLM to segment text into self-contained propositions. Produces highly coherent results but is computationally expensive and may require fine-tuning.

Not to anyone’s surprise, each method has its trade-offs. Semantic or LLM-based chunking will likely pay off if you work with product manuals or technical docs. For simpler content, fixed-size chunks will do the job just fine.

2. Reranking

In RAG systems, the initial retriever pulls in a wide net of retrieved information, some good and some not so good. Reranking is the process of reordering results so that the most relevant information rises to the top before being passed to the LLM.

Here are a few common approaches to reranking:

- Cross-encoder reranking: Feeds the user query and each candidate chunk into a transformer model (like BERT) that scores how well they match. Very accurate but slow and resource-intensive. Best when quality matters more than latency.

- Score-based reranking: Adjusts order using heuristics or relevance scores (e.g., BM25 boosts, keyword search matches). Fast and lightweight, but less nuanced.

Both techniques are valid, but your choice depends on how much latency you can afford.

3. Leveraging metadata

Leveraging metadata means using structured tags, like date, author, domain, or document type, to filter and boost the right results.

Metadata filtering is a low-lift, high-impact technique since it doesn’t require any heavy computing.

Here are its key sub-types:

- Date filtering removes outdated data and only focuses on recent documents. This is useful in fast-changing fields like finance. However, this focus on recency can sometimes exclude essential documents.

- Author/source filtering boosts retrieved documents from trusted individuals or sources. There’s a very low chance of hallucinations, but you might also miss out on less well-known but highly relevant data.

- Document type filtering includes filters based on format (e.g., guide vs. blog vs. policy doc). While it can help match user intent, there’s an added risk of over-filtering if metadata is inconsistent.

4. Hybrid search

Hybrid search combines keyword-based (sparse) and vector-based (dense) retrieval methods to improve result quality.

This dramatically increases relevance, especially in edge cases or ambiguous user queries.

Here are the two main approaches to hybrid search:

- Score fusion: Combines results from sparse and dense methods using weighted scores. Balanced accuracy. Needs careful tuning to avoid noisy results.

- Result merging: Retrieves top results from both methods, then merges and reranks them. Simple to implement. Risk of duplication or inconsistent ranking logic.

5. Query rewriting

Query rewriting refines user input before the retrieval process. It reformulates vague or underspecified queries so the retrieval system can better understand intent and return more relevant results. This is especially helpful when dealing with short or typo-heavy queries.

Most modern generative AI systems use various algorithms to upgrade queries behind the scenes. Here are a few types:

- Synonym expansion: This technique automatically adds equivalent terms to cover more variations. While this may improve recall, it can also introduce some irrelevant matches.

- Spelling correction: Any typos or misspellings are automatically fixed. It boosts success for casual users, but there’s a chance it might ‘correct’ niche or valid jargon.

- Intent clarification: This process transforms a broad query into a clearer one using rules or machine learning. It improves relevance but requires training data and adds complexity.

6. Autocut

Autocut trims text dynamically based on token limits or model constraints, without cutting into semantic meaning. There’s a method for removing the less relevant context without displacing the critical info.

This matters because many LLMs have strict token limits. If you overload the context window, the AI model may hallucinate, skip over key info, or underperform.

Here are two common strategies for autocutting:

- Scored trimming helps rank content by importance and cuts the lowest-scoring sections. While the prioritization is smart, it needs a scoring mechanism in place.

- Rule-based trimming applies fixed rules (e.g., drop intro paragraphs or footnotes). It’s easy to implement, but it lacks adaptability to nuanced content.

7. Context distillation

Parsing large documents at every instance isn’t optimal for high-level RAG systems. Context distillation helps condense large documents into high-value, meaningful summaries.

As you know, LLMs only require the right information, not all the information. More context doesn’t necessarily mean better results; it might even dilute the final output. Context distillation helps the model stay focused and accurate when it comes to response generation.

Two ways to approach this:

- Summarization-based distillation uses extractive or abstractive summarization to retain key points. Produces concise context but may miss details.

- Question-driven distillation tailors context based on the specific question being asked. Highly relevant, but depends on accurate query understanding.

8. Fine-tuning the LLM

Fine-tuning the LLM involves retraining a pre-trained language model on your specific datasets to understand better the type of generated responses you want.

It’s useful when prompt engineering alone can’t guide the model well enough, especially in regulated industries or domain-specific contexts.

- Supervised fine-tuning: Retrains with labeled Q&A, code, or instructions. Offers precision and control but requires curated data and resources.

- Reinforcement learning from human feedback: Humans rate outputs, and the model learns to favor preferred responses. Aligns with values or brand tone but is resource-intensive.

9. Fine-tuning the embedding models

Embedding models turn documents into vectors for document retrieval. Fine-tuning them means reshaping how the retrieval model ‘understands’ similarity, which is essential if off-the-shelf embeddings underperform in your field.

- Contrastive learning: Trains embeddings to pull semantically similar text closer and push dissimilar text apart. Improves retrieval accuracy but requires labeled positives and negatives.

- Domain adaptation: Continues training on your corpus to align embeddings with your field. Easier to set up, especially with unlabeled data, but risks overfitting.

Fine-tuning the LLM and embedding models gives you complete control over both retrieval and generating responses, setting the foundation for a high-performing, domain-aware RAG model.

What are advanced RAG techniques?

Advanced RAG techniques go beyond the basics of retrieval-augmented generation. They are more detailed and nuanced when it comes to chunking, retrieval, and ranking content. The end goal, however, is the same: to generate better and more relevant search results.

Whether you're optimizing hybrid search, rewriting queries, or tweaking metadata, these approaches build on the core principles of retrieval-augmented generation to scale both accuracy and efficiency.

Why are advanced RAG techniques needed?

The problem with basic RAG setups is that they often struggle with complex queries. Also, they aren’t enough when scaling across diverse datasets or maintaining relevance in high-stakes situations.

As user expectations grow and LLMs become more central to production-grade workflows, brute-force retrieval is no longer enough. We need smart AI applications to understand nuance and prioritize recent data from trusted sources.

Advanced techniques help you move from ‘retrieve and hope’ to ‘retrieve with intent.’ They address key pain points like hallucination, latency, and content mismatch, while unlocking improved precision and performance.

How can you implement advanced techniques in RAG?

You can implement advanced retrieval techniques using different tools, libraries, and frameworks.

Some of the RAG tools you can use to implement advanced retrieval-augmented generation techniques are the following:

- Meilisearch

- Weaviate

- LangChain

- Pinecone

Let’s learn a little more about them:

1. Meilisearch

Developed with user experience in mind, Meilisearch is an open-source tool that is perfect for implementing advanced RAG pipelines. It supports customizable ranking rules and handles large document collections easily.

You can implement hybrid search (combining keyword-based and vector search relevance) using Meilisearch’s hybrid search plugin. This balances semantic search understanding with traditional precision.

POST /indexes/products/search { "q": "secure laptop", "vector": [0.128, 0.98, 0.45, …], "attributesToRetrieve": ["title", "description"], "limit": 10 }

When dealing with large documents, you can boost search quality by indexing them as structured chunks:

{ "title": "AI Compliance Guide", "content_chunk": "RAG systems rely on fast indexing and retrieval...", "chunk_id": 12 }

Meilisearch’s performance and easy integration make it a go-to solution for building smart RAG pipelines without overcomplicating things.

2. Weaviate

Weaviate is a vector-native search engine built for high-performance semantic search. It integrates with transformers and embedding models directly, making it a good fit for RAG implementations.

3. LangChain

LangChain is a Python/JavaScript framework that chains together LLMs, retrieval components, and tools. It makes RAG workflows modular and configurable.

You can plug in reranking models, write your own query rewriters, and manage chunking pipelines all within one orchestrated flow.

4. Pinecone

Pinecone is a managed vector database built for high-scale similarity search. It’s useful for projects that need lightning-fast, scalable vector retrieval, reranking, and time-filtered queries.

Combined with Meilisearch or LangChain, Pinecone adds scalability and production-readiness to your RAG setup.

How can you evaluate advanced techniques in RAG systems?

To evaluate advanced RAG techniques, you need to test how each one impacts retrieval quality. The aim is to achieve high precision, context, low latency, and solid real-world usability.

To assess effectiveness, focus on metrics like:

- Retrieval accuracy: Are the most contextually relevant chunks returned? This affects everything downstream, including hallucination rate and answer quality.

- Latency: Do techniques like reranking or hybrid search slow down your system? Evaluate end-to-end response time.

- Precision vs. recall: Are you returning too few (or too many) relevant documents? You want the sweet spot that balances coverage with focus.

- User satisfaction: How often do end users get exactly what they need? Consider running A/B tests or manual evals.

When you’re optimizing for speed without sacrificing quality, Meilisearch’s fast and accurate hybrid engine stands out.

Why smarter RAG matters in practice

Advanced RAG techniques are ultimately about making information retrieval smarter, not just faster. They help systems move beyond brute-force search toward context-aware retrieval that scales across domains.

By layering strategies such as hybrid search, reranking, and context distillation, teams can design RAG pipelines that deliver more precise, reliable, and efficient results in production settings.

Build efficient RAG systems with Meilisearch

When performance, relevance, and speed matter most, Meilisearch delivers. Its lightweight architecture and intuitive features make plugging into advanced RAG workflows easy. It doesn’t matter whether you're chunking large volumes of text, tuning hybrid search, or boosting results with metadata.