How do you search in a database with LLMs?

Discover how to search a database with LLMs using MCP, RAG, and SQL translation. Unlock fast, natural language access to your business data now!

Your database contains the answer to every customer question, yet your support team still spends hours digging through tables and documentation.

While most companies treat database querying as a technical skill reserved for developers and analysts, LLMs are quietly revolutionizing how we interact with structured data. The question isn't whether LLMs can search databases, it's which approach will transform your team from data archaeologists into instant answer machines.

We will present three distinct methods, each solving different pieces of the puzzle: direct protocol integration through MCP, conversational RAG systems, and natural language-to-SQL translation.

The core problem: bridging natural language and structured data

A sales manager late on a Thursday might stare at a dashboard and want a simple answer: “Which customers bought more than $10,000 of product X last quarter?” The data exists but hides behind tables and cryptic field names. Without SQL skills or a data analyst, that answer remains out of reach.

This problem affects teams in SaaS, ecommerce, and enterprise settings daily. The knowledge exists, but accessing it feels complicated.

This gap between how we ask questions and how data is stored slows decision-making, frustrates non-technical staff, and pulls engineers away from their main tasks to handle one-off data requests. In fast-paced environments, this disconnect feels like a locked door when you need to get out quickly.

The database could answer in seconds if it understood the query. Instead, users wrestle with filters, dropdowns, or raw SQL. This leads to missed insights, slow responses, and a sense that data is more obstacle than asset.

Large language models (LLMs) are changing this. But LLMs don’t automatically understand your database structure, business rules, or data specifics. Without careful design, they can make mistakes, misinterpret questions, or reveal sensitive information.

Approach 1: MCP, the integration glue

In databases and AI, every new tool or data source often needs its own custom connector. Engineers end up assembling adapters, plugins, and scripts. MCP acts as a universal plug for AI and search integrations.

What is MCP?

MCP (Model Context Protocol) standardizes communication between your AI assistant and your data silos. It’s not just another API wrapper. Think of MCP as the USB-C of AI integrations: one protocol, many possibilities. Instead of building a new connector for every tool, you plug into MCP. Your language model can then discover, search, and act across your entire stack.

The protocol defines how AI models communicate with external systems, structure requests, and discover available actions, without hard-coding every integration. This flexibility lets you swap out your LLM provider or database without overhauling your setup.
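To make "structure requests and discover available actions" concrete, here is a minimal sketch of the two JSON-RPC 2.0 messages an MCP client typically sends: one to list a server's tools and one to call a tool. The tool name `search` and its arguments are assumptions for illustration; a real server advertises its own tool names and input schemas.

```python
import json
from typing import Optional


def jsonrpc_request(request_id: int, method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request, the envelope MCP messages travel in."""
    message = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        message["params"] = params
    return message


# 1. Discovery: the client asks the server which tools it exposes.
list_tools = jsonrpc_request(1, "tools/list")

# 2. Invocation: the client calls one tool with structured arguments.
#    "search" and its arguments are hypothetical placeholders.
call_tool = jsonrpc_request(
    2,
    "tools/call",
    {"name": "search", "arguments": {"query": "API", "limit": 5}},
)

print(json.dumps(list_tools))
print(json.dumps(call_tool, indent=2))
```

Because discovery happens at runtime, the client does not need to hard-code which actions a given server supports.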

Example: Searching in Meilisearch with Cursor

Presenting Meilisearch and Meilisearch MCP

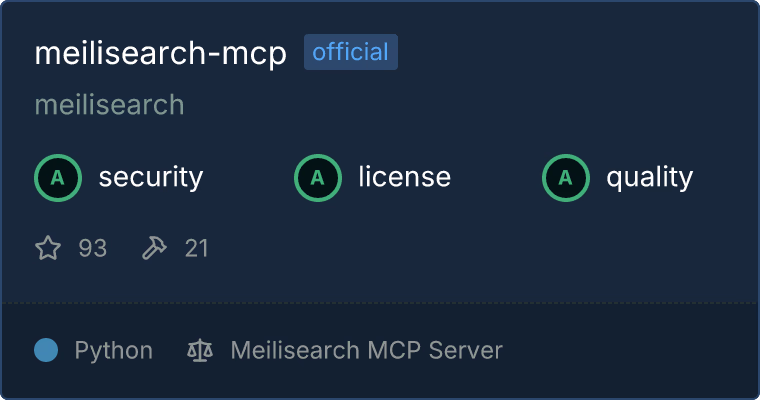

Meilisearch is a blazing-fast, open-source search engine that emphasizes speed, relevance, and developer experience. It handles both keyword and vector-based search, making it ideal as the foundation for Retrieval-Augmented Generation (RAG) applications.

To bridge Meilisearch with AI assistants and LLM tools more seamlessly, Meilisearch now offers an MCP server. Instead of building bespoke integrations, the MCP server exposes a standard protocol and a rich set of tools.

Installing and connecting takes minutes and works with any MCP-capable client, such as Claude, Cursor, or custom in-browser integrations. This unlocks new scenarios, like chatting directly with your Meilisearch data, instant analytics dashboards, or automated knowledge bots.

ChatGPT will soon be compatible with MCP, empowering you to connect external tools, APIs, and private databases directly into your workflows. This feature—rolling out to Pro, Team, and Enterprise users—will enable seamless, secure integration with apps like Google Drive, Box, OneDrive, and even custom data sources.

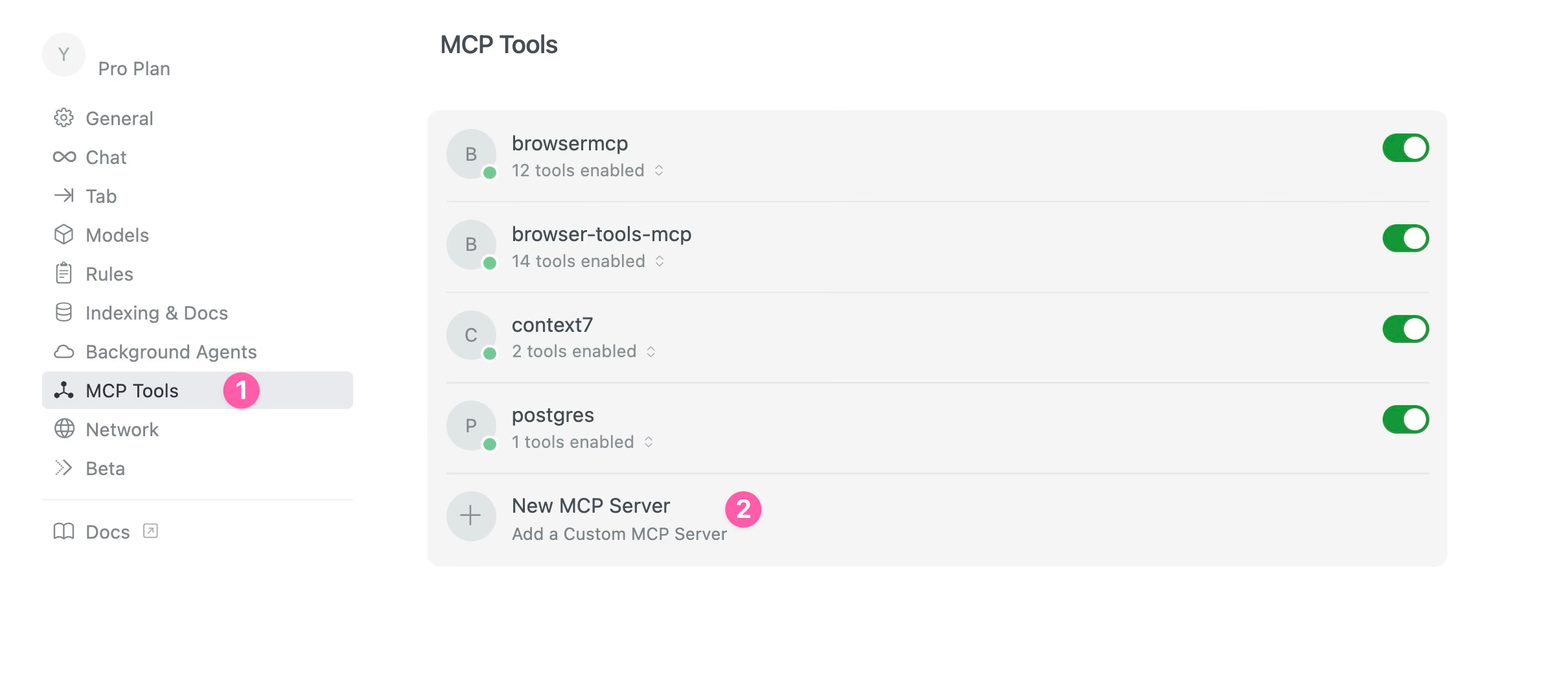

The process to connect Cursor to Meilisearch's MCP

- Open Cursor and access the MCP tool. Press Cmd + Shift + J, open the MCP tool, and click “New MCP server.”

- Configure the Meilisearch MCP server. Add this entry under the `mcpServers` key of your `mcp.json` file, replacing the URL with your Meilisearch instance URL and `key` with your own key:

  ```json
  "meilisearch": {
    "command": "uvx",
    "args": ["-n", "meilisearch-mcp"],
    "env": {
      "MEILI_HTTP_ADDR": "https://ms-ad593910acfe-24699.fra.meilisearch.io/",
      "MEILI_MASTER_KEY": "key"
    }
  }
  ```

This connects the LLM securely to a live Meilisearch instance with full control.

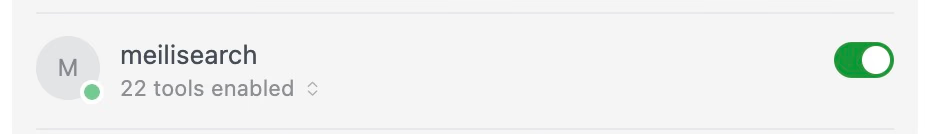

- Ensure that the MCP has been added properly and is ready to be used.

-

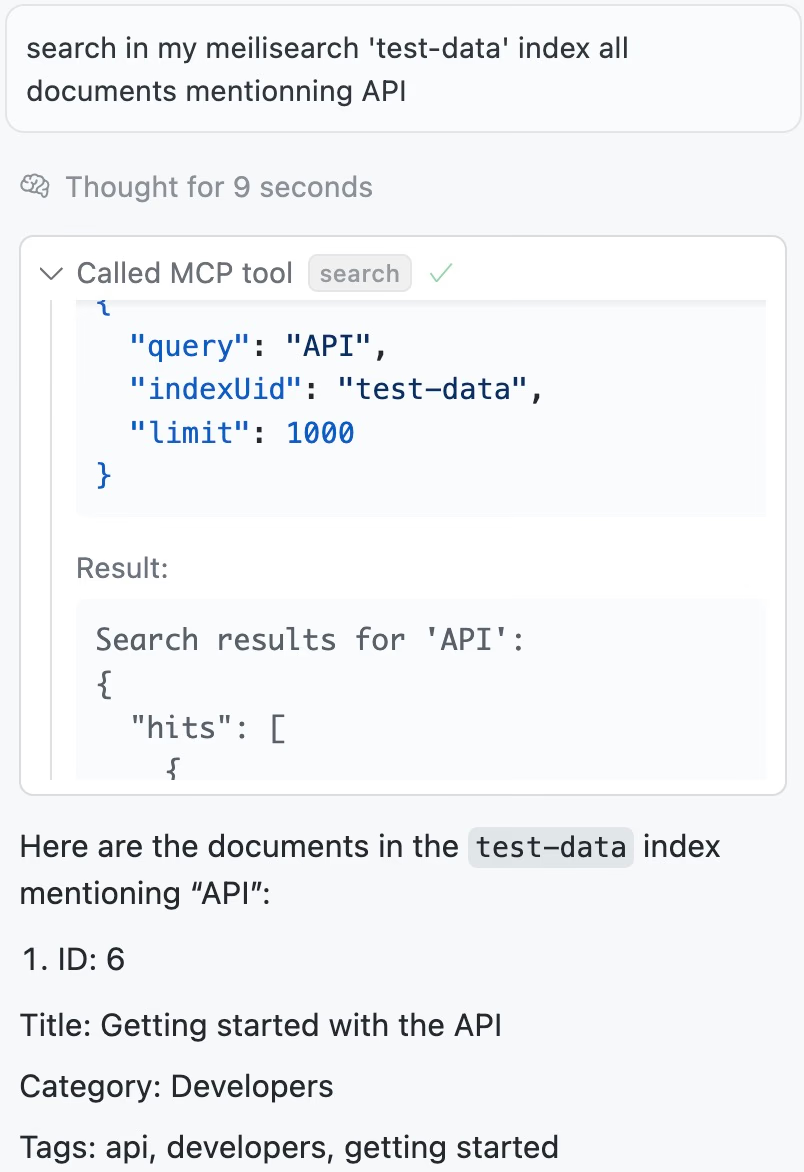

Then, issue a natural language search. In the Cursor chat, you could type "search in my meilisearch index all documents mentioning API"

- MCP translates the request. The LLM uses the MCP server’s capabilities to convert the request into a structured search call. The Meilisearch MCP server runs the query, applies filters or limits, and returns results, with no manual SQL or custom API calls.

- Results appear in the chat. The developer sees a list of relevant documents with context and metadata, ready to use or refine.
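For a sense of what a "structured search call" might look like once the natural language request has been translated, here is a rough sketch that builds (but does not send) a request to Meilisearch's search route. The local URL, the `docs` index name, and the `key` value are assumptions for illustration, and the MCP server may well construct its calls differently.

```python
import json
import urllib.request


def build_search_request(base_url: str, api_key: str, index_uid: str,
                         query: str, limit: int = 5) -> urllib.request.Request:
    """Build, without sending, a POST request to a Meilisearch index's search route."""
    url = f"{base_url.rstrip('/')}/indexes/{index_uid}/search"
    body = json.dumps({"q": query, "limit": limit}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
    )


# Hypothetical instance URL, key, and index name.
request = build_search_request("http://localhost:7700", "key", "docs", "API")
print(request.get_method(), request.full_url)

# To run the search against a live instance:
# with urllib.request.urlopen(request) as response:
#     hits = json.load(response)["hits"]
```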

This workflow extends far beyond just searching. With MCP, you can:

- Search documents across any connected data source

- Update and edit records directly via the LLM interface

- Monitor background tasks and track system status

- Manage API keys and adjust access permissions, all in one place

For developers, this integration is powerful: it speeds up debugging and troubleshooting by letting you collaborate with an AI that has direct access to your Meilisearch data. Instead of manually writing queries, digging through documentation, and copy-pasting results into Cursor, you can ask natural language questions and get immediate, contextual responses from your actual search index.

Approach 2: Create a RAG chatbot using Flowise

Building a chatbot that understands and retrieves information from your company’s knowledge base used to feel like piecing together a puzzle with missing parts. Flowise changes that by offering a visual platform for orchestrating RAG systems. Every block you drag and drop brings you closer to a chatbot that truly knows your business.

The Flowise RAG pipeline: from chaos to clarity

Flowise’s Document Store is the unsung hero. Instead of juggling scripts, you upload your PDFs, set chunking rules, and let Flowise handle the rest. The interface is visual and intuitive, but the mechanics are solid.

You can:

- Split documents into overlapping chunks

- Attach metadata for filtering

- Test retrieval with a click

Flowise shows you if your data is indexed correctly right in the GUI.
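If you are curious what "overlapping chunks with metadata" means in practice, the idea can be sketched in a few lines. This is an illustration of the general technique rather than Flowise's own implementation; the function name, the character-based sizes, and the metadata fields are all assumptions.

```python
def split_into_chunks(text: str, chunk_size: int = 500, overlap: int = 50) -> list:
    """Split text into character chunks where each chunk repeats the
    last `overlap` characters of the previous one."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    step = chunk_size - overlap
    chunks = []
    start = 0
    while start < len(text):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the current chunk already reaches the end of the text
        start += step
    return chunks


# Attach metadata to each chunk so retrieval can filter on it later.
# "source" and "chunk_index" are hypothetical field names.
records = [
    {"text": chunk, "source": "handbook.pdf", "chunk_index": index}
    for index, chunk in enumerate(split_into_chunks("abcdefghij", chunk_size=4, overlap=2))
]
print([record["text"] for record in records])
```

The overlap means a sentence that straddles a chunk boundary still appears whole in at least one chunk, at the cost of storing some text twice.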

Embeddings, vector stores, and the power of hybrid search

Flowise doesn’t just store your text—it transforms it. Every chunk becomes a vector, a mathematical fingerprint of its meaning. These vectors go into a vector database such as Pinecone, Qdrant, or Meilisearch, ready for semantic search.

Combining semantic (vector) search with classic keyword matching, known as hybrid search, can outperform keyword-only search. For example, a support agent typed “API throttling rules,” and the chatbot surfaced a section titled “Rate Limiting” buried deep in a 40-page PDF. The agent didn’t know the exact phrase, but vector search made the connection.
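The toy example below illustrates why blending the two signals helps. The three-dimensional vectors are hand-written stand-ins for real embeddings, and the weighted blend is a simplified scoring formula chosen for illustration, not the ranking algorithm of any particular engine.

```python
import math

# Hand-written toy "embeddings"; a real system would get these from an embedding model.
DOCUMENTS = [
    {"title": "Rate Limiting", "text": "Requests above the quota are rejected",
     "vector": [0.9, 0.1, 0.0]},
    {"title": "API Keys", "text": "Create and rotate API keys",
     "vector": [0.1, 0.9, 0.2]},
]
QUERY_TEXT = "API throttling rules"
QUERY_VECTOR = [0.8, 0.3, 0.1]  # assumed embedding of the query


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def keyword_score(query, text):
    """Fraction of query words that appear verbatim in the text."""
    query_words = query.lower().split()
    text_words = set(text.lower().split())
    return sum(word in text_words for word in query_words) / len(query_words)


def rank(semantic_ratio):
    """Return titles sorted by a weighted blend of semantic and keyword scores."""
    def score(doc):
        semantic = cosine(QUERY_VECTOR, doc["vector"])
        keyword = keyword_score(QUERY_TEXT, doc["title"] + " " + doc["text"])
        return semantic_ratio * semantic + (1 - semantic_ratio) * keyword
    return [doc["title"] for doc in sorted(DOCUMENTS, key=score, reverse=True)]


print(rank(semantic_ratio=0.0))  # keyword matching only
print(rank(semantic_ratio=0.5))  # equal blend of both signals
```

With keyword matching alone, the "API Keys" page wins because it shares the word "API" with the query. Once the semantic score is blended in, "Rate Limiting" moves to the top even though it shares no words with the query.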

How to build a chatbot with Flowise in minutes

Building your own AI chatbot with Flowise is a visual drag-and-drop experience, but under the hood it’s powered by sophisticated document handling, embeddings, and custom conversational logic. Here’s a step-by-step recipe to get your first intelligent bot running—no code (or frustration) required.

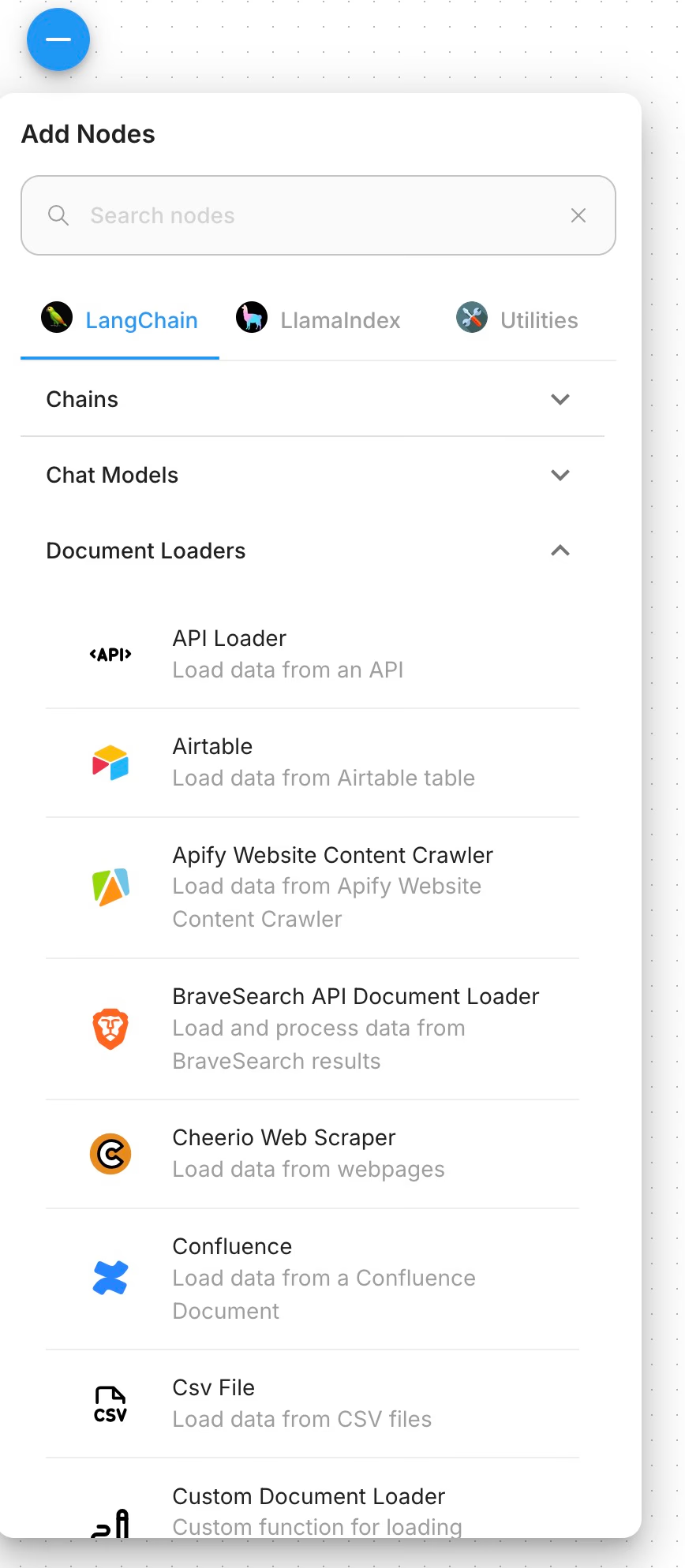

1. Add a document loader

Start by bringing your knowledge base into Flowise. Click “Add Node,” choose from a wide range of loaders—PDF, TXT, CSV, Google Drive, Confluence... For most internal wikis, the “Folder” or “PDF File” node is ideal. Provide the file path or link, authenticate if required, and hit connect.

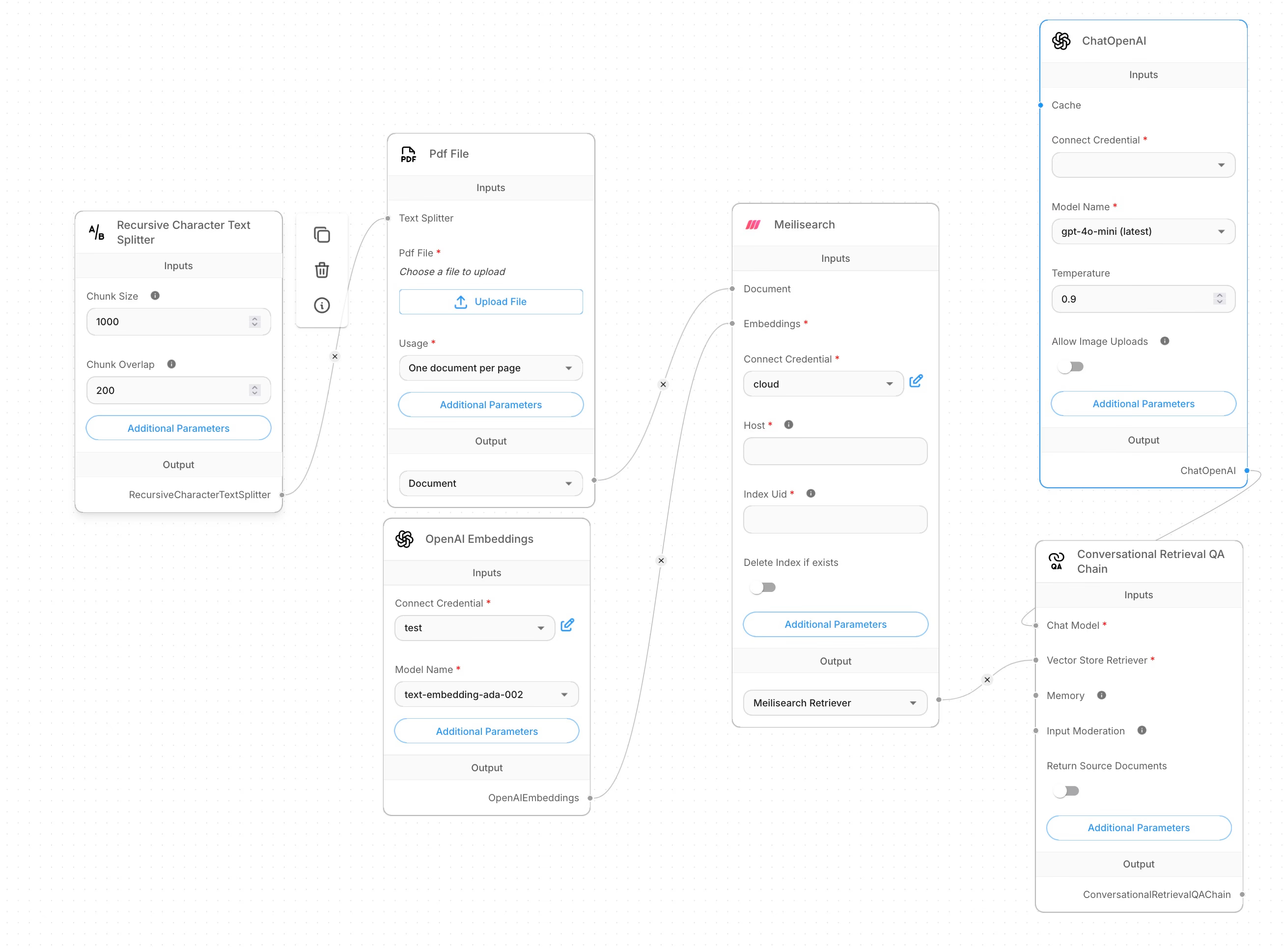

2. Split your documents for smarter search. Long documents overwhelm chatbots. Insert a “Text Splitter” node after your loader. Configurable chunk sizes (typically 500–1000 characters or tokens, depending on the splitter) let you strike the right balance: smaller chunks improve answer accuracy, larger ones keep more context. Choose how you want to split (by paragraph, sentence, or Markdown).

3. Generate embeddings. Drag in an “OpenAI Embeddings” or “HuggingFace Embeddings” node, dropping your API key into the settings.

4. Plug in a vector store. Now, you need those vectors to be searchable. Snap in a Meilisearch vector store node.

5. Add your language model. Add a “ChatOpenAI” or similar LLM node to power your chatbot’s answers. Paste in your chosen model’s API key, set temperature (creativity control), and tuning parameters. Most people start with gpt-4o or gpt-4o-mini.

6. Create the conversational logic

Drag over the “Conversational Retrieval QA Chain” node. This connects your LLM with the vector store so user queries first fetch relevant context. Enable the “Return Source Documents” option for debugging or citations. Memory settings (e.g., last 5 exchanges) can be configured for richer back-and-forth.

7. Test directly in Flowise. Name and save your Flow. Use Flowise’s built-in “Predict” panel to start chatting! Tweak chunk sizes, memory, or prompts until the answers sound just right.

8. Deploy and collect your endpoint. When satisfied, click the Code icon in Flowise to get your API endpoint. This connects your chatbot to any front-end—Slack, Typebot, or a custom web app. Enable authentication before deploying to production for security.
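Once you have the endpoint, calling it from your own code could look roughly like this. The sketch assumes the endpoint follows Flowise's prediction route (`/api/v1/prediction/<chatflow-id>`) and accepts a JSON body with a `question` field; the host, chatflow ID, and API key are placeholders, so copy the exact URL from the Code dialog in your own instance.

```python
import json
import urllib.request


def build_prediction_request(base_url, chatflow_id, question, api_key=None):
    """Build, without sending, a POST request to a Flowise prediction endpoint."""
    url = f"{base_url.rstrip('/')}/api/v1/prediction/{chatflow_id}"
    headers = {"Content-Type": "application/json"}
    if api_key:
        # Only needed when authentication is enabled on the chatflow.
        headers["Authorization"] = f"Bearer {api_key}"
    body = json.dumps({"question": question}).encode("utf-8")
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


# Hypothetical host, chatflow ID, and key.
request = build_prediction_request(
    "http://localhost:3000", "your-chatflow-id", "What are our rate limits?", api_key="key"
)
print(request.get_method(), request.full_url)

# To send it to a running Flowise instance:
# with urllib.request.urlopen(request) as response:
#     answer = json.load(response)
```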

The final flow should look like this:

Pro tip: Try loading a few common file types together (for example: a “Folder” with PDFs and a “CSV File” of FAQs), so your chatbot synthesizes answers from different knowledge silos—all managed visually in Flowise.

This no-code pipeline is robust enough for team support bots, product Q&As, and internal helpdesks. Flowise turns formidable LLM tech into instant business value—all through simple connections and a few API keys.

Approach 3: LLM-to-SQL/NoSQL – when the model becomes your data whisperer

When natural language meets structured data, it creates both opportunity and challenges. Business teams have long wanted to ask simple questions like, “Show me all orders from last week over $500,” and get answers without writing SQL. Large language models (LLMs) now make this possible. Sometimes the results are accurate; other times, the model is overly confident.

How it works: the art of translation

LLM-to-SQL (or NoSQL) translation acts like a skilled interpreter. You type a question in plain English, and the LLM, given your database schema and a few example queries as context, creates a query the database understands.

For example, a product manager might ask:

“List all customers who upgraded to the Pro plan in the last 60 days.”

With the right context, the LLM generates:

SELECT * FROM customers WHERE plan = 'Pro' AND upgraded_at >= NOW() - INTERVAL '60 days';

This process depends on clear prompt design, detailed schema documentation, and sometimes trial and error. The LLM needs to know table names, field types, and relationships. If the information is incomplete or unclear, the model guesses—sometimes correctly, sometimes not.
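One way to keep the prompt in sync with the database is to generate the schema description from the database itself instead of typing it by hand. The sketch below does this for SQLite; the helper names and the prompt wording are assumptions, and other databases expose their schema through different catalog queries.

```python
import sqlite3


def describe_schema(conn: sqlite3.Connection) -> str:
    """List each user table with its column names and declared types."""
    lines = []
    tables = conn.execute(
        "SELECT name FROM sqlite_master "
        "WHERE type = 'table' AND name NOT LIKE 'sqlite_%' ORDER BY name"
    ).fetchall()
    for (table,) in tables:
        # Each row is (cid, name, type, notnull, dflt_value, pk).
        columns = conn.execute(f'PRAGMA table_info("{table}")').fetchall()
        described = ", ".join(f"{name} {col_type}" for _, name, col_type, *_ in columns)
        lines.append(f"Table {table}: {described}")
    return "\n".join(lines)


def build_prompt(schema: str, question: str) -> str:
    """Combine the live schema with the user's question."""
    return (
        "You are a SQL expert. Use only these tables and columns:\n"
        f"{schema}\n\n"
        f"Question: {question}\n"
        "Return a single SQL query and nothing else."
    )


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, plan TEXT, upgraded_at TEXT)")

schema = describe_schema(conn)
print(build_prompt(schema, "List all customers who upgraded to the Pro plan in the last 60 days."))
```

Regenerating the schema on every request, or on every deploy, means the model is never working from a stale description of your tables.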

A real-world scenario: the perils and promise

Consider a support team at a mid-sized ecommerce platform. They ask, “Which users reported payment issues in March?” The LLM, connected to the ticketing database and given schema plus sample queries, produces:

SELECT user_id FROM tickets WHERE issue_type = 'payment' AND created_at >= '2025-03-01' AND created_at < '2025-04-01';

The team gets answers in seconds, with no data analyst needed. But if the schema changed last week or if “payment” is stored as “billing,” the LLM might reference fields that no longer exist or silently return incomplete results. Without guardrails, it could also generate queries that expose sensitive data.

Risks and how to stay out of trouble

LLM-to-SQL offers great potential but carries risks. Common issues include:

- Schema drift: If the database changes and the LLM isn’t updated, it may reference nonexistent fields.

- Ambiguity: Natural language can be vague. For example, “recent customers” could mean last week, month, or quarter.

- Security: Without guardrails, the LLM might generate queries accessing restricted tables or leaking sensitive data.

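- SQL injection: Classic injection through string concatenation is less of a concern, but a crafted question can steer the model into producing destructive or data-leaking SQL, so generated queries should be treated as untrusted input.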

To reduce risks, systems should:

- Validate generated SQL against an allowlist of tables and columns.

- Enforce row-level permissions.

- Log every query for auditing.

- Use fine-tuned models trained on specific schemas and query patterns to limit wild guesses.
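Some of these safeguards can be pushed down to the database layer so they hold even if the generated SQL slips past earlier checks. As a minimal sketch, assuming SQLite and a hypothetical allowlist of readable columns, the connection below permits only `SELECT` statements over allowlisted columns and logs every attempt. Production databases usually offer their own mechanisms for this, such as read-only roles and row-level security.

```python
import logging
import sqlite3

logging.basicConfig(level=logging.INFO)
audit_log = logging.getLogger("sql_audit")

# Hypothetical allowlist: the only (table, column) pairs generated SQL may read.
ALLOWED_COLUMNS = {("orders", "id"), ("orders", "amount"), ("orders", "status")}


def read_only_authorizer(action, arg1, arg2, db_name, source):
    """Permit SELECT statements that read allowlisted columns; deny everything else."""
    if action == sqlite3.SQLITE_SELECT:
        return sqlite3.SQLITE_OK
    if action == sqlite3.SQLITE_READ and (arg1, arg2) in ALLOWED_COLUMNS:
        return sqlite3.SQLITE_OK
    return sqlite3.SQLITE_DENY


def run_guarded(conn, sql):
    """Log the query, then run it; return None if the database rejects it."""
    audit_log.info("LLM-generated SQL: %s", sql)
    try:
        return conn.execute(sql).fetchall()
    except sqlite3.DatabaseError as error:
        audit_log.warning("Rejected: %s", error)
        return None


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, amount REAL, status TEXT)")
conn.execute("CREATE TABLE users (id INTEGER, email TEXT)")
conn.execute("INSERT INTO orders VALUES (1, 120.0, 'paid'), (2, 2500.0, 'paid')")
conn.commit()

# Install the guard only after setup, since it also blocks writes.
conn.set_authorizer(read_only_authorizer)

print(run_guarded(conn, "SELECT id, amount FROM orders WHERE amount > 1000"))
print(run_guarded(conn, "SELECT email FROM users"))  # column not on the allowlist
print(run_guarded(conn, "DELETE FROM orders"))       # writes are denied
```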

A glimpse under the hood: code in action

Here’s a simple example of setting up an LLM-to-SQL pipeline with schema awareness:

```python
# Pseudocode for clarity: `llm`, `db`, and `validate_sql` are placeholders
# for your LLM client, database connection, and query validator.

prompt = """
You are a SQL expert. The table 'orders' has columns: id, user_id, amount, created_at, status.
Write a SQL query to find all orders over $1000 in the last 30 days.
Return only the SQL, with no explanation.
"""

response = llm.generate(prompt)
sql_query = response.text.strip()

# Validate the query before execution
if validate_sql(sql_query, allowed_tables=["orders"]):
    results = db.execute(sql_query)
else:
    raise ValueError("Query not allowed")
```

This example highlights that validation acts as a seatbelt. Without it, a hallucinated table name or a destructive statement could reach the database unchecked.
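For readers who want to run the whole loop locally, here is a self-contained variant under some loud assumptions: `fake_llm` is a stand-in that returns a canned query instead of calling a model, the database is an in-memory SQLite instance, and `validate_sql` is a deliberately naive regex check (it misses comma-separated table lists, for example). Treat it as a starting point, not a production validator.

```python
import re
import sqlite3


def fake_llm(prompt: str) -> str:
    """Stand-in for a real LLM call; always returns the same SQLite query."""
    return ("SELECT id, amount FROM orders "
            "WHERE amount > 1000 AND created_at >= datetime('now', '-30 days')")


def validate_sql(sql_query: str, allowed_tables: set) -> bool:
    """Naive check: a single SELECT statement that names only allowed tables."""
    statement = sql_query.strip().rstrip(";")
    if ";" in statement or not re.match(r"(?i)select\b", statement):
        return False
    tables = re.findall(r"(?i)\b(?:from|join)\s+([a-z_][a-z0-9_]*)", statement)
    return bool(tables) and all(table.lower() in allowed_tables for table in tables)


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER, user_id INTEGER, amount REAL, created_at TEXT, status TEXT)"
)
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?, datetime('now', ?), 'paid')",
    [(1, 7, 1500.0, "-2 days"),    # recent and large: should match
     (2, 8, 90.0, "-2 days"),      # recent but small
     (3, 9, 4000.0, "-90 days")],  # large but too old
)

sql_query = fake_llm("Find all orders over $1000 in the last 30 days.")

# Validate the query before execution.
if not validate_sql(sql_query, allowed_tables={"orders"}):
    raise ValueError("Query not allowed")
results = conn.execute(sql_query).fetchall()
print(results)
```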

Perspective: when to use, when to pause

LLM-to-SQL works best when the schema is stable, data is well-understood, and risks are controlled. It boosts productivity for internal dashboards, ad hoc reporting, and customer support. However, it’s not a universal solution. For mission-critical tasks or strict compliance, human review and layered security remain essential.

The best systems combine LLM-to-SQL with other methods like vector search for unstructured data, MCP for tool integration, and RAG for context-rich answers. Each method has strengths, and blending them ensures the right tool fits each task.

Ultimately, LLM-to-SQL empowers teams to ask better questions faster. With proper safeguards, it makes data accessible without requiring SQL expertise.

Tooling & ecosystem: the real-world stack for LLM-powered search

The world of LLM-powered search resembles a city: each part has its own role, and the best solutions combine different tools. Developers and tech leads face the challenge of not only choosing the right tools but also understanding how they work together as needs evolve, data grows, and users expect fast, clear answers.

The cast of characters

The ecosystem includes several layers, each with notable tools and unique strengths:

- LLMs: OpenAI, Claude, Mistral, Cohere. Each has its own style; Claude excels at tool use, OpenAI offers broad knowledge.

- Orchestration: LangChain, LlamaIndex, Semantic Kernel. These connect components, route queries, and manage context.

- Vector databases: Meilisearch, Pinecone, Weaviate, Chroma. Meilisearch combines classic and AI search, Pinecone focuses on pure vector search, and Weaviate supports schema-rich search.

- SQL/NoSQL databases: PostgreSQL, MySQL, MongoDB, Firebase. Reliable and structured but not designed for semantic search.

- Protocols: MCP, OpenAPI, LangChain agents. MCP acts like a “USB-C” for AI integrations (plug and play without custom adapters).

- Interfaces: Next.js, Gradio, Streamlit, LangServe. These handle user interaction, where speed and user experience matter.

- No-code/low-code platforms: Flowise, Voiceflow. They enable non-coders to build flows and bots.

Each tool offers something unique. For example, Meilisearch provides hybrid search that combines full-text and vector similarity, making it approachable and powerful. Pinecone handles large-scale pure vector search, while Weaviate adds schema-rich capabilities.

The hidden costs and quiet superpowers

The exciting parts—LLMs, vector search, instant answers—often overshadow the behind-the-scenes work. Tasks like schema mapping, prompt engineering, and access control are essential.

Security is critical. LLMs can hallucinate, overreach, or leak sensitive data if not managed properly. A protocol like MCP helps by giving you a single, well-defined layer where you can enforce boundaries, log access, and swap tools without rewriting the stack. This creates a system with clear rules, avoiding confusion and dead ends.

When to reach for no-code, and when to go deep

Not every team can build everything from scratch. Platforms like Flowise and Voiceflow let product managers and support leads create RAG chatbots or workflow automations without coding. They are quick, flexible, and surprisingly capable.

However, as needs grow (custom chunking, advanced filters, multi-agent flows) you’ll value the control and transparency of a custom-built stack.

The art of blending: hybrid approaches

The best systems combine multiple methods. Using SQL for structured queries, Meilisearch for hybrid search, and an LLM for summarization delivers both speed and depth.

For example, you might:

- Use SQL to pull all orders from last week.

- Use vector search to find orders with unusual customer comments.

- Ask the LLM to summarize trends.
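The three steps above can be sketched end to end. Everything here is a stand-in chosen so the example runs anywhere: an in-memory SQLite table plays the orders database, a bag-of-words vector replaces a real embedding model, and `summarize` is a stub where an LLM call would go.

```python
import math
import sqlite3
from collections import Counter


def embed(text):
    """Toy bag-of-words vector; a real system would call an embedding model."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def summarize(rows):
    """Stub for an LLM call that would summarize the flagged orders."""
    return f"{len(rows)} order(s) flagged for review: " + ", ".join(str(row[0]) for row in rows)


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, created_at TEXT, comment TEXT)")
conn.executemany(
    "INSERT INTO orders VALUES (?, datetime('now', ?), ?)",
    [(1, "-2 days", "fast delivery thanks"),
     (2, "-3 days", "package arrived damaged and late"),
     (3, "-40 days", "damaged box")],
)

# Step 1: SQL narrows the data to last week's orders.
recent = conn.execute(
    "SELECT id, comment FROM orders "
    "WHERE created_at >= datetime('now', '-7 days') ORDER BY id"
).fetchall()

# Step 2: similarity search flags comments close to a description of "unusual".
probe = embed("damaged late broken missing")
flagged = [row for row in recent if cosine(probe, embed(row[1])) > 0.2]

# Step 3: the (stubbed) LLM turns the flagged rows into a summary.
print(summarize(flagged))
```

Each stage does what it is best at: SQL handles exact filters, similarity search handles fuzzy meaning, and the model only sees the small set of rows that survived both.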

This blend supports collaboration among engineers, analysts, and business users, even when the underlying tools differ.

The future of database search is conversational

The question of how to search a database with an LLM has three compelling answers: MCP for seamless tool integration, RAG systems for knowledge-driven applications, and direct SQL translation for structured queries.

Each approach serves different needs, but they all point toward a future where natural language becomes the primary interface between humans and data, making complex information accessible to anyone who can ask a question.