Corrective RAG (CRAG): Workflow, implementation, and more

Learn what Corrective RAG (CRAG) is, how it works, how to implement it, and why it improves accuracy in retrieval-augmented generation workflows.


Corrective RAG, or CRAG, is about fixing what standard RAG systems often get wrong.

Instead of allowing irrelevant or weak context into your large language model (LLM) pipeline, CRAG checks and corrects that context first, resulting in sharper, more accurate, and more trustworthy answers.

This guide will show you how CRAG works, why it matters, and where it fits into modern AI workflows. By the end, you’ll know when to use CRAG, where its limits are, and how it compares to other methods like speculative RAG.

Here’s what we’ll cover:

- What CRAG is, how it improves on traditional RAG, and why it matters in real LLM applications.

- The key components, the workflow that ties them together, and how to implement CRAG in practice.

- When CRAG should be used, what limitations to expect, and how it differs from speculative RAG.

Now, let’s examine the basics more closely.

What is corrective RAG (CRAG)?

Corrective retrieval-augmented generation, or CRAG, is a RAG variant designed to improve the reliability of AI-generated answers.

Instead of simply passing retrieved documents to a language model without any adjustments, CRAG introduces an additional step – it evaluates, filters, and refines the retrieved information before an answer is generated.

This reduces the risk of hallucinations caused by irrelevant or misleading context and makes the generated response more trustworthy.

Let’s see how CRAG improves on a traditional RAG workflow.

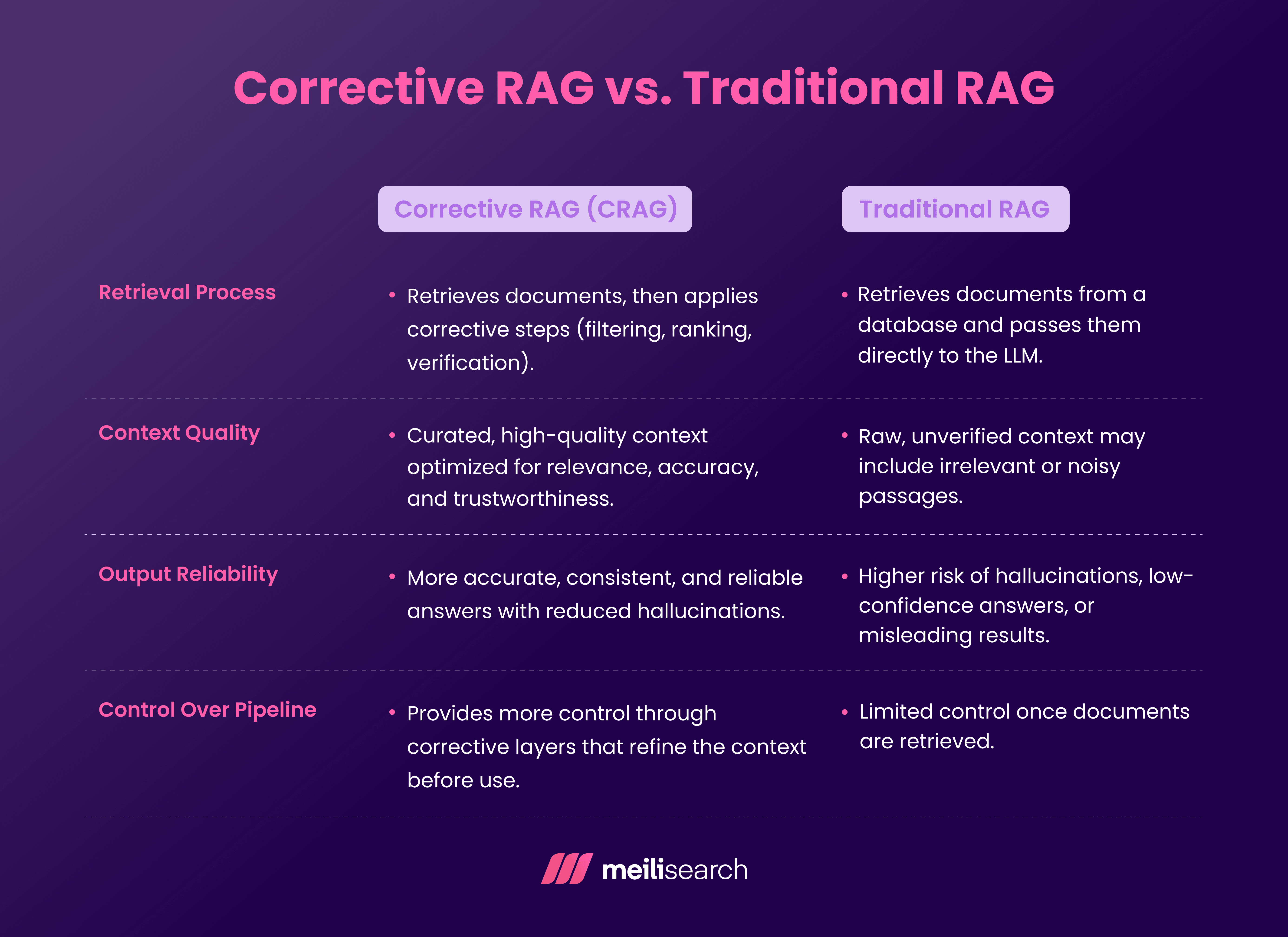

How does corrective RAG improve traditional RAG?

A traditional RAG pipeline retrieves documents from a knowledge base and feeds them directly to the LLM, which uses this raw context to generate answers.

In a traditional RAG pipeline, irrelevant, noisy, or incomplete retrieval results can sometimes slip through, leading to inaccurate outputs.

CRAG, on the other hand, applies corrective steps before the context reaches the language model. Retrieved documents are filtered, ranked, verified, and even enriched if needed before being passed on to the LLM.

That way, the LLM receives high-quality, relevant documents before it generates an answer.
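To make the contrast concrete, here is a minimal sketch. The `retrieve` and `grade` functions are hypothetical stand-ins: the retriever returns a fixed list, and the word-overlap grader is a placeholder for a real evaluator such as an LLM judge. The only difference between the two pipelines is the grading step in the middle.

```python
import re

def tokens(text):
    # Lowercased words and numbers, punctuation stripped
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query):
    # Hypothetical retriever that returns every candidate, relevant or not
    return [
        "Refund window is 30 days.",
        "CSV, JSON, API sync details.",
        "Upgrade rules and discounts.",
    ]

def grade(query, doc):
    # Placeholder evaluator: relevant if the document shares a word with the query
    return bool(tokens(query) & tokens(doc))

def rag_context(query):
    # Traditional RAG: whatever the retriever returns goes straight to the LLM
    return retrieve(query)

def crag_context(query):
    # CRAG: grade each document first and forward only those that pass
    return [doc for doc in retrieve(query) if grade(query, doc)]

print(len(rag_context("refund window")))  # 3
print(crag_context("refund window"))      # ['Refund window is 30 days.']
```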

Why is CRAG important in LLM applications?

CRAG makes LLMs more dependable for real-world use cases.

Here’s why CRAG matters in LLM applications:

- Reduces hallucinations by eliminating weak or misleading inputs before they reach the LLM.

- Improves user trust by consistently providing relevant, accurate, and explainable answers.

- Supports high-stakes use cases such as customer support, healthcare, finance, or enterprise search.

In short, CRAG is vital in production-ready systems that organizations can truly rely on. It also naturally connects with knowledge refinement strategies, helping ensure that external data sources are clean before being used.

What are the key components of corrective RAG?

Corrective RAG works by layering corrective mechanisms over traditional RAG's basic retriever–generator setup.

CRAG includes the following key components:

1. Retriever

The retriever is responsible for gathering candidate documents from the knowledge base.

In CRAG, retrieval isn’t limited to a single method. It often combines vector search, keyword search, and filters (hybrid retrieval) to reduce the chances of irrelevant results.

The retriever creates a pool of potential context, which can be evaluated and refined before the next step.
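One common way to merge keyword and vector results into a single candidate pool is reciprocal rank fusion (RRF). The sketch below is a generic illustration, not a description of how any particular engine fuses scores internally (Meilisearch's hybrid search handles the combination for you). `k=60` is a conventional default, and the result lists are made up.

```python
from collections import defaultdict

def reciprocal_rank_fusion(result_lists, k=60):
    """Merge several ranked lists of document IDs into one ranking.

    Each document earns 1 / (k + rank) from every list it appears in
    (ranks start at 1), and documents are sorted by their summed score.
    """
    scores = defaultdict(float)
    for results in result_lists:
        for rank, doc_id in enumerate(results, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest score first; ties broken by ID for a stable order
    return sorted(scores, key=lambda d: (-scores[d], d))

keyword_hits = ["3", "1", "2"]  # e.g. from full-text search
vector_hits = ["3", "2", "4"]   # e.g. from embedding similarity
print(reciprocal_rank_fusion([keyword_hits, vector_hits]))  # ['3', '2', '1', '4']
```

Documents that rank well in both lists rise to the top, which is why "2" overtakes "1" even though "1" ranked higher in keyword search alone.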

2. Evaluator

As the name suggests, the retrieval evaluator assesses the quality of the retrieved information. It assigns each document a relevance or confidence score and filters out low-confidence results.

This keeps poor context away from the generator. In some implementations, the evaluator runs a binary (yes/no) relevance check, for example a grade_documents-style function that grades each candidate result.
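A grade_documents-style evaluator can be sketched as a function that returns a yes/no grade per document. In this minimal version, assumed for illustration, the grade comes from the share of the question's content words found in the document; a production evaluator would typically ask an LLM or a cross-encoder instead. `Grade`, `grade_document`, and the `min_overlap` threshold are hypothetical names.

```python
import re
from dataclasses import dataclass

# Small stopword list so filler words do not count as evidence of relevance
STOPWORDS = {"a", "an", "and", "are", "for", "how", "in", "is", "of", "the", "to", "what"}

def content_terms(text):
    # Lowercase, keep alphanumeric runs, and drop stopwords
    return {t for t in re.findall(r"[a-z0-9]+", text.lower()) if t not in STOPWORDS}

@dataclass
class Grade:
    binary_score: str  # "yes" or "no"
    overlap: float     # fraction of the question's content terms found in the document

def grade_document(question, document, min_overlap=0.5):
    q_terms = content_terms(question)
    if not q_terms:
        return Grade("no", 0.0)
    overlap = len(q_terms & content_terms(document)) / len(q_terms)
    return Grade("yes" if overlap >= min_overlap else "no", overlap)

def grade_documents(question, documents):
    # Forward only the documents graded "yes"
    return [d for d in documents if grade_document(question, d).binary_score == "yes"]

docs = ["Refund window is 30 days.", "Upgrade rules and discounts."]
print(grade_documents("What is the refund window?", docs))  # ['Refund window is 30 days.']
```

Note the limitation of a purely lexical check: "Refunds" would not match "refund" here, which is one reason model-based graders are usually preferred.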

3. Generator

The generator is the LLM that produces answers. Unlike in a basic RAG pipeline, the generator in CRAG is never fed unchecked inputs. It receives only validated context.

4. Feedback loop

The feedback loop is how the system improves over time. It logs user questions, confidence scores, and user feedback, and uses them to pinpoint errors or knowledge gaps that may be causing issues.

Over time, this loop can expand the knowledge base, retrain retrieval models, and refine evaluator criteria, making CRAG more effective with each iteration. Techniques like self-reflection or query rewriting can strengthen this stage further.
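The loop only pays off if logged feedback is actually aggregated. Here is a minimal sketch that assumes each log entry is a dict with `confidence` and `missing` keys (the same shape `log_feedback` uses in step 5 of the implementation) and surfaces missing facts that recur across low-confidence answers. `recurring_gaps` and its thresholds are hypothetical.

```python
from collections import Counter

def recurring_gaps(feedback_entries, threshold=0.7, min_count=2):
    """Count missing facts reported on low-confidence answers and return
    those seen at least `min_count` times, most frequent first."""
    counts = Counter()
    for entry in feedback_entries:
        if entry["confidence"] < threshold:
            counts.update(entry.get("missing", []))
    return [(term, n) for term, n in counts.most_common() if n >= min_count]

log = [
    {"query": "refund policy?", "confidence": 0.6, "missing": ["refund exceptions"]},
    {"query": "refunds on annual plans?", "confidence": 0.5, "missing": ["refund exceptions", "annual plans"]},
    {"query": "export formats?", "confidence": 0.9, "missing": []},
]
print(recurring_gaps(log))  # [('refund exceptions', 2)]
```

Recurring gaps are good candidates for new documents in the knowledge base or new synonyms in the search index.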

Next, we will map out how these pieces fit into a corrective RAG workflow.

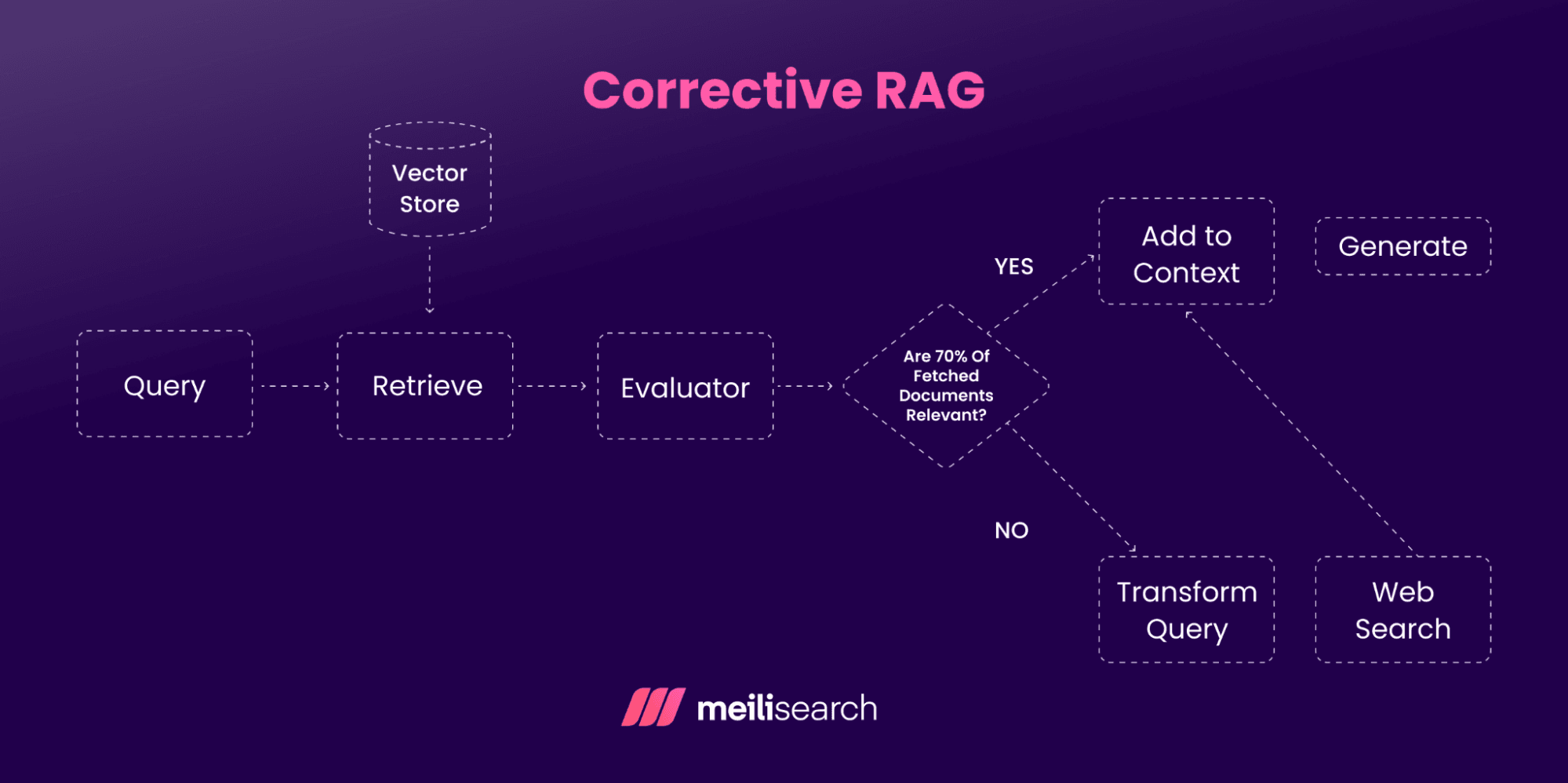

What does a corrective RAG workflow look like?

A corrective RAG workflow adds evaluation and correction layers to the standard retrieval–generation cycle. The goal is to ensure the language model works only with the most reliable and relevant context.

- Query input: The process starts with a user query. The system interprets intent and sets up retrieval.

- Retrieval: The retriever fetches candidate passages from the knowledge base, often using hybrid methods like keyword plus vector search, as detailed in our guide to RAG techniques. Tools like Meilisearch, LangChain, LlamaIndex, or even Tavily as a web search tool can be integrated here.

- Evaluation: The evaluator scores each retrieved passage for relevance to the query, filters out weak or irrelevant passages, and lets only high-confidence context continue forward. Evaluators might rely on a grader built on embedding similarity (for example, OpenAI embeddings) or on a transformer model or LLM acting as a judge.

- Correction: Where evaluation leaves weak spots or gaps, the system reranks, replaces, or augments passages. It may split documents into smaller pieces (for example, with a text_splitter) to keep only the relevant parts, or rewrite the query in a transform_query step and retrieve again.

- Generation: The validated context is passed to the language model, which drafts a response based on that information.

- Iteration: User feedback, confidence scores, and query logs feed into the feedback loop, helping the system improve retrieval quality over time.
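The Correction step's idea of splitting documents into smaller pieces and keeping only the relevant ones can be sketched as follows. This is a minimal illustration under simple assumptions: a regex sentence splitter stands in for a text_splitter, and relevance is word overlap with the query. `split_into_strips` and `refine` are hypothetical names.

```python
import re

STOPWORDS = {"a", "an", "and", "are", "for", "how", "in", "is", "of", "the", "to", "what"}

def split_into_strips(passage):
    # Naive sentence splitter: break after ., ! or ? followed by whitespace
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", passage) if s.strip()]

def terms(text):
    return {t for t in re.findall(r"[a-z0-9]+", text.lower()) if t not in STOPWORDS}

def refine(query, passages, min_hits=1):
    # Keep strips sharing at least `min_hits` content terms with the query, then rejoin them
    q = terms(query)
    kept = [
        strip
        for passage in passages
        for strip in split_into_strips(passage)
        if len(q & terms(strip)) >= min_hits
    ]
    return " ".join(kept)

passage = "Refund window is 30 days. Exports support CSV and JSON."
print(refine("refund window", [passage]))  # Refund window is 30 days.
```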

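This workflow maps naturally onto a graph-based architecture: each stage (retriever, evaluator, generator, feedback) is a node, and the nodes share a state object (often named GraphState in tutorials) with a structured schema. Frameworks like LangGraph (langgraph) and langchain_core let developers compile such pipelines, and LangChain's as_retriever() method turns a vector store into a retriever that can serve as the retrieval node.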
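The graph structure can be sketched in plain Python without any framework. This is not the LangGraph API; it only mirrors the idea of nodes that read and update a shared `GraphState`, plus a conditional edge (`decide`) that either sends graded documents to generation or rewrites the query and retries once. The knowledge base, the rewrite table, and every function name are hypothetical.

```python
from typing import Callable, Dict, List, TypedDict

class GraphState(TypedDict):
    question: str
    documents: List[str]
    generation: str
    retries: int

# Hypothetical stand-ins for a search index and an LLM query rewriter
KB = {"refund window": ["Refund window is 30 days."]}
REWRITES = {"how long do refunds take?": "refund window"}

def retrieve(state: GraphState) -> GraphState:
    return {**state, "documents": KB.get(state["question"].lower(), [])}

def grade(state: GraphState) -> GraphState:
    # Keep documents that contain at least one word of the question
    words = state["question"].lower().split()
    kept = [d for d in state["documents"] if any(w in d.lower() for w in words)]
    return {**state, "documents": kept}

def transform_query(state: GraphState) -> GraphState:
    q = state["question"]
    return {**state, "question": REWRITES.get(q.lower(), q), "retries": state["retries"] + 1}

def generate(state: GraphState) -> GraphState:
    # Placeholder for an LLM call that answers only from the graded documents
    answer = " ".join(state["documents"]) or "Not enough information"
    return {**state, "generation": answer}

def decide(state: GraphState) -> str:
    # Conditional edge: generate if documents survived grading or the retry budget is spent
    if state["documents"] or state["retries"] >= 1:
        return "generate"
    return "transform_query"

NODES: Dict[str, Callable[[GraphState], GraphState]] = {
    "retrieve": retrieve,
    "grade": grade,
    "transform_query": transform_query,
    "generate": generate,
}
# Fixed edges; "grade" is followed by the conditional edge `decide` instead
EDGES = {"retrieve": "grade", "transform_query": "retrieve"}

def run(question: str, max_steps: int = 10) -> GraphState:
    state: GraphState = {"question": question, "documents": [], "generation": "", "retries": 0}
    node = "retrieve"
    for _ in range(max_steps):
        state = NODES[node](state)
        if node == "generate":
            return state
        node = decide(state) if node == "grade" else EDGES[node]
    raise RuntimeError("graph did not reach the generate node")
```

For example, `run("How long do refunds take?")` finds nothing on the first pass, rewrites the question to "refund window", retrieves again, and generates from the refund document.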

How to implement corrective RAG

Corrective RAG adds a quality gate between document retrieval and generation. You start by setting up fast recall, then add steps to rerank, validate, and refine context before passing it to the language model.

The examples below are in Python and use the meilisearch and openai client libraries. Advanced users sometimes explore Self-RAG systems, where the model itself decides when to retrieve external knowledge and critiques its own output before committing to a final response.

1. Index data with Meilisearch

The first step is to load documents into a search index and adjust retrieval controls so you can quickly recall candidates and apply filters or synonyms before performing any corrective checks.

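```python
# pip install meilisearch
from meilisearch import Client

# Connect to a local Meilisearch instance (replace MASTER_KEY with your key)
client = Client("http://127.0.0.1:7700", "MASTER_KEY")
idx = client.index("docs")

docs = [
    {"id": "1", "title": "FAQ: Billing", "body": "Upgrade rules and discounts."},
    {"id": "2", "title": "Guide: Exports", "body": "CSV, JSON, API sync details."},
    {"id": "3", "title": "Policy: Refunds", "body": "Refund window is 30 days."},
]

# Meilisearch processes writes asynchronously, so wait for each task before searching
task = idx.add_documents(docs, primary_key="id")
client.wait_for_task(task.task_uid)

task = idx.update_settings({
    "searchableAttributes": ["title", "body"],
    "filterableAttributes": ["title"],
    "typoTolerance": {"enabled": True},
    # One-way synonyms: a search for "refund" also matches "return" and "money back"
    "synonyms": {"refund": ["return", "money back"]},
})
client.wait_for_task(task.task_uid)
```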

2. Retrieve candidates and rerank with corrective signals

After recall, the next step is to rerank and validate results. This filters out noisy passages so that only high-confidence context is passed to generation.

```python
import re

query = "What is the refund policy?"

# Fast recall from Meilisearch
hits = idx.search(query, {"limit": 10})["hits"]

# Example: simple heuristic reranker that counts query terms appearing in the hit
def relevance_score(hit, q):
    text = (hit.get("title", "") + " " + hit.get("body", "")).lower()
    # Strip punctuation from the query so "policy?" is matched as "policy"
    terms = re.findall(r"\w+", q.lower())
    return sum(1 for term in terms if term in text)

scored = sorted(hits, key=lambda h: relevance_score(h, query), reverse=True)

# Keep only the top-ranked candidates for the (more expensive) validation step
TOP_K = 3
candidates = scored[:TOP_K]

# Optional: LLM-as-judge to validate each candidate against the query
def llm_validate(q, passage) -> float:
    # Return a 0-1 score. Stub for brevity: replace with a real model call.
    return 0.9 if "refund" in passage.lower() else 0.4

# Keep candidates whose validation score clears the threshold
validated = []
for c in candidates:
    score = llm_validate(query, c.get("body", ""))
    if score >= 0.7:
        validated.append({**c, "crag_score": score})
```
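The `llm_validate` stub above returns fixed scores. To replace it with a real LLM-as-judge call, you need a prompt that asks for a score and a parser that tolerates messy replies. The sketch below assumes the model is asked for a single number between 0 and 1; the prompt wording and both helper names are hypothetical.

```python
import re

def build_judge_prompt(query, passage):
    # Hypothetical prompt wording; tune it for your model
    return (
        "Rate how well the passage answers the question on a scale from 0 to 1.\n"
        f"Question: {query}\n"
        f"Passage: {passage}\n"
        "Reply with only the number."
    )

def parse_judge_score(reply, default=0.0):
    # Take the first number in the reply (e.g. "0.85", ".8", "1") and clamp it to [0, 1]
    match = re.search(r"\d*\.?\d+", reply)
    if not match:
        return default
    return min(1.0, max(0.0, float(match.group())))
```

You would send `build_judge_prompt(query, passage)` to your model and pass the reply text to `parse_judge_score`. Defaulting to 0.0 on an unparseable reply means a confused judge drops the passage instead of letting it through.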

With clean and reliable candidates, the next step is to check for contradictions or missing pieces, then correct or augment the context before generation.

3. Evaluate and correct the context

We will validate the shortlisted passages and correct them when needed. This step catches contradictions, fills gaps, and removes duplicates so only strong evidence reaches the model.

```python
def detect_duplicates(items):
    # Drop exact duplicates (same title and body), keeping the first occurrence
    seen, out = set(), []
    for it in items:
        key = (it.get("title", ""), it.get("body", ""))
        if key not in seen:
            seen.add(key)
            out.append(it)
    return out

def needs_more_evidence(query, validated):
    # Gap check for this example: no validated passage mentions refunds
    has_refund = any("refund" in c.get("body", "").lower() for c in validated)
    return not has_refund

def expand_query(query):
    # Hand-written expansions; in practice these could come from an LLM rewrite
    return [query, "refund window", "return policy", "money back"]

validated = detect_duplicates(validated)

if needs_more_evidence(query, validated):
    # Run extra searches with the expanded terms
    more = []
    for t in expand_query(query):
        more.extend(idx.search(t, {"limit": 5})["hits"])

    # Merge, deduplicate, then rerun the validator over everything
    merged = detect_duplicates(validated + more)
    revalidated = []
    for c in merged:
        score = llm_validate(query, c.get("body", ""))
        if score >= 0.7:
            revalidated.append({**c, "crag_score": score})
    validated = revalidated
```
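`detect_duplicates` only removes exact copies, so the same fact with different punctuation or a slightly reworded sentence still gets through. One common option, swapped in here for illustration, is Jaccard similarity over word shingles. The 0.8 threshold and the 3-word shingle size are assumptions to tune on your own data, and the sketch works on plain strings (for example, each hit's `body`).

```python
import re

def shingles(text, n=3):
    # Overlapping n-word sequences from the lowercased text
    words = re.findall(r"[a-z0-9]+", text.lower())
    if len(words) < n:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}

def jaccard(a, b):
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)

def drop_near_duplicates(passages, threshold=0.8, n=3):
    # Keep a passage only if it is not too similar to any passage already kept
    kept, kept_shingles = [], []
    for p in passages:
        s = shingles(p, n)
        if all(jaccard(s, k) < threshold for k in kept_shingles):
            kept.append(p)
            kept_shingles.append(s)
    return kept
```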

Next, only the corrected and validated context is passed on to the language model.

4. Assemble the prompt and generate the final answer

Next, combine the refined passages into a compact prompt with clear instructions: ask the model to cite its sources and to answer only from the supplied evidence, ignoring anything else.

```python
from openai import OpenAI

def make_context(validated, max_chars=1800):
    # Pack passages into the prompt until the character budget is reached
    out, used = "", []
    for c in validated:
        piece = f"[{c.get('id', '')}] {c.get('title', '')}: {c.get('body', '')}\n"
        if len(out) + len(piece) <= max_chars:
            out += piece
            used.append(c)
    return out, used

ctx_text, used_passages = make_context(validated)

prompt = f"""
You are a careful assistant.

Question: {query}

Evidence:
{ctx_text}

Instructions:
1) Answer strictly from Evidence.
2) Cite sources by their IDs like [1], [2].
3) If Evidence is insufficient, say "Not enough information" and list missing facts.

Return a concise, factual answer.
"""

# OpenAI() reads OPENAI_API_KEY from the environment.
# A separate name keeps the Meilisearch `client` from being shadowed.
llm = OpenAI()
response = llm.chat.completions.create(
    model="gpt-4o",
    messages=[{"role": "user", "content": prompt}],
)
answer = response.choices[0].message.content
print(answer)
```
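Because the prompt asks for citations by ID, you can add one more corrective check after generation: confirm that every cited ID was actually in the evidence. The helper below is a hypothetical sketch that assumes citations look like `[3]` or `[1, 2]`.

```python
import re

def check_citations(answer, used_ids):
    """Return (cited, unknown): IDs cited as [id] or [id, id] in the answer,
    and the subset that does not match any passage placed in the prompt."""
    cited = set()
    for group in re.findall(r"\[([^\[\]]+)\]", answer):
        cited.update(part.strip() for part in group.split(",") if part.strip())
    unknown = cited - {str(i) for i in used_ids}
    return cited, unknown
```

After generation, something like `cited, unknown = check_citations(answer, [p.get("id") for p in used_passages])` flags answers that cite nothing or cite sources that were never supplied; those are good candidates for the feedback loop.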

Next, close the loop with feedback so the system learns and improves over time.

5. Log feedback and improve continuously

Closing the loop with feedback means recording confidence, missing facts, and user signals so the pipeline can correct itself over time. This data shows where to tune retrieval settings in Meilisearch to expand coverage where gaps are common.

```python
feedback_log = []
CONFIDENCE_THRESHOLD = 0.7

def log_feedback(query, answer, confidence, missing=None):
    # Record each answer with its confidence and any facts reported as missing
    feedback_log.append({
        "query": query,
        "answer": answer,
        "confidence": confidence,
        "missing": missing or [],
    })

def should_expand(entry):
    return entry["confidence"] < CONFIDENCE_THRESHOLD or len(entry["missing"]) > 0

def auto_expand(missing_terms):
    # Search for each missing term and collect the hits for later review
    collected = {}
    for term in missing_terms:
        collected[term] = idx.search(term, {"limit": 10})["hits"]
        # Here you would extract entities and relations and upsert them to your store,
        # or keep the hits for future retrieval tuning
    return collected

# Example usage
log_feedback(query, answer, confidence=0.68, missing=["refund timeframe", "exceptions"])
for entry in feedback_log:
    if should_expand(entry):
        expansions = auto_expand(entry["missing"])
```
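The `feedback_log` above lives in memory, so it disappears when the process restarts. A minimal way to persist it, assuming a local file is acceptable, is JSON Lines: one JSON object per line. `append_feedback` and `load_feedback` are hypothetical helpers; a database or your observability stack would serve the same purpose.

```python
import json
from pathlib import Path

def append_feedback(path, entry):
    # JSON Lines: one object per line, so new entries are simple appends
    with Path(path).open("a", encoding="utf-8") as f:
        f.write(json.dumps(entry, ensure_ascii=False) + "\n")

def load_feedback(path):
    # Return all logged entries, or an empty list if nothing has been logged yet
    p = Path(path)
    if not p.exists():
        return []
    with p.open(encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]
```

Calling `append_feedback` from inside `log_feedback` would keep both the in-memory list and the file in sync.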

This completes the corrective flow.

You now have fast recall with Meilisearch, corrective scoring and validation, targeted augmentation, safe generation, and a learning loop that keeps improving.

When should you use CRAG?

Corrective RAG is most useful in industries or situations where precision is essential. Because it filters and corrects context before generation, it adds the most value where a wrong answer is costly.

Let’s see where we can use it:

- Customer support: A chatbot fed the proper context performs far better than one with misleading context.

- Healthcare: CRAG plays a significant role in healthcare by validating retrieved studies and records, so clinical support tools rely only on trustworthy medical evidence.

- Legal research: Corrective RAG grounds answers in the right case law, statutes, and precedents to avoid hallucinations or imprecise citations.

- Financial services: CRAG screens market data, reports, and filings before generation, reducing the risk of misleading compliance guidance or poor investment insights.

- Enterprise knowledge management: CRAG improves internal search by resolving contradictions and filtering out duplicate or noisy content across teams.

- Education and training: CRAG can support tutoring systems by delivering fact-checked explanations rather than shallow or misleading answers.

CRAG adoption in these high-stakes domains adds a layer of trust to AI systems.

What are the limitations of corrective RAG?

CRAG offers developers real benefits, but they come with tradeoffs. It improves factual accuracy, but its extra evaluation and correction steps can limit scalability and performance.

Here are some other CRAG limitations to keep in mind:

- Computational cost: More steps mean more computation. Running retrieval, evaluation, and correction raises both latency and infrastructure requirements, which can be a challenge for applications that need real-time responses.

- Dependency on feedback mechanisms: CRAG relies heavily on evaluators or human-in-the-loop feedback to refine outputs. If feedback quality is poor or inconsistent, the corrections can be less effective.

- Potential overcorrection: With correction, there’s always potential for overcorrection, and you might lose useful but unconventional information.

- Complexity of implementation: Each added layer (grader, query rewriter, re-retrieval loop) is another component that can fail and must be tuned, tested, and monitored.

- Limited adaptability: If the correction logic is too rigid, the system may struggle to adjust quickly to new datasets or changing data sources without retraining or fine-tuning.
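Overcorrection and rigid thresholds can be softened with a fallback: if strict filtering removes everything, keep the best-scoring passages anyway and flag them as low-confidence so the prompt (or UI) can say so. This is one possible design, sketched under the assumption that passages arrive as `(passage, score)` pairs with scores between 0 and 1; `filter_with_fallback` and `min_keep` are hypothetical.

```python
def filter_with_fallback(scored, threshold=0.7, min_keep=1):
    """Keep (passage, score) pairs at or above `threshold`. If fewer than
    `min_keep` survive, keep the best-scoring ones anyway.

    Returns (passage, score, low_confidence) triples, best first.
    """
    ranked = sorted(scored, key=lambda ps: ps[1], reverse=True)
    passed = [(p, s, False) for p, s in ranked if s >= threshold]
    if len(passed) >= min_keep:
        return passed
    # Fallback: top `min_keep` passages, each flagged if it missed the threshold
    return [(p, s, s < threshold) for p, s in ranked[:min_keep]]
```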

Despite these challenges, CRAG is still a powerful approach for domains where accuracy outweighs latency or cost.

Let’s see how corrective RAG differs from speculative RAG.

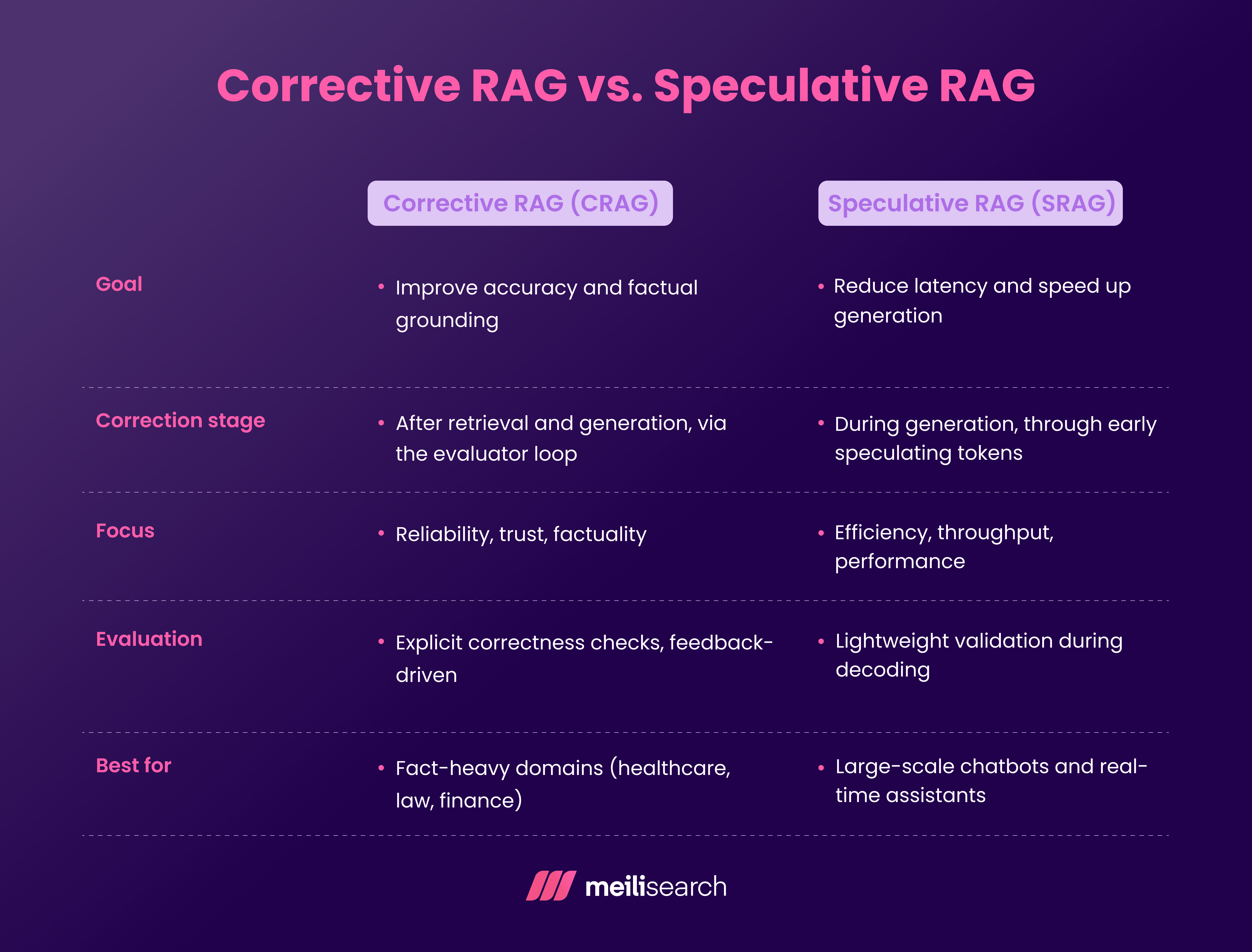

How does corrective RAG differ from speculative RAG?

The main difference between corrective RAG and speculative RAG is when and how corrections or predictions occur during the retrieval–generation process.

Corrective RAG evaluates the retrieved documents and corrects the context before generation, while speculative RAG speeds up response generation by producing multiple candidate answers early and pruning them later.
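The difference in control flow can be sketched in a few lines. In this hypothetical example, speculative-style generation drafts one answer per subset of the retrieved documents and then lets a verifier keep the best draft; CRAG, by contrast, would grade the documents first and generate once. `first_doc_draft` and `overlap_verifier` are toy stand-ins for a drafting model and a verifier model.

```python
from typing import Callable, List, Sequence, Tuple

def speculative_answer(
    query: str,
    documents: Sequence[str],
    draft: Callable[[str, Sequence[str]], str],
    verify: Callable[[str, str], float],
    n_drafts: int = 2,
) -> Tuple[str, List[str]]:
    # Split the documents round-robin into n_drafts subsets and draft one answer per subset
    subsets = [documents[i::n_drafts] for i in range(n_drafts)]
    drafts = [draft(query, subset) for subset in subsets if subset]
    if not drafts:
        return "", []
    # Prune: keep the draft the verifier scores highest
    return max(drafts, key=lambda d: verify(query, d)), drafts

# Hypothetical stand-ins for a small drafting model and a larger verifier
def first_doc_draft(query, subset):
    return subset[0]

def overlap_verifier(query, answer):
    return len(set(query.lower().split()) & set(answer.lower().rstrip(".").split()))
```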

In short, CRAG emphasizes trustworthiness, while speculative RAG prioritizes speed. Both can complement each other depending on system goals.

Final thoughts on building with corrective RAG

Corrective RAG helps close the gap between speed and reliability in generative AI systems. Adding evaluation and correction loops reduces hallucinations and gives you more trustworthy outputs in real-world applications.

How Meilisearch can power faster, more accurate retrieval in corrective RAG

Meilisearch gives your CRAG pipeline a high-performance retrieval layer. Fast full-text and hybrid search give the correction stages a relevant candidate pool to work from.

Start building more reliable RAG workflows today with