What is agentic RAG? How it works, benefits, challenges & more

Discover what agentic RAG is, how it works, the benefits, the challenges, the drawbacks, common tools used in agentic RAG pipelines & much more.

Agentic RAG (retrieval-augmented generation) gives your RAG pipelines agent-like decision-making ability. Instead of retrieving blindly, agentic RAG plans, retrieves, and validates steps to provide context-rich answers.

Why is this important? It helps developers by improving reliability, reducing fragmented responses, and enabling large language models (LLMs) to handle more complex tasks.

The key benefits of agentic RAG are stronger reasoning, higher accuracy, and better explainability, while the key drawbacks are more computation, dependency on orchestration, and added complexity.

Agentic RAG works through a loop of planning, retrieval, and evaluation. Tools like Meilisearch, LangChain, and LlamaIndex make these pipelines possible.

Let’s break down what agentic RAG is in detail.

What is agentic RAG?

Agentic RAG is an advanced method that equips a RAG pipeline with agent-like reasoning.

Instead of relying on static information retrieval, it uses planning, decision-making, and iterative evaluation to determine when and how to retrieve context. Naturally, this makes the process more adaptive and precise.

Technically, agentic RAG refers to retrieval systems that embed autonomous agents into the pipeline to refine context, rerank sources, and validate outputs before passing them to the AI model. This helps the model generate accurate responses more consistently.

Curious to see an example? Let’s get right to it.

An example of agentic RAG in action

While there are plenty of examples of agentic RAG in action, we’ll stick to two.

The first example would be an AI customer support chatbot that actually considers a query instead of returning the first matching document it finds.

It first decides whether to search product manuals, FAQs, or previous tickets before giving you an answer.

This can be applied in finance as well. Imagine a compliance assistant that’s smart enough to retrieve regulatory filings and cross-check them against internal policies before it generates an output.

In both these cases, agentic RAG acts as a decision-maker instead of just a retriever, leveraging tool use and automation to improve results.
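The routing decision in both examples can be sketched as a small function. This is a minimal illustration, not a production router: the source names (`manuals`, `faqs`, `tickets`) and the keyword hints are hypothetical, and a real agent would usually let an LLM or a classifier make this choice.

```python
import re

# Hypothetical source names and keyword hints, for illustration only.
SOURCE_HINTS = {
    "manuals": ("install", "configure", "setup", "specification"),
    "faqs": ("refund", "pricing", "shipping", "account"),
    "tickets": ("error", "crash", "broken", "failed"),
}


def choose_sources(query: str, default: str = "faqs") -> list[str]:
    """Return the sources whose hint words appear in the query."""
    # Tokenize on letters only so punctuation does not block a match.
    words = set(re.findall(r"[a-z]+", query.lower()))
    chosen = [
        source
        for source, hints in SOURCE_HINTS.items()
        if words & set(hints)
    ]
    # Fall back to a default source when no hint matches.
    return chosen or [default]


print(choose_sources("How do I configure the router after a crash?"))
print(choose_sources("Hello there"))
```

The fallback keeps the agent from returning nothing when a query matches no rule, which is one simple way to keep routing from becoming a new failure point.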

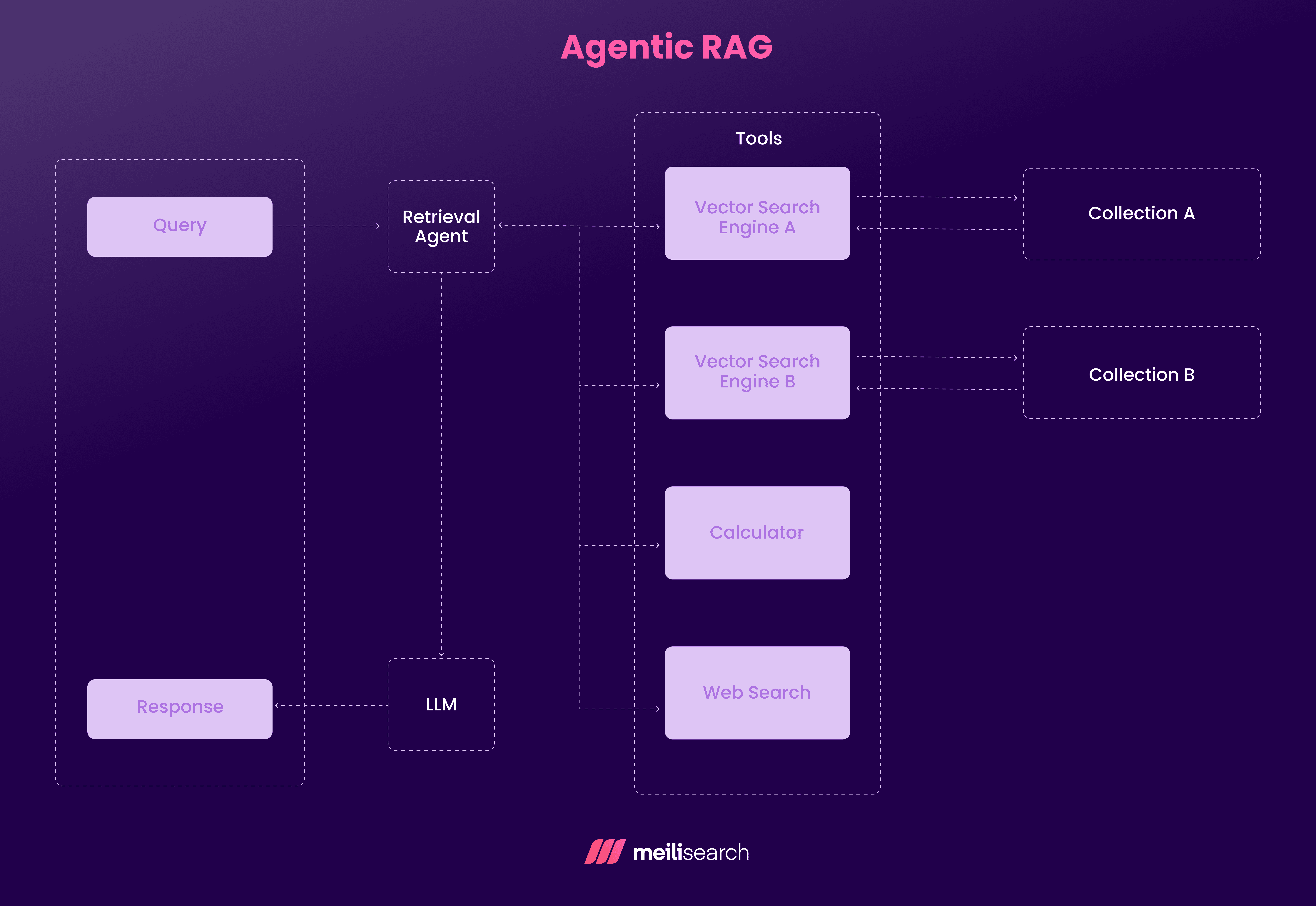

How does agentic RAG work?

Agentic RAG turns retrieval into a step-by-step process with reasoning behind it. The ‘agent’ plans, validates, and iterates instead of simply returning the top result.

The workflow of agentic RAG looks like this:

- Query understanding: It starts by understanding the user query. Then, if needed, it breaks it down into sub-tasks.

- Planning retrieval: The agent decides which data sources to consult and in what order, be they structured databases, document collections, or APIs.

- Context retrieval: The relevant documents are retrieved through vector, hybrid, or keyword search. Tools like Meilisearch make this stage efficient and customizable based on your preference.

- Evaluation and correction: The retrieved data is scored for relevance, consistency, and completeness. The passages with low confidence are reranked, filtered, or replaced.

- Generation with oversight: The LLM produces a draft response, but the agent may call for additional data retrieval steps or corrections before finalizing.

- Feedback loop: Agent logs, confidence scores, and user feedback are used to refine retrieval and planning for future queries.
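The workflow above can be sketched as a small control loop. This is a minimal sketch under stated assumptions: `retrieve`, `score`, and `rewrite` are hypothetical callables standing in for a search engine, an evaluator, and a query rewriter, and the threshold and round limit are arbitrary values.

```python
from typing import Callable


def agentic_retrieve(
    query: str,
    retrieve: Callable[[str], list[str]],
    score: Callable[[str, str], float],
    rewrite: Callable[[str], str],
    threshold: float = 0.5,
    max_rounds: int = 3,
) -> tuple[list[str], list[str]]:
    """Retrieve, evaluate, and retry until some passage is good enough.

    Returns the accepted passages and the list of queries that were tried.
    """
    tried: list[str] = []
    current = query
    for _ in range(max_rounds):
        tried.append(current)
        passages = retrieve(current)
        # Evaluation: keep only passages the scorer rates at or above the threshold.
        accepted = [p for p in passages if score(query, p) >= threshold]
        if accepted:
            return accepted, tried
        # Correction: reformulate the query and try again.
        current = rewrite(current)
    return [], tried


# Toy stand-ins so the sketch runs without any external service.
corpus = {
    "mfa setup": ["MFA is mandatory for admins."],
    "multi-factor authentication setup": [
        "Enable multi-factor authentication in the admin console."
    ],
}
passages, tried = agentic_retrieve(
    "mfa setup",
    retrieve=lambda q: corpus.get(q, []),
    score=lambda q, p: 1.0 if "Enable" in p else 0.0,
    rewrite=lambda q: q.replace("mfa", "multi-factor authentication"),
)
print(passages)
print(tried)
```

The `max_rounds` cap bounds the extra latency and cost that iteration adds, and the returned list of tried queries gives a simple trace of what the agent did.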

For the pipeline to work, specific key components are critical:

- Retriever for fast recall (e.g., Meilisearch)

- Planner that breaks down queries and decides retrieval order

- Evaluator that checks factual grounding and confidence

- Feedback loop to continuously improve outputs

Combining these elements allows agentic RAG to transform retrieval from a static lookup into a proper decision-making process with multi-agent systems coordinating tasks.

Now, let’s see why this approach is important for LLM applications.

Is agentic RAG important?

Yes, agentic RAG is important because it addresses the core weaknesses of traditional RAG pipelines.

First, it reduces hallucination by validating context before generation. Structured planning also reduces fragmented retrieval, which improves reliability for complex queries.

For example, it helps in compliance research, where accuracy is critical, and in customer support, where context switching can cause irrelevant answers.

Where is agentic RAG used?

Agentic RAG is applied in domains with significant reliance on accurate retrieval and adaptive reasoning.

Let’s see some common use cases below:

- Customer support: Support assistants can return accurate, context-aware responses by choosing the right source for each query, which is why agentic RAG is becoming a must-have in this field.

- Healthcare: Agentic RAG can be applied in the form of clinical assistants capable of validating medical literature and patient records before producing an output.

- Legal research: The legal domain can benefit from agentic RAG, which maps statutes, precedents, and case law and cross-checks context before drafting summaries or legal arguments.

- Financial services: In finance, compliance bots retrieve regulatory filings, compare them with internal policies, and highlight discrepancies for auditors.

- Education: You will find intelligent tutoring systems that plan which sources to pull from and explain answers with supporting context.

- Enterprise knowledge management: Agents review large document repositories to rank and validate sources, which helps resolve contradictions and eliminate noise.

- Scientific research: RAG systems explore datasets and published papers, iteratively validating evidence to support hypothesis building.

- Multimodal applications: Agentic RAG integrates text, images, and structured data for real-time data analysis in media and research contexts.

By adapting retrieval to domain-specific needs, agentic RAG improves the reliability and usability of artificial intelligence systems.

What are the benefits of implementing agentic RAG?

The key benefits of implementing agentic RAG are:

- Improved accuracy: Agentic RAG improves response accuracy by validating retrieved context and reducing hallucinations.

- Context-aware reasoning: Planning retrieval steps connects multiple sources into a coherent response.

- Explainability: Each decision, including what was retrieved, why it was chosen, and how it influenced the output, can easily be traced. Hence, the output becomes transparent to both developers and end users.

- Adaptability: Agentic RAG systems dynamically adjust retrieval strategies for different queries, making pipelines more flexible than static RAG implementations.

- Continuous improvement: With feedback loops, the system learns from past queries to refine retrieval and planning over time.

- Smarter routing: Agents determine which sources or external tools to consult, reducing noise and improving function selection.

Together, these benefits make agentic RAG a strong candidate for mission-critical AI systems.

Are there drawbacks in agentic RAG implementation?

Indeed, there are tradeoffs for agentic RAG’s advanced usability and benefits. These include:

- Higher computational cost: With great power comes a higher recurring cost. The added steps of planning, reranking, and evaluation increase latency and resource usage, which can be a bottleneck for real-time applications.

- Complexity of setup: Unlike standard RAG, agentic pipelines require orchestration between retrievers, evaluators, and feedback loops. This raises the barrier to adoption.

- Dependency on evaluators: The accuracy of corrective steps often relies on evaluator models or heuristics. If these are weak or misaligned, the whole system can crumble.

- Risk of overcorrection: In some cases, the evaluator is stricter than it needs to be. This leads to overcorrection, which might discard useful retrieved information.

These drawbacks highlight the need for careful infrastructure planning before implementing agentic RAG.
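One way to limit the overcorrection risk is to keep a minimum number of passages even when the evaluator scores them below the threshold. The sketch below assumes each passage already has a relevance score between 0 and 1. The threshold, the `min_keep` value, and the sample scores are all hypothetical.

```python
def filter_passages(
    scored: list[tuple[str, float]],
    threshold: float = 0.6,
    min_keep: int = 2,
) -> list[str]:
    """Drop low-scoring passages but always keep the top `min_keep`."""
    ranked = sorted(scored, key=lambda item: item[1], reverse=True)
    kept = [text for text, s in ranked if s >= threshold]
    if len(kept) < min_keep:
        # Guard against overcorrection: fall back to the best-ranked passages.
        kept = [text for text, _ in ranked[:min_keep]]
    return kept


scored = [("passage A", 0.9), ("passage B", 0.55), ("passage C", 0.2)]
print(filter_passages(scored))
```

Here only one passage clears the threshold, so the guard keeps the two best-ranked passages instead of handing the generator a single source.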

We also need to consider the challenges of deploying agentic RAG.

What are the challenges of implementing agentic RAG?

Beyond drawbacks, teams face practical challenges when deploying agentic RAG. Let’s look at some below:

- Data integration: Many enterprises operate across siloed systems and formats. Building pipelines that unify structured, semi-structured, and unstructured data is not easy, to say the least.

- Scalability: Every query can trigger several planning, retrieval, and evaluation calls, so cost and latency grow faster than in single-pass RAG as traffic increases.

- Security and compliance: Agents that query multiple sources must respect access controls and data-handling rules at every step, which adds complexity to the architecture.

- Developer expertise: Implementing orchestration logic demands experience with frameworks, retrievers, and agent systems. Adoption can stall if the team lacks that experience.

Next, let’s look at the tools commonly used in agentic RAG pipelines.

What tools are used in agentic RAG pipelines?

Several tools support agentic RAG, from orchestration frameworks to retrieval engines:

- LangChain: LangChain is a widely used orchestration framework that connects retrievers, evaluators, and generators into an agentic workflow. It allows the LLM to decide when to retrieve, when to generate, and when to validate. It’s central to many agentic setups.

- LlamaIndex: A tool designed for indexing and structuring data, which helps agents plan retrieval strategies across multiple external knowledge sources. It is often used to build a hierarchical context or combine unstructured and structured data.

- Vector databases (e.g., Weaviate, Pinecone): These provide scalable vector search that supports semantic similarity through embedding-based methods. Agents use them for dense retrieval before applying correction or validation steps.

- Meilisearch: A fast, developer-friendly search engine that fits as the initial retrieval layer in agentic RAG. It stands out thanks to its hybrid search, filters, and typo tolerance, which help agents recall precise candidates before running corrective or validation loops. For AI agent deployment, it provides speed and fine-grained control, which keeps pipelines responsive while maintaining relevance. It’s an all-in-one package for developers.

These tools create the infrastructure agents need to plan, retrieve, evaluate, and refine responses.
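As an illustration of how an agent might tune the retrieval layer per query, the sketch below builds a Meilisearch search-parameter dictionary with an optional filter and hybrid search settings. It rests on a few assumptions: `category` is a hypothetical document attribute that would need to be declared filterable in the index settings, `default` is an assumed embedder name, and hybrid search only works once an embedder is configured on the index.

```python
from typing import Optional


def build_search_params(
    limit: int = 5,
    category: Optional[str] = None,
    semantic_ratio: Optional[float] = None,
    embedder: str = "default",  # assumed embedder name
) -> dict:
    """Build a Meilisearch search-parameter dict for one retrieval step."""
    params: dict = {"limit": limit}
    if category is not None:
        # `category` is a hypothetical attribute; it must be declared
        # filterable in the index settings before this filter works.
        params["filter"] = f'category = "{category}"'
    if semantic_ratio is not None:
        # 0.0 is pure keyword search, 1.0 is pure semantic search.
        params["hybrid"] = {"semanticRatio": semantic_ratio, "embedder": embedder}
    return params


params = build_search_params(category="security", semantic_ratio=0.5)
print(params)
# With a running instance: hits = index.search("MFA for admins", params)["hits"]
```

Keeping parameter construction separate from the search call lets the planner change filters or the keyword/semantic balance between retrieval rounds without touching the rest of the pipeline.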

Now, let’s walk through how to implement an agentic RAG pipeline.

How can you implement agentic RAG?

Implementing agentic RAG is a step-by-step process: you layer planning, validation, and feedback on top of a standard retrieval-generation pipeline.

The following steps show how to set it up using Meilisearch for retrieval and an LLM for reasoning:

1. Ingest and index documents with Meilisearch

The first step is preparing your source documents. They are indexed in Meilisearch so the agent can query them quickly, which provides a fast recall layer before validation and reasoning.

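    from meilisearch import Client

    # Connect to a local Meilisearch instance (replace the URL and key with your own)
    client = Client("http://127.0.0.1:7700", "MASTER_KEY")
    index = client.index("docs")

    docs = [
        {"id": "1", "title": "Onboarding Guide", "body": "Steps to onboard a new user securely."},
        {"id": "2", "title": "Security Policy", "body": "MFA is mandatory for admins."},
    ]

    # Indexing is asynchronous, so wait for the task to finish before searching
    task = index.add_documents(docs)
    client.wait_for_task(task.task_uid)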

This gives the agent a searchable knowledge base to work with.

2. Retrieve candidates and build context

Next, the agent recalls relevant information and organizes it into a usable context for reasoning.

    query = "How do I onboard a new user with MFA?"

    # Fetch the top five candidates and join them into one context string
    hits = index.search(query, {"limit": 5})["hits"]
    context = " ".join([f"{h['title']}: {h['body']}" for h in hits])

By structuring evidence, the system avoids sending raw, noisy text directly to the model.
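Because the final step asks the model to answer with citations, one option is to tag each passage with its document id when building the context. The helper below is a hypothetical variant of the context-building line, and the sample hits simply mirror the two documents indexed in step 1.

```python
def build_context(hits: list[dict]) -> str:
    """Format hits as one tagged line per document so answers can cite ids."""
    lines = [f"[{h['id']}] {h['title']}: {h['body']}" for h in hits]
    return "\n".join(lines)


# Sample hits shaped like the documents indexed in step 1.
sample_hits = [
    {"id": "1", "title": "Onboarding Guide", "body": "Steps to onboard a new user securely."},
    {"id": "2", "title": "Security Policy", "body": "MFA is mandatory for admins."},
]
print(build_context(sample_hits))
```

With ids in the context, the prompt can ask the model to cite sources as `[1]` or `[2]`, which makes the answer easier to trace back to its evidence.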

3. Validate and refine context

Before passing context to the generator, the agent checks for gaps or contradictions. If key terms are missing, it expands the query with synonyms or related phrases.

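    def needs_more_context(hits):
        # True when no retrieved passage mentions the key term
        return not any("MFA" in h["body"] for h in hits)

    if needs_more_context(hits):
        # Expand the query with a related phrase and merge the new hits
        more = index.search("multi-factor authentication", {"limit": 5})["hits"]
        hits += more
        # Rebuild the context so the generator sees the expanded evidence
        context = " ".join([f"{h['title']}: {h['body']}" for h in hits])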

This step ensures the generator sees comprehensive and accurate information.

4. Generate the final answer and log feedback

Finally, the agent generates a response with citations and logs feedback to improve future runs.

    from openai import OpenAI

    # Named `llm` so it does not overwrite the Meilisearch `client` from step 1
    llm = OpenAI(api_key="YOUR_API_KEY")

    prompt = f"Q: {query}\nContext: {context}\nAnswer with citations."
    resp = llm.chat.completions.create(
        model="gpt-4o",
        messages=[{"role": "user", "content": prompt}],
    )
    answer = resp.choices[0].message.content
    print(answer)

The feedback on confidence and missing facts can be stored to refine retrieval strategies over time.
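The snippet in this step prints the answer but does not store anything, so here is a minimal sketch of what feedback logging could look like. The record fields, the JSON Lines format, and the file location are all assumptions chosen for illustration. A production system would more likely write to a database or an observability tool.

```python
import json
import tempfile
import time
from pathlib import Path


def log_feedback(path, query, doc_ids, expanded, rating=None):
    """Append one JSON line describing a run to a local log file."""
    record = {
        "timestamp": time.time(),
        "query": query,
        "doc_ids": doc_ids,    # which documents were used as context
        "expanded": expanded,  # whether step 3 had to widen the search
        "rating": rating,      # optional user feedback, e.g. 1 to 5
    }
    with Path(path).open("a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")
    return record


# Hypothetical log location in the system temp directory.
log_path = Path(tempfile.gettempdir()) / "agentic_rag_feedback.jsonl"
log_feedback(log_path, "How do I onboard a new user with MFA?", ["1", "2"], False, rating=4)
```

Reviewing which queries needed expansion or received low ratings shows where the index, the synonyms, or the validation rule in step 3 need work.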

In the following section, let’s tackle some of the most frequently asked questions about the performance of agentic RAG.

How does agentic RAG perform?

A recent survey on agentic RAG highlights that it enables flexibility, scalability, and context awareness across complex domains like healthcare, finance, and education.

Another study on Search-R1 found that effective retrievers shorten reasoning steps and improve answer quality, with query performance prediction strongly correlating with final accuracy.

Finally, in recommendation systems, an agentic RAG framework achieved a 42.1% improvement in NDCG@5 and 35% in Hit@5 compared to standard RAG approaches.
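For readers unfamiliar with these metrics, the sketch below shows the standard definitions of Hit@k and NDCG@k for a single query, using binary relevance. The ranked list and the relevant set are toy data, not figures from the study mentioned above. Reported scores are normally averages over many queries or users.

```python
import math


def hit_at_k(ranked_ids: list[str], relevant: set[str], k: int = 5) -> float:
    """1.0 if any relevant item appears in the top k, else 0.0."""
    return 1.0 if any(i in relevant for i in ranked_ids[:k]) else 0.0


def ndcg_at_k(ranked_ids: list[str], relevant: set[str], k: int = 5) -> float:
    """NDCG@k with binary relevance (1 if relevant, 0 otherwise)."""
    # Discounted gain: relevant items count less the lower they are ranked.
    dcg = sum(
        1.0 / math.log2(rank + 2)
        for rank, item in enumerate(ranked_ids[:k])
        if item in relevant
    )
    # Ideal DCG: all relevant items ranked first.
    ideal_hits = min(len(relevant), k)
    idcg = sum(1.0 / math.log2(rank + 2) for rank in range(ideal_hits))
    return dcg / idcg if idcg > 0 else 0.0


ranked = ["a", "b", "c", "d", "e"]
relevant = {"a", "c"}
print(hit_at_k(ranked, relevant), round(ndcg_at_k(ranked, relevant), 3))
```

Hit@5 only checks whether something relevant made the top five, while NDCG@5 also rewards placing relevant items higher, so the two numbers can move independently.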

How does agentic RAG differ from traditional RAG?

We already know that traditional RAG systems use one-shot retrieval followed by generation. Agentic RAG is more capable and comprehensive. It plans, iterates, and retrieves adaptively through context validation.

How does agentic RAG differ from naive RAG?

Naive RAG uses static retrieval rules with no feedback, while agentic RAG defers retrieval decisions to the agent within a reasoning and feedback loop. This enables retrieval to evolve based on the context.

How does agentic RAG differ from graph RAG?

Graph RAG uses structured relationships for retrieval, but is typically still a single-pass retrieval system. Agentic RAG layers planning and feedback on top of graph or vector retrieval, enabling reasoning over evolving multi-hop paths.

How does agentic RAG differ from adaptive RAG?

Adaptive RAG adjusts retrieval parameters based on query difficulty. Agentic RAG, on the other hand, goes further. It actively generates new retrieval queries mid-reasoning to fill gaps or refine context.

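How does agentic RAG differ from MCP?

Agentic RAG is a retrieval-planning paradigm. In contrast, MCP (Model Context Protocol) is an open protocol that standardizes how LLM applications connect to external tools and data sources. The two are complementary rather than competing: an agent in an agentic RAG pipeline can reach its retrievers and tools through MCP servers, but MCP itself does not plan, evaluate, or refine retrieval.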

Start building intelligent RAG systems

By treating retrieval as a process of reasoning rather than a static lookup, agentic RAG forces us to design RAG agents that think before they answer.

That added deliberation may cost more compute and add complexity. Still, it sets the foundation for agentic AI that is not only more accurate but also more trustworthy, explainable, and adaptable to real-world challenges.

Meilisearch can help build a powerful RAG system

With specialized features like hybrid search, filters, and typo tolerance, Meilisearch ensures your agents get precise, relevant context before reasoning begins. Its developer-friendly API makes integration simple, so you can focus on building smarter workflows instead of managing search infrastructure.