Adaptive RAG explained: What to know in 2026

Learn how adaptive RAG improves retrieval accuracy by dynamically adjusting to user intent, query type, and context—ideal for real-world AI applications.

Traditional RAG systems work the same way every time. You ask a question, the RAG system retrieves relevant documents, and then produces a generated answer.

But what if your GenAI could think before giving out information? That’s how adaptive retrieval-augmented generation works.

Adaptive RAG decides when and how to retrieve results based on question complexity.

Some benefits of using adaptive RAG include:

- Eliminating wasted searches

- Generating quick answers for simple questions

- Getting accurate answers for more complex questions

Common use cases for adaptive RAG include customer support systems and research assistants.

Tools like Meilisearch, LangChain, Weaviate, and Chroma can be used to build an adaptive RAG system.

Implementing adaptive RAG involves training a query classifier to understand the query type, setting up routing logic to decide the answering strategy, and generating the response.

Let’s dive into each aspect to learn more about adaptive RAG and how to apply it in the real world.

What is adaptive RAG?

Adaptive RAG is a smarter version of Retrieval-Augmented Generation. Unlike standard RAG, which blindly retrieves documents for every query, adaptive RAG decides when and how to retrieve based on the complexity of the question.

Its defining trait is selectivity. It can skip retrieval for simple prompts, run a web search when fresh information is needed, or pull external knowledge from multiple sources when a question calls for it.

The idea is to make large language models (LLMs) more efficient by helping them think before taking action.

How does adaptive RAG work?

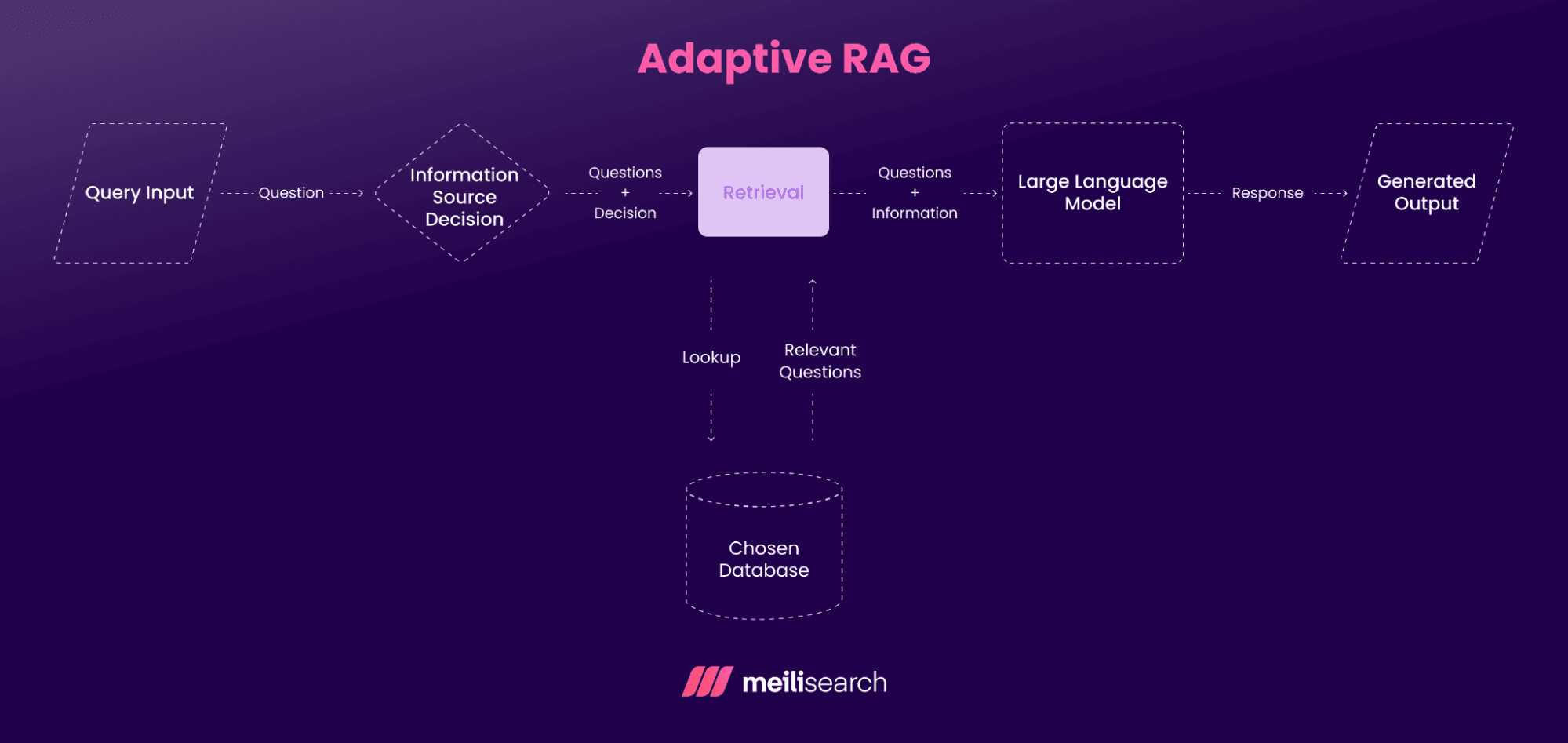

Adaptive RAG works by integrating decision-making into the traditional RAG. It understands your question before deciding on how best to approach it.

Here is how it works, step by step:

- Query analysis: Adaptive RAG first analyzes the type of user query it receives. Is it simple or complex? Does it just require the latest, up-to-date information or more thorough research? This analysis usually involves a query complexity classifier (a smaller language model trained to evaluate the complexity of the query) and helps direct the system toward question-answering or retrieval.

- Strategy selection: Based on the analysis, the system determines the appropriate strategy. For simple queries, it might skip retrieval entirely and let the model answer directly. If the question is complex, it might trigger a search through internal documents or an external knowledge base (such as arXiv and GitHub for research and code). It might even use multiple sources or real-time datasets to answer the question.

- Retrieval phase: If retrieval is required for a query, the system pulls relevant documents using retrieval methods such as vector search, keyword search, or both. That can involve a vectorstore or vector database populated with embedding vectors, or conventional keyword indexes. It can search more than once for complex questions, refining its approach each time.

- Generation phase: This step involves the language model. The system generates a response using the retrieved documents (if there are any). If no documents were retrieved, it will just reply directly. You can also add automated metrics, such as an answer grader or retrieval grader, to score the generated answer and refine the workflow.
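
The four steps above can be sketched in a few lines of plain Python. This is a minimal illustration, not a production design: the keyword heuristic, the in-memory `KNOWLEDGE_BASE`, and the `llm` callable are all hypothetical stand-ins for a trained classifier, a real retriever, and a real model call.

```python
from typing import Callable, Dict, List

# Hypothetical in-memory knowledge base standing in for a real retriever.
KNOWLEDGE_BASE: List[str] = [
    "The returns policy allows refunds within 30 days of delivery.",
    "Orders ship within two business days of payment.",
]

# Hypothetical trigger words; a real system would use a trained classifier.
LOOKUP_WORDS = {"policy", "refund", "refunds", "order", "orders", "ship"}


def analyze_query(query: str) -> str:
    """Step 1: label the query 'simple' or 'complex' with a naive heuristic."""
    words = [w.strip("?.,!") for w in query.lower().split()]
    needs_lookup = any(w in LOOKUP_WORDS for w in words)
    return "complex" if needs_lookup or len(words) > 12 else "simple"


def select_strategy(label: str) -> str:
    """Step 2: map the label to a strategy name."""
    return "retrieve" if label == "complex" else "direct"


def retrieve(query: str, top_k: int = 2) -> List[str]:
    """Step 3: rank documents by how many query words they share."""
    terms = {w.strip("?.,!") for w in query.lower().split()}
    scored = [
        (len(terms & set(doc.lower().rstrip(".").split())), doc)
        for doc in KNOWLEDGE_BASE
    ]
    return [doc for score, doc in sorted(scored, reverse=True)[:top_k] if score > 0]


def generate(query: str, context: List[str], llm: Callable[[str], str]) -> str:
    """Step 4: call the model with or without retrieved context."""
    if not context:
        return llm(query)
    return llm(f"Context: {' '.join(context)}\nQuestion: {query}")


def answer(query: str, llm: Callable[[str], str]) -> Dict[str, object]:
    """Run the four steps in order and report which path was taken."""
    strategy = select_strategy(analyze_query(query))
    context = retrieve(query) if strategy == "retrieve" else []
    return {"strategy": strategy, "context": context, "answer": generate(query, context, llm)}


if __name__ == "__main__":
    fake_llm = lambda prompt: f"(model output for: {prompt[:40]})"  # stand-in for an LLM call
    print(answer("Hello there", fake_llm)["strategy"])
    print(answer("What is the refund policy?", fake_llm)["strategy"])
```

Keeping each step as its own function makes it easy to swap the heuristic for a small classifier model later without touching retrieval or generation.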

Let’s see the key features of adaptive RAG.

What are the key features of adaptive RAG?

Let’s list the most important features of adaptive RAG and why they’re useful.

1. Dynamic retrieval

Dynamic retrieval decides whether information retrieval is necessary. When it is, this feature evaluates the usefulness of the results and can adapt by choosing the most suitable retriever for the context rather than relying on a single system.

2. Query complexity analysis

Not every query needs a deep search. The first thing adaptive RAG does is to analyze how complex the query is. This helps to avoid wasting resources on simple questions while ensuring the complex ones are accurately answered.

3. Using multiple sources

Adaptive RAG is not limited to a single data source. It can pull information from internal databases, external APIs, and real-time web searches. This allows it to generate more comprehensive answers.
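
As a rough sketch of how results from several sources might be combined, the function below merges hit lists and keeps the best-scoring copy of each document. The `Hit` shape (`id`, `score`, `source`) is an assumption made for this example, and it also assumes the scores from different sources are comparable, which often requires normalization in practice.

```python
from typing import Dict, Iterable, List

# Assumed hit shape for illustration: {"id": str, "score": float, "source": str}
Hit = Dict[str, object]


def merge_hits(*sources: Iterable[Hit], limit: int = 5) -> List[Hit]:
    """Combine hits from several retrievers, keeping the best-scoring copy of each id."""
    best: Dict[object, Hit] = {}
    for hits in sources:
        for hit in hits:
            current = best.get(hit["id"])
            if current is None or hit["score"] > current["score"]:
                best[hit["id"]] = hit
    # Highest score first, truncated to the requested number of results.
    return sorted(best.values(), key=lambda h: h["score"], reverse=True)[:limit]


if __name__ == "__main__":
    internal = [
        {"id": "a", "score": 0.9, "source": "internal"},
        {"id": "b", "score": 0.4, "source": "internal"},
    ]
    web = [
        {"id": "b", "score": 0.7, "source": "web"},
        {"id": "c", "score": 0.2, "source": "web"},
    ]
    for hit in merge_hits(internal, web, limit=2):
        print(hit["id"], hit["source"], hit["score"])
```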

4. Iterative retrieval approach

Sometimes, one search is not enough, especially when dealing with open-ended questions. Adaptive RAG performs multiple searches, adjusting retrievers, re-ranking hits, and refining queries through a small orchestration router or pipeline composed of nodes, to converge on better results.
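
The iterative idea can be illustrated with a small loop that searches, checks whether enough results came back, and refines the query if not. Everything here is a simplified assumption: `search` and `refine` are caller-supplied functions, and the toy `CORPUS`, `all_terms_search`, and `drop_last_word` helpers exist only to make the sketch runnable.

```python
from typing import Callable, List, Tuple


def iterative_retrieve(
    query: str,
    search: Callable[[str], List[str]],
    refine: Callable[[str, int], str],
    min_hits: int = 2,
    max_rounds: int = 3,
) -> Tuple[List[str], List[str]]:
    """Search, and refine the query until enough hits are found or rounds run out.

    Returns the hits from the last search and the list of queries that were tried.
    """
    tried: List[str] = []
    hits: List[str] = []
    current = query
    for round_no in range(1, max_rounds + 1):
        tried.append(current)
        hits = search(current)
        if len(hits) >= min_hits:
            break
        current = refine(current, round_no)
    return hits, tried


# Toy corpus and helpers, purely for demonstration.
CORPUS = [
    "tram line opens downtown",
    "tram schedule changes",
    "marathon draws record crowd",
]


def all_terms_search(query: str) -> List[str]:
    """Return documents that contain every query term as a whole word."""
    terms = query.lower().split()
    return [doc for doc in CORPUS if all(t in doc.split() for t in terms)]


def drop_last_word(query: str, round_no: int) -> str:
    """Broaden the query by removing its last word."""
    words = query.split()
    return " ".join(words[:-1]) if len(words) > 1 else query


if __name__ == "__main__":
    hits, tried = iterative_retrieve("tram line timetable", all_terms_search, drop_last_word)
    print(tried)
    print(hits)
```

In a real pipeline, `refine` might call an LLM to rewrite the query or switch retrievers instead of simply dropping a word.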

Let’s check out the benefits of adaptive RAG.

What are the benefits of adaptive RAG?

Adaptive RAG is more than just applying RAG techniques like retrieving and generating; it’s about doing both intelligently.

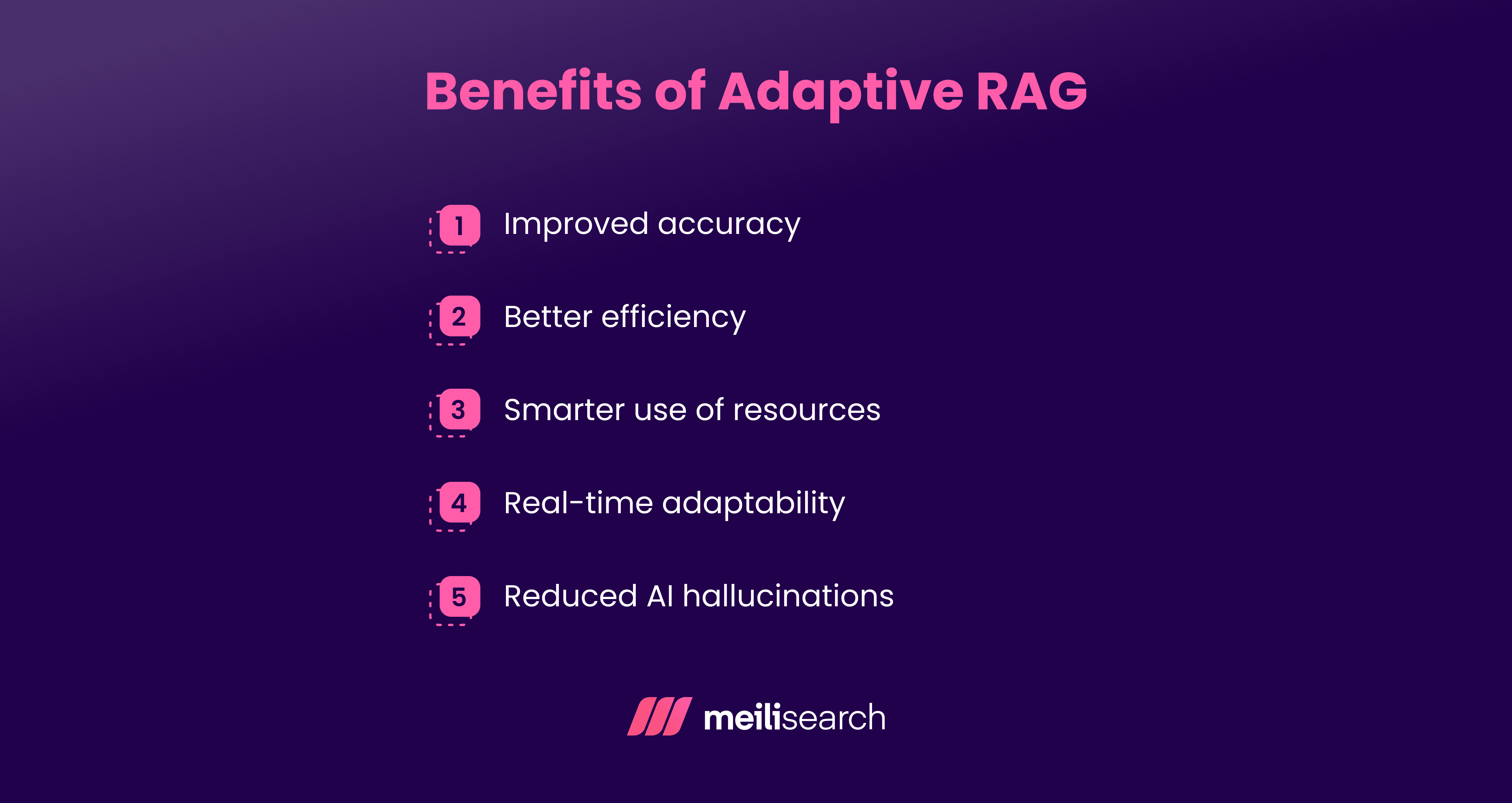

Here are the benefits that help adaptive RAG produce more accurate and efficient responses.

- Improved accuracy: Adaptive RAG generates more accurate answers because it only retrieves highly relevant information.

- Better efficiency: Not every question requires a full search, and adaptive RAG understands that. You get faster answers for simple queries, and the system only pulls in extra information when it helps improve the final response.

- Smarter use of resources: Adaptive RAG avoids unnecessary searches, thereby requiring less computing power. This is valuable for scalability, as it also speeds up responses and lowers costs.

- Real-time adaptability: As the name implies, adaptive RAG adjusts its strategy to the query at hand instead of following one fixed path. It is built to stay flexible and responsive on demand.

- Reduced hallucinations: Adaptive RAG is less likely to guess when returning results because it only pulls in real data when needed. The search is more focused, and the answers are based on facts.

When should you use adaptive RAG instead of standard RAG?

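Adaptive RAG and standard RAG suit different scenarios. Standard RAG runs the same retrieval step for every query, while adaptive RAG pays off when queries vary in complexity or in how fresh the information needs to be.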

Let’s look at scenarios where adaptive RAG can be used instead of standard RAG:

- Looking for real-time information: Imagine you are writing a research paper about electric cars and you ask, ‘What is the market share of Tesla cars in 2025?’ Standard RAG might provide outdated information based on previous years. Adaptive RAG recognizes that this requires real-time data, triggering a web search and getting the latest information.

- Checking for health symptoms: If someone types ‘I have a sore throat,’ that is easy to respond to. But questions such as ‘Why do I keep getting sore throats after flying?’ require deeper context. Adaptive RAG retrieves relevant information from trusted health sources only when needed, reducing the risk of providing incorrect medical advice.

- Working with limited computing resources: Adaptive RAG is better if you work in a setting with limited processing power, such as mobile apps. It avoids complete retrieval unless necessary, which means faster responses and reduced computing demands on your system.

- Asking research-based questions: Adaptive RAG is excellent for combining multiple sources during research, escalating from single-step retrieval to multi-step retrieval as needed.

What are common use cases for adaptive RAG?

Adaptive RAG is useful in cases where search quality, relevance, and speed matter.

Here are some practical applications of using adaptive RAG in the real world:

- Customer support for e-commerce: In a retail chatbot, you might ask, ‘When is my order ready?’ (a simple query) while another person asks, ‘Why was I charged twice last month?’ (a complex query). Adaptive RAG can directly answer the first question and retrieve the order history or billing policies for the second question.

- Conversational AI: Imagine a student chatting with an AI tutor and asking, ‘What is photosynthesis?’ Then, they follow up with, ‘How does light intensity affect photosynthesis in different plant species?’ Adaptive RAG knows that the second question requires more research. It keeps the conversation flowing by giving quick answers when it can and pulling in research materials when it matters.

- Knowledge management: Organizations use adaptive RAG to make their internal knowledge accessible and usable. Instead of employees wasting time digging through hundreds of documents, it figures out where the answer is and retrieves it.

Let’s see some tools used to develop adaptive RAG.

What tools support adaptive RAG development?

Instead of forcing developers to piece everything together from scratch, adaptive RAG tools handle the complex parts, such as knowing when to search, where to search, and how to combine results.

The following tools are used in different adaptive RAG applications:

- Meilisearch: A lightweight, fast, and customizable retriever in adaptive RAG setups. It supports real-time indexing and semantic filtering and can refine search results based on the intent behind the query, making it a valuable tool for dynamic retrieval.

- LangChain: A robust framework that connects language models to external data. It comes with pre-built components for different retrieval strategies and makes it easy to switch between them. It is good for building adaptive pipelines that adjust to query complexity.

- Weaviate: An open-source vector database combining traditional keyword and semantic searches. It is excellent for AI systems that need to decide whether a question requires an exact match or a meaning-based retrieval.

- Chroma: A lightweight, open-source embedding database that is simple to set up and integrate. It works well for building adaptive RAG prototypes, especially when you want to fine-tune retrieval strategies based on query types.

Most adaptive RAG tools fall into three categories: fast keyword search engines, vector databases for semantic matches, and orchestration frameworks that tie them all together.

Your choice depends on whether you prioritize speed, accuracy, or semantic understanding. You can also balance all three to fit your use case.

How do you implement adaptive RAG?

We will build a coding example to demonstrate how to implement adaptive RAG.

Let’s say we need a smart assistant to answer questions about our company. We will build an adaptive RAG that:

- Automatically tries a broader search if the first attempt doesn't find enough results.

- Leans on exact keyword matching or semantic search, depending on its understanding of your question.

Let's build this step by step!

1. Setting Meilisearch up

If you currently do not have Meilisearch, install it with the command:

    # Install Meilisearch
    curl -L https://install.meilisearch.com | sh

You spin up the server with:

    # Launch Meilisearch
    ./meilisearch --master-key="aSampleMasterKey"

In Python, it is good practice to put credentials in a .env file. Create one and add the following credentials: MEILI_URL, MEILI_KEY, and OPENAI_API_KEY.

**Note:** You need to get an OpenAI API key to create this RAG.
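
Before going further, it can help to fail fast when a credential is missing. The helper below is an optional sketch, not part of the original demo: `load_settings` and `REQUIRED_VARS` are names introduced here for illustration, and the function accepts any mapping so it can be checked without touching the real environment.

```python
import os
from typing import Dict, Mapping, Sequence

# The three variables named above; adjust if your setup differs.
REQUIRED_VARS = ("MEILI_URL", "MEILI_KEY", "OPENAI_API_KEY")


def load_settings(
    env: Mapping[str, str] = os.environ,
    required: Sequence[str] = REQUIRED_VARS,
) -> Dict[str, str]:
    """Return the required settings, or raise with the names of any that are missing."""
    missing = [name for name in required if not env.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return {name: env[name] for name in required}


if __name__ == "__main__":
    # Sample values only; in the demo these would come from the .env file.
    sample = {
        "MEILI_URL": "http://127.0.0.1:7700",
        "MEILI_KEY": "aSampleMasterKey",
        "OPENAI_API_KEY": "sk-placeholder",
    }
    print(sorted(load_settings(sample)))
```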

2. Importing relevant libraries and credentials

Create a Python file to store the code. Let’s call it ‘adaptive_news_demo.py’. First, import the libraries we will use and the necessary credentials.

We have also named our search index ‘news’.

    from __future__ import annotations

    import os
    from dataclasses import dataclass
    from typing import Any, Dict, List, Optional

    import meilisearch
    from dotenv import load_dotenv
    from openai import OpenAI

    # Load environment variables (your API keys)
    load_dotenv()

    MEILI_URL = os.getenv("MEILI_URL", "http://127.0.0.1:7700")
    MEILI_KEY = os.getenv("MEILI_KEY", "aSampleMasterKey")
    OPENAI_API_KEY = os.environ["OPENAI_API_KEY"]

    # Create connections to our services
    ms = meilisearch.Client(MEILI_URL, MEILI_KEY)
    oa = OpenAI(api_key=OPENAI_API_KEY)

    INDEX = "news"  # Name of our search index

3. Creating a smart search strategy

Not all questions are the same, so we shouldn't search for answers the same way every time.

We will create different approaches using Python's dataclass feature to organize our strategies.

We will have three strategies (conservative, broad, and semantic-focused) based on three attributes:

- Semantic ratio: Controls how much our search focuses on understanding the meaning of words.

- Limit: How many results to retrieve.

- Filter expression: Any filter to be considered when retrieving results.

    @dataclass
    class SearchConfig:
        """Configuration for different search strategies"""

        semantic_ratio: float
        limit: int
        filter_expr: Optional[str] = None

        @classmethod
        def conservative(cls) -> "SearchConfig":
            """Conservative search: more full-text, fewer results, filtered"""
            return cls(semantic_ratio=0.3, limit=5, filter_expr='lang = "en"')

        @classmethod
        def broad(cls) -> "SearchConfig":
            """Broad search: balanced hybrid, more results, no filter"""
            return cls(semantic_ratio=0.5, limit=10, filter_expr=None)

        @classmethod
        def semantic_focused(cls) -> "SearchConfig":
            """Semantic-focused search for conceptual queries"""
            return cls(semantic_ratio=0.8, limit=8, filter_expr=None)

Here are the best use cases for each of our strategy types:

- Conservative: Perfect for ‘When did X happen?’

- Broad: Good fallback when initial searches don't find enough results.

- Semantic-focused: Ideal for ‘How does X work?’

4. Preparing sample documents

We need some documents to search through. In a real system, these would come from your database, but we will use a mock collection of news articles for our example.

    DOCS: List[Dict[str, Any]] = [
        {
            "id": "n1",
            "title": "City Marathon Draws Record Crowd",
            "text": "The city marathon drew over 20,000 runners and raised funds for local schools. The event was the largest in the city's history.",
            "date": "2025-08-09",
            "category": "sports",
            "lang": "en",
            "source": "local_sports.md"
        },
        {
            "id": "n2",
            "title": "New Tram Line Opens Downtown",
            "text": "A new tram line began service downtown, cutting commute times by 15 minutes. The route connects the business district to residential areas.",
            "date": "2025-08-10",
            "category": "city",
            "lang": "en",
            "source": "city_transport.md"
        },
        {
            "id": "n3",
            "title": "Tech Expo Highlights Energy-Saving Devices",
            "text": "Expo exhibitors showcased smart thermostats and efficient lighting systems. The technology promises to reduce household energy consumption by up to 30%.",
            "date": "2025-08-08",
            "category": "tech",
            "lang": "en",
            "source": "tech_expo.md"
        },
        {
            "id": "n4",
            "title": "Local Restaurant Wins Sustainability Award",
            "text": "Green Bistro received recognition for its farm-to-table practices and zero-waste initiative. The restaurant sources 90% of ingredients locally.",
            "date": "2025-08-11",
            "category": "food",
            "lang": "en",
            "source": "food_news.md"
        }
    ]

5. Building the main RAG class

Now we'll create our main system class that brings everything together. This organizes all our functionality in one place and ensures our search index is properly set up when we create our RAG system.

    class MeilisearchRAG:
        def __init__(self):
            self.index = self._ensure_index()
            self.embedders_available = self._check_embedders()

6. Setting up the search index

We will create a search index that supports both traditional keyword search and semantic search. This lets the RAG system blend the two for each query.

        def _ensure_index(self) -> meilisearch.index.Index:
            """Initialize index with proper configuration for hybrid search"""
            try:
                task = ms.create_index(INDEX, {"primaryKey": "id"})
                # Index creation is asynchronous, so wait before reading stats
                ms.wait_for_task(task.task_uid)
            except meilisearch.errors.MeilisearchApiError:
                pass  # Index already exists

            idx = ms.index(INDEX)

            # Configure for hybrid search
            idx.update_settings({
                "filterableAttributes": ["lang", "category", "date", "source"],
                "searchableAttributes": ["title", "text"],
                "sortableAttributes": ["date"],
                "rankingRules": [
                    "words",      # How many search terms match
                    "typo",       # Handle spelling mistakes
                    "proximity",  # How close words are to each other
                    "attribute",  # Title matches rank higher than text matches
                    "sort",       # Custom sorting (like by date)
                    "exactness"   # Exact matches rank higher
                ]
            })

            # Add documents if index is empty
            stats = idx.get_stats()
            if getattr(stats, "number_of_documents", 0) == 0:
                task = idx.add_documents(DOCS)
                # Wait so the first query does not run against an empty index
                ms.wait_for_task(task.task_uid)

            return idx

Note:

- filterableAttributes defines which fields we can filter by (e.g., ‘only English articles’).

- searchableAttributes defines which fields to search in (title and text content).

- rankingRules defines how the search should prioritize results. This is Meilisearch's secret sauce for relevance.

Also, to know whether embedders are available, we can create a function that checks for them.

        def _check_embedders(self) -> bool:
            """Check if embedders are properly configured"""
            try:
                settings = self.index.get_settings()
                embedders = settings.get("embedders", {})
                return bool(embedders and "default" in embedders)
            except Exception:
                return False

7. Understanding different question types

Our system needs to automatically recognize what kind of question the user is asking so it can choose the right search strategy.

We will classify queries based on keywords into the following categories:

- News queries: Need the most recent, specific information

- Factual queries: Need precise keyword matching

- Conceptual queries: Benefit from semantic understanding

- General queries: Get a balanced approach

        def _classify_query(self, query: str) -> str:
            """Classify query type to determine search strategy"""
            query_lower = query.lower()

            # News/time-sensitive queries
            if any(word in query_lower for word in ["latest", "today", "news", "recent", "current"]):
                return "news"

            # Specific factual queries (good for keyword search)
            if any(word in query_lower for word in ["what", "when", "where", "how many", "specific"]):
                return "factual"

            # Conceptual queries (good for semantic search)
            if any(word in query_lower for word in ["why", "how", "explain", "about", "like", "similar"]):
                return "conceptual"

            return "general"
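
One caveat with substring checks is that a keyword can match inside a longer word: ‘how’ appears in ‘show’, and ‘what’ in ‘somewhat’. The standalone variant below is a possible refinement, not the demo's own method. It keeps the same keyword lists and order but matches whole words using regular-expression word boundaries. `RULES` and `classify_query` are names introduced for this sketch.

```python
import re
from typing import List, Tuple

# Same keywords and priority order as the classifier above.
RULES: List[Tuple[str, List[str]]] = [
    ("news", ["latest", "today", "news", "recent", "current"]),
    ("factual", ["what", "when", "where", "how many", "specific"]),
    ("conceptual", ["why", "how", "explain", "about", "like", "similar"]),
]


def classify_query(query: str) -> str:
    """Return the first label whose keyword appears as a whole word or phrase."""
    text = query.lower()
    for label, keywords in RULES:
        for keyword in keywords:
            # \b anchors the match to word boundaries, so "how" no longer matches "show".
            if re.search(rf"\b{re.escape(keyword)}\b", text):
                return label
    return "general"


if __name__ == "__main__":
    for q in [
        "What is the latest news about AI?",
        "How many runners joined the marathon?",
        "Why is the sky blue?",
        "Show me the tram schedule",
    ]:
        print(f"{q!r} -> {classify_query(q)}")
```

With plain substring matching, the last query would be labeled conceptual because ‘show’ contains ‘how’. With word boundaries, it falls through to general.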

8. Implementing the core search logic

Now, we can perform the search using our configured strategies. We use the hybrid parameter with semanticRatio to take advantage of Meilisearch's built-in way of balancing keyword and semantic search.

        def _search_with_config(self, query: str, config: SearchConfig) -> List[Dict[str, Any]]:
            """Perform search with given configuration"""
            search_params: Dict[str, Any] = {"limit": config.limit}

            if config.filter_expr:
                search_params["filter"] = config.filter_expr

            # Only use hybrid search if embedders are available
            if self.embedders_available:
                search_params["hybrid"] = {
                    "semanticRatio": config.semantic_ratio,
                    "embedder": "default",
                }

            try:
                results = self.index.search(query, search_params)
                return results.get("hits", [])
            except Exception as e:
                print(f"Search failed: {e}")
                return []

9. Building the adaptive search

Now, we can build the ‘adaptive’ part. Our system tries one search strategy; if it doesn't find enough good results, it automatically tries a different approach.

        def adaptive_search(self, query: str) -> List[Dict[str, Any]]:
            """Adaptive search that tries different strategies based on results"""
            query_type = self._classify_query(query)

            # Choose initial strategy based on query type
            if query_type == "news":
                initial_config = SearchConfig.conservative()
            elif query_type == "factual":
                initial_config = SearchConfig.conservative()
            elif query_type == "conceptual":
                initial_config = SearchConfig.semantic_focused()
            else:
                initial_config = SearchConfig.conservative()

            # Try initial search
            hits = self._search_with_config(query, initial_config)

            # Adaptive fallback if results are insufficient
            if len(hits) < 2:
                print(f"Initial search returned {len(hits)} results, trying broader search...")
                fallback_config = SearchConfig.broad()
                hits = self._search_with_config(query, fallback_config)

            return hits

10. Formatting the results for the LLM

Once we have search results, we need to format them in a way that the LLM can easily understand and cite properly.

Each result gets a number [1], [2], etc., that the LLM can use for citations.

        def format_context(self, hits: List[Dict[str, Any]]) -> str:
            """Format search results for LLM context"""
            if not hits:
                return "No relevant documents found."

            context_parts = []
            for i, hit in enumerate(hits, 1):
                title = hit.get('title', 'Untitled')
                text = hit.get('text', '')
                date = hit.get('date', 'Unknown date')
                source = hit.get('source', 'Unknown source')
                context_parts.append(
                    f"[{i}] {title} {text} (Date: {date}, Source: {source})"
                )

            return " ".join(context_parts)
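
To see what the LLM receives, here is a standalone sketch of the same numbering idea. It is a variation for illustration, not the demo's method: it separates entries with line breaks and adds a hypothetical `max_chars` parameter to cap long passages, neither of which is in the code above.

```python
from typing import Any, Dict, List


def format_context(hits: List[Dict[str, Any]], max_chars: int = 500) -> str:
    """Number each hit so the model can cite it as [1], [2], and so on."""
    if not hits:
        return "No relevant documents found."

    blocks = []
    for i, hit in enumerate(hits, 1):
        title = hit.get("title", "Untitled")
        text = hit.get("text", "")[:max_chars]  # cap long passages (assumed limit)
        date = hit.get("date", "Unknown date")
        source = hit.get("source", "Unknown source")
        blocks.append(f"[{i}] {title}\n{text}\n(Date: {date}, Source: {source})")

    # A blank line between entries keeps the citations visually distinct.
    return "\n\n".join(blocks)


if __name__ == "__main__":
    sample_hits = [
        {
            "title": "New Tram Line Opens Downtown",
            "text": "A new tram line began service downtown.",
            "date": "2025-08-10",
            "source": "city_transport.md",
        }
    ]
    print(format_context(sample_hits))
```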

11. Generating smart answers with context

Now, we can pass the query to the LLM. We have two paths: direct answers for general knowledge, and context-based answers for information from our documents.

        def generate_response(self, query: str, context: str) -> str:
            """Generate response using OpenAI with retrieved context"""
            if not context or context == "No relevant documents found.":
                # Direct answer for non-RAG queries
                response = oa.chat.completions.create(
                    model="gpt-4o-mini",
                    messages=[
                        {"role": "system", "content": "You are a helpful assistant. Answer clearly and concisely in 2-3 sentences."},
                        {"role": "user", "content": query}
                    ],
                    max_tokens=200,
                    temperature=0.7
                )
                return response.choices[0].message.content

            # RAG response with context
            system_prompt = (
                "You are a helpful assistant that answers questions based on the provided context. "
                "Use ONLY the information in the context to answer. "
                "Cite sources using [1], [2], etc. "
                "If the context doesn't contain relevant information, say so clearly."
            )

            user_prompt = f"Query: {query}\n\nContext:\n{context}\n\nAnswer:"

            response = oa.chat.completions.create(
                model="gpt-4o-mini",
                messages=[
                    {"role": "system", "content": system_prompt},
                    {"role": "user", "content": user_prompt}
                ],
                max_tokens=300,
                temperature=0.3
            )
            return response.choices[0].message.content

12. Building the method that returns the answer

This brings everything together.

        def answer(self, query: str) -> str:
            """Main method to answer queries with adaptive RAG"""
            query_type = self._classify_query(query)

            # For non-news queries, consider if RAG is needed
            if query_type not in ["news", "factual"] and not any(
                word in query.lower() for word in ["latest", "recent", "current", "today"]
            ):
                # Try direct answer first for general knowledge
                direct_response = self.generate_response(query, "")
                return f"Direct answer: {direct_response}"

            # Use RAG for news and factual queries
            hits = self.adaptive_search(query)
            context = self.format_context(hits)
            return self.generate_response(query, context)
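
The routing condition in this method can be pulled out into a small pure function, which makes it easy to test without calling Meilisearch or OpenAI. This is one possible refactoring, not part of the original demo. `needs_retrieval` and `TIME_WORDS` are names introduced for illustration.

```python
# Time-sensitive keywords, copied from the condition in answer().
TIME_WORDS = ("latest", "recent", "current", "today")


def needs_retrieval(query_type: str, query: str) -> bool:
    """Return True when the query should go through search before generation."""
    # News and factual queries always use retrieval.
    if query_type in ("news", "factual"):
        return True
    # Other query types use retrieval only if they mention something time-sensitive.
    return any(word in query.lower() for word in TIME_WORDS)


if __name__ == "__main__":
    print(needs_retrieval("conceptual", "Why is the sky blue?"))
    print(needs_retrieval("news", "What is the latest news about AI?"))
```

The function returns True exactly when the `if` condition in `answer` is false, so `answer` could call it and take the direct path whenever it returns False.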

13. Testing our code from the CLI

Finally, we add a simple way to test our system from the command line.

    def main():
        import argparse

        parser = argparse.ArgumentParser(description="Adaptive RAG with Meilisearch")
        parser.add_argument("--query", required=True, help="Query to process")
        parser.add_argument("--debug", action="store_true", help="Show debug information")
        args = parser.parse_args()

        rag = MeilisearchRAG()

        if args.debug:
            print(f"Processing query: {args.query}")
            print(f"Query type: {rag._classify_query(args.query)}")
            print("-" * 50)

        answer = rag.answer(args.query)
        print(answer)


    if __name__ == "__main__":
        main()

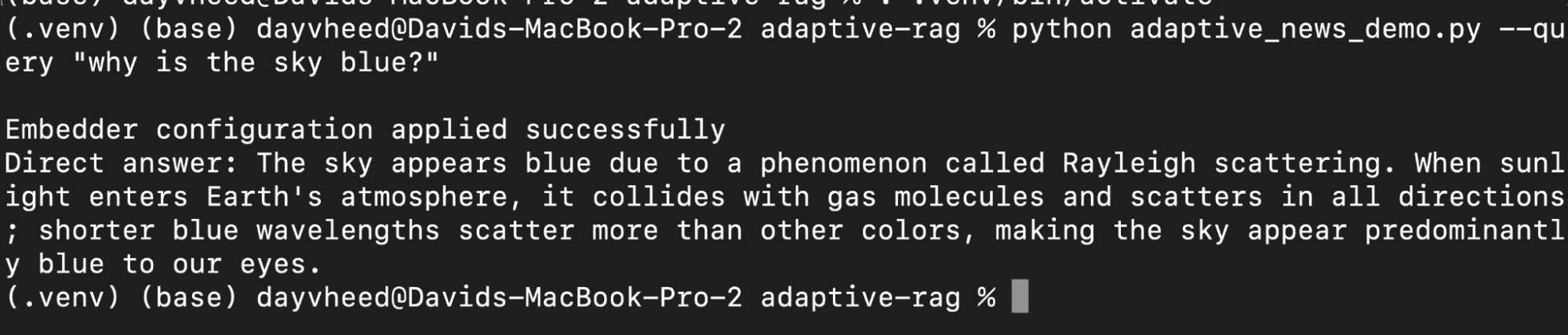

In a terminal, we can run a simple query: ‘Why is the sky blue?’ The classifier labels this as a conceptual question with no time-sensitive keywords, so the system skips retrieval and returns the answer directly.

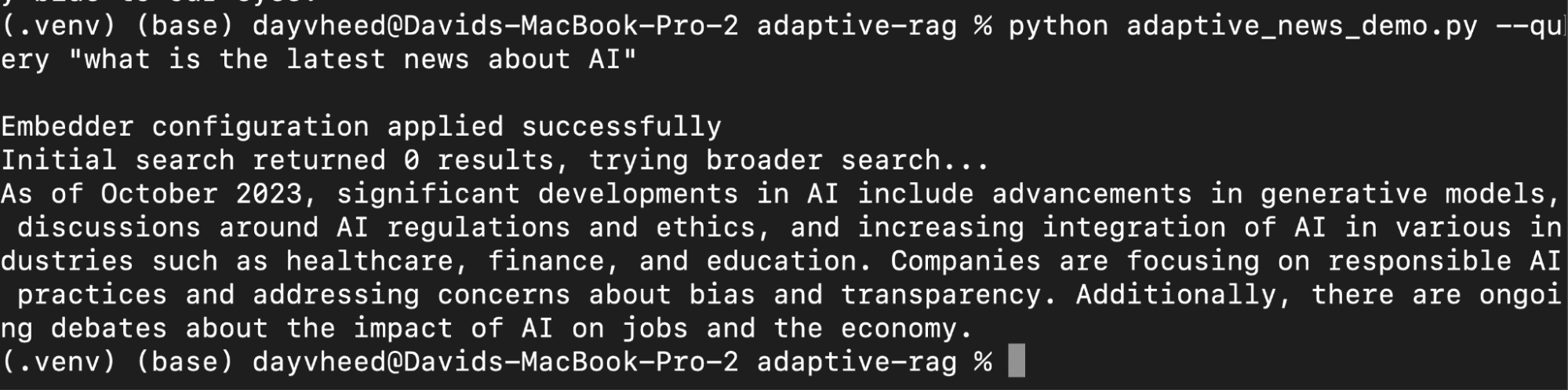

We can also ask a question that triggers retrieval: ‘What is the latest news about AI?’

As can be seen, the initial search returned zero results because none of the sample documents mention AI. Our system then fell back to the broader search strategy. When no documents are found at all, the LLM answers the question directly, without retrieved context.

Smarter retrieval starts with adaptation

The key to smarter retrieval is not just finding information; it is about knowing how to search and when to dig deeper.

Instead of treating every query the same, adaptive RAG adjusts its strategy based on what the question needs. If the answer to a question is a simple fact, it goes straight to the point. But if the question requires more context, it adjusts its approach, sharpening its search after every step.